diff --git a/README.md b/README.md

index da4c7e1..c41c1c4 100644

--- a/README.md

+++ b/README.md

@@ -3,37 +3,18 @@ CIS565: Project 6 -- Deferred Shader

-------------------------------------------------------------------------------

Fall 2014

-------------------------------------------------------------------------------

-Due Wed, 11/12/2014 at Noon

--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

-NOTE:

--------------------------------------------------------------------------------

-This project requires any graphics card with support for a modern OpenGL

-pipeline. Any AMD, NVIDIA, or Intel card from the past few years should work

-fine, and every machine in the SIG Lab and Moore 100 is capable of running

-this project.

+[YouTube](https://www.youtube.com/watch?v=ggUH_oqFYuo&feature=youtu.be)

-This project also requires a WebGL capable browser. The project is known to

-have issues with Chrome on windows, but Firefox seems to run it fine.

+[Live Demo](http://xjma.github.io/Project6-DeferredShader/)

-------------------------------------------------------------------------------

INTRODUCTION:

-------------------------------------------------------------------------------

-In this project, you will get introduced to the basics of deferred shading. You will write GLSL and OpenGL code to perform various tasks in a deferred lighting pipeline such as creating and writing to a G-Buffer.

-

--------------------------------------------------------------------------------

-CONTENTS:

--------------------------------------------------------------------------------

-The Project5 root directory contains the following subdirectories:

-

-* js/ contains the javascript files, including external libraries, necessary.

-* assets/ contains the textures that will be used in the second half of the

- assignment.

-* resources/ contains the screenshots found in this readme file.

+In this project, I write GLSL and OpenGL code to perform various tasks in a deferred lighting pipeline, such as creating and writing to a G-buffer. This project requires a graphics card that supports a deferred shading pipeline.

- This Readme file edited as described above in the README section.

+

-------------------------------------------------------------------------------

OVERVIEW:

@@ -69,154 +50,62 @@ WASDRF - Movement (along w the arrow keys)

* 2 - Normals

* 3 - Color

* 4 - Depth

+* 5 - Blinn-Phong shading

+* 6 - Bloom shading

+* 7 - Toon shading

+* 8 - SSAO

* 0 - Full deferred pipeline

There are also mouse controls for camera rotation.

-------------------------------------------------------------------------------

-REQUIREMENTS:

+Blinn-Phong:

-------------------------------------------------------------------------------

-In this project, you are given code for:

-* Loading .obj file

-* Deferred shading pipeline

-* GBuffer pass

+The diffuse and Blinn-Phong specular shading is implemented in the lighting pass, and the accumulation stage writes the result to the P-buffer.
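
To make the lighting math easier to follow, here is a minimal CPU-side sketch of the diffuse and Blinn-Phong terms in JavaScript. It is only an illustration, not the shader itself: the helper names and the `shininess` parameter are hypothetical (the shader hard-codes an exponent of 80.0), positions are assumed to be in eye space so the camera sits at the origin, and the diffuse term uses the `max(dot, 0)` form rather than the `abs` variant.

```javascript
// Illustrative CPU version of the diffuse + Blinn-Phong terms.
// Vectors are plain [x, y, z] arrays in eye space (camera at the origin).

function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

function add(a, b) {
  return [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
}

function sub(a, b) {
  return [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
}

function normalize(v) {
  const len = Math.sqrt(dot(v, v));
  // Guard against zero-length vectors instead of dividing by zero.
  return len > 0 ? [v[0] / len, v[1] / len, v[2] / len] : [0, 0, 0];
}

// shininess is a hypothetical parameter introduced for this sketch.
function blinnPhong(normal, position, lightPos, shininess) {
  const n = normalize(normal);
  const lightDir = normalize(sub(lightPos, position));
  const viewDir = normalize(sub([0, 0, 0], position));
  // The half vector sits between the light and view directions.
  const halfDir = normalize(add(lightDir, viewDir));
  const diffuse = Math.max(dot(n, lightDir), 0);
  const specular = Math.pow(Math.max(dot(halfDir, n), 0), shininess);
  return { diffuse: diffuse, specular: specular };
}
```

A call such as `blinnPhong([0, 0, 1], [0, 0, -1], [0, 0, 0], 80)` returns the two scalar terms, which would then be combined with the surface color as `diffuse * color + specular`.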

-You are required to implement:

-* Either of the following effects

- * Bloom

- * "Toon" Shading (with basic silhouetting)

-* Screen Space Ambient Occlusion

-* Diffuse and Blinn-Phong shading

-

-**NOTE**: Implementing separable convolution will require another link in your pipeline and will count as an extra feature if you do performance analysis with a standard one-pass 2D convolution. The overhead of rendering and reading from a texture _may_ offset the extra computations for smaller 2D kernels.

-

-You must implement two of the following extras:

-* The effect you did not choose above

-* Compare performance to a normal forward renderer with

- * No optimizations

- * Coarse sort geometry front-to-back for early-z

- * Z-prepass for early-z

-* Optimize g-buffer format, e.g., pack things together, quantize, reconstruct z from normal x and y (because it is normalized), etc.

- * Must be accompanied with a performance analysis to count

-* Additional lighting and pre/post processing effects! (email first please, if they are good you may add multiple).

+

-------------------------------------------------------------------------------

-RUNNING THE CODE:

+Bloom

-------------------------------------------------------------------------------

+Bloom is a post-processing effect. Normally, bloom is implemented with a texture that specifies the glow sources, which are then blurred. Here, however, I simply treat the whole object as a glow source and apply a Gaussian convolution to the color from the G-buffer.
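
As a rough illustration of the Gaussian weights such a convolution uses, the JavaScript sketch below builds a normalized 2D kernel on the CPU. The function name `gaussianKernel2D` is hypothetical, and the textbook formula here (exponent on the squared distance, weights normalized to sum to 1) is an assumption of the sketch rather than a copy of the shader code.

```javascript
// Build a size x size Gaussian kernel whose weights sum to 1.
// size should be odd so the kernel has a single center texel.
function gaussianKernel2D(size, sigma) {
  const half = (size - 1) / 2;
  const kernel = [];
  let sum = 0;
  for (let y = 0; y < size; y++) {
    const row = [];
    for (let x = 0; x < size; x++) {
      const fx = x - half;
      const fy = y - half;
      // Weight falls off with the squared distance from the center.
      const weight = Math.exp(-(fx * fx + fy * fy) / (2 * sigma * sigma));
      row.push(weight);
      sum += weight;
    }
    kernel.push(row);
  }
  // Normalize so that blurring does not change overall brightness.
  return kernel.map(function (row) {
    return row.map(function (weight) {
      return weight / sum;
    });
  });
}
```

With a 25 x 25 kernel and sigma of 2.0 (the values used in the post-processing shader), each output pixel is the weighted sum of 625 neighboring texels.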

-Since the code attempts to access files that are local to your computer, you

-will either need to:

-

-* Run your browser under modified security settings, or

-* Create a simple local server that serves the files

-

-

-FIREFOX: change ``strict_origin_policy`` to false in about:config

-

-CHROME: run with the following argument : `--allow-file-access-from-files`

-

-(You can do this on OSX by running Chrome from /Applications/Google

-Chrome/Contents/MacOS with `open -a "Google Chrome" --args

---allow-file-access-from-files`)

-

-* To check if you have set the flag properly, you can open chrome://version and

- check under the flags

-

-RUNNING A SIMPLE SERVER:

-

-If you have Python installed, you can simply run a simple HTTP server off your

-machine from the root directory of this repository with the following command:

-

-`python -m SimpleHTTPServer`

+

-------------------------------------------------------------------------------

-RESOURCES:

+"Toon" Shading (with basic silhouetting)

-------------------------------------------------------------------------------

-The following are articles and resources that have been chosen to help give you

-a sense of each of the effects:

+Toon shading is a non-photorealistic rendering technique used to give three-dimensional models a cartoonish or hand-drawn appearance. To make the image cartoonish, the final rendering should contain only a few colors, so I round the colors in the scene to a small color set. Basic silhouetting is achieved by comparing the depth of the object with that of the background to find the edges.
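
The two steps above can be sketched on the CPU as follows. This is a simplified JavaScript illustration under stated assumptions: `quantize` and `edgeStrength` are hypothetical names, the quantization rule is one common choice and not necessarily identical to the shader's `round` helper, and the depth buffer is assumed to be a row-major array of linearized depths.

```javascript
// Snap a channel value in [0, 1] to one of numColors evenly spaced levels.
function quantize(value, numColors) {
  const level = Math.min(Math.floor(value * numColors), numColors - 1);
  return level / (numColors - 1);
}

// Difference between a pixel's depth and the mean depth of its neighborhood.
// A large value suggests a silhouette edge. Coordinates are clamped at borders.
function edgeStrength(depth, width, height, x, y, radius) {
  let sum = 0;
  let count = 0;
  for (let dy = -radius; dy <= radius; dy++) {
    for (let dx = -radius; dx <= radius; dx++) {
      const sx = Math.min(Math.max(x + dx, 0), width - 1);
      const sy = Math.min(Math.max(y + dy, 0), height - 1);
      sum += depth[sy * width + sx];
      count++;
    }
  }
  return Math.abs(depth[y * width + x] - sum / count);
}

// Black outline on edges, flattened color elsewhere.
// threshold is a tunable assumption (the shader uses 0.005).
function toonPixel(color, strength, numColors, threshold) {
  if (strength > threshold) return [0, 0, 0];
  return color.map(function (c) {
    return quantize(c, numColors);
  });
}
```

For example, with three levels per channel every color component becomes 0, 0.5, or 1, which gives the flat, banded look.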

-* Bloom : [GPU Gems](http://http.developer.nvidia.com/GPUGems/gpugems_ch21.html)

-* Screen Space Ambient Occlusion : [Floored

- Article](http://floored.com/blog/2013/ssao-screen-space-ambient-occlusion.html)

+

-------------------------------------------------------------------------------

-README

+Screen Space Ambient Occlusion

-------------------------------------------------------------------------------

-All students must replace or augment the contents of this Readme.md in a clear

-manner with the following:

+Ambient occlusion is an approximation of the amount by which a point on a surface is occluded by the surrounding geometry. To compute it, I sample random positions within a hemisphere oriented along the surface normal at each pixel, then project each sample position into screen space to look up its depth in the depth buffer. If the depth buffer value is smaller than the sample position's depth, occlusion accumulates.
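
A minimal JavaScript sketch of the two main pieces follows: generating a hemisphere sample kernel (the kind of data that could be uploaded to a uniform array) and accumulating occlusion from depth comparisons. All names here are hypothetical, the seeded random generator exists only to make the sketch deterministic, and the range check mirrors the idea of ignoring samples across large depth discontinuities.

```javascript
// Small deterministic PRNG so the kernel is reproducible (illustrative only).
function makeRng(seed) {
  let a = seed | 0;
  return function () {
    a = (a + 0x6D2B79F5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Generate count sample vectors inside the unit hemisphere around +z.
function makeHemisphereKernel(count, seed) {
  const rand = makeRng(seed);
  const kernel = [];
  for (let i = 0; i < count; i++) {
    // x, y in [-1, 1) and z in [0, 1) keep the sample on the +z side.
    const v = [rand() * 2 - 1, rand() * 2 - 1, rand()];
    const len = Math.hypot(v[0], v[1], v[2]) || 1;
    // Equivalent of mix(0.1, 1.0, t * t): cluster samples near the origin.
    const t = i / count;
    const scale = 0.1 + 0.9 * t * t;
    kernel.push(v.map(function (c) {
      return (c / len) * scale;
    }));
  }
  return kernel;
}

// sceneDepths[i]: depth-buffer value at sample i's screen position.
// sampleDepths[i]: the depth of sample i itself.
function ambientFactor(sceneDepths, sampleDepths, originDepth, radius) {
  let occlusion = 0;
  for (let i = 0; i < sceneDepths.length; i++) {
    const inRange = Math.abs(originDepth - sceneDepths[i]) < radius;
    // Geometry in front of the sample occludes it.
    if (inRange && sceneDepths[i] <= sampleDepths[i]) occlusion += 1;
  }
  return 1 - occlusion / sceneDepths.length;
}
```

The returned factor is 1 for a fully open point and approaches 0 as more samples end up behind nearby geometry.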

-* A brief description of the project and the specific features you implemented.

-* At least one screenshot of your project running.

-* A 30 second or longer video of your project running. To create the video you

- can use [Open Broadcaster Software](http://obsproject.com)

-* A performance evaluation (described in detail below).

+

-------------------------------------------------------------------------------

PERFORMANCE EVALUATION

-------------------------------------------------------------------------------

-The performance evaluation is where you will investigate how to make your

-program more efficient using the skills you've learned in class. You must have

-performed at least one experiment on your code to investigate the positive or

-negative effects on performance.

-

-We encourage you to get creative with your tweaks. Consider places in your code

-that could be considered bottlenecks and try to improve them.

-Each student should provide no more than a one page summary of their

-optimizations along with tables and or graphs to visually explain any

-performance differences.

+

--------------------------------------------------------------------------------

-THIRD PARTY CODE POLICY

--------------------------------------------------------------------------------

-* Use of any third-party code must be approved by asking on the Google groups.

- If it is approved, all students are welcome to use it. Generally, we approve

- use of third-party code that is not a core part of the project. For example,

- for the ray tracer, we would approve using a third-party library for loading

- models, but would not approve copying and pasting a CUDA function for doing

- refraction.

-* Third-party code must be credited in README.md.

-* Using third-party code without its approval, including using another

- student's code, is an academic integrity violation, and will result in you

- receiving an F for the semester.

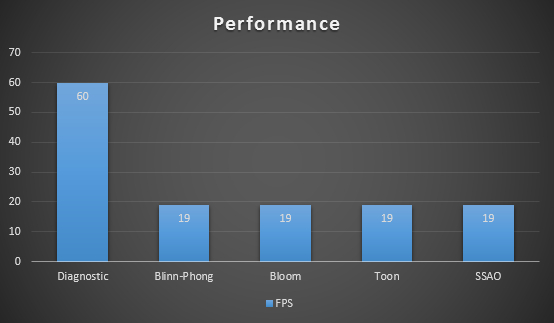

+In diagnostic mode (showing normals, positions, etc.), I just output the color read from the G-buffer without light accumulation or post-processing. From the chart above, we can see that stages 2 and 3 of deferred shading are quite computationally intensive. I think the performance is not that good because I implemented the deferred shader with a simple one-pass pipeline and my browser does not support draw buffers, so every part gets computed whether it is used or not. I think implementing separable convolution would definitely help improve performance.
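
To illustrate why separable convolution could help, here is a small JavaScript sketch that blurs a grayscale image with two 1D Gaussian passes instead of one 2D pass. It is an untested-on-GPU illustration with hypothetical function names; in a real pipeline each pass would be its own render pass with an intermediate texture, and that overhead may offset the savings for small kernels.

```javascript
// Normalized 1D Gaussian weights; size should be odd.
function gaussian1D(size, sigma) {
  const half = (size - 1) / 2;
  const weights = [];
  let sum = 0;
  for (let i = 0; i < size; i++) {
    const d = i - half;
    const weight = Math.exp(-(d * d) / (2 * sigma * sigma));
    weights.push(weight);
    sum += weight;
  }
  return weights.map(function (w) {
    return w / sum;
  });
}

// Blur a row-major grayscale image along one axis.
// (dx, dy) is (1, 0) for horizontal or (0, 1) for vertical; borders are clamped.
function blurAxis(image, width, height, weights, dx, dy) {
  const half = (weights.length - 1) / 2;
  const out = new Array(image.length).fill(0);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let acc = 0;
      for (let k = 0; k < weights.length; k++) {
        const sx = Math.min(Math.max(x + (k - half) * dx, 0), width - 1);
        const sy = Math.min(Math.max(y + (k - half) * dy, 0), height - 1);
        acc += weights[k] * image[sy * width + sx];
      }
      out[y * width + x] = acc;
    }
  }
  return out;
}

// Horizontal pass followed by vertical pass.
function blurSeparable(image, width, height, weights) {
  const horizontal = blurAxis(image, width, height, weights, 1, 0);
  return blurAxis(horizontal, width, height, weights, 0, 1);
}

// Texture reads per output pixel for each approach.
function tapsPerPixel(size) {
  return { onePass: size * size, separable: 2 * size };
}
```

For a 25-wide kernel, `tapsPerPixel(25)` reports 625 reads per pixel for the one-pass version against 50 for the separable one, which is where the expected speedup would come from.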

--------------------------------------------------------------------------------

-SELF-GRADING

--------------------------------------------------------------------------------

-* On the submission date, email your grade, on a scale of 0 to 100, to Harmony,

- harmoli+cis565@seas.upenn.edu, with a one paragraph explanation. Be concise and

- realistic. Recall that we reserve 30 points as a sanity check to adjust your

- grade. Your actual grade will be (0.7 * your grade) + (0.3 * our grade). We

- hope to only use this in extreme cases when your grade does not realistically

- reflect your work - it is either too high or too low. In most cases, we plan

- to give you the exact grade you suggest.

-* Projects are not weighted evenly, e.g., Project 0 doesn't count as much as

- the path tracer. We will determine the weighting at the end of the semester

- based on the size of each project.

+

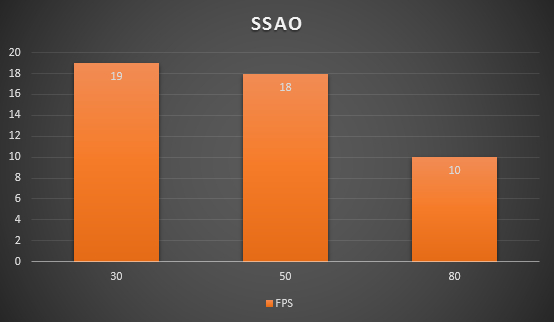

+As expected, using more kernel samples to compute SSAO slows down the computation, but the effect is not as pronounced as I expected. I wanted to test with larger kernels, but my laptop can't handle it when the kernel size exceeds 90.

---

-SUBMISSION

+Reference

---

-As with the previous projects, you should fork this project and work inside of

-your fork. Upon completion, commit your finished project back to your fork, and

-make a pull request to the master repository. You should include a README.md

-file in the root directory detailing the following

-

-* A brief description of the project and specific features you implemented

-* At least one screenshot of your project running.

-* A link to a video of your project running.

-* Instructions for building and running your project if they differ from the

- base code.

-* A performance writeup as detailed above.

-* A list of all third-party code used.

-* This Readme file edited as described above in the README section.

+BLOOM: http://http.developer.nvidia.com/GPUGems/gpugems_ch21.html

----

-ACKNOWLEDGEMENTS

----

+SSAO: http://john-chapman-graphics.blogspot.co.uk/2013/01/ssao-tutorial.html

Many thanks to Cheng-Tso Lin, whose framework for CIS700 we used for this

assignment.

diff --git a/assets/deferred/diffuse.frag b/assets/deferred/diffuse.frag

index ef0c5fc..02b245d 100644

--- a/assets/deferred/diffuse.frag

+++ b/assets/deferred/diffuse.frag

@@ -8,16 +8,42 @@ uniform sampler2D u_depthTex;

uniform float u_zFar;

uniform float u_zNear;

uniform int u_displayType;

+uniform vec4 u_Light;

varying vec2 v_texcoord;

float linearizeDepth( float exp_depth, float near, float far ){

- return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

}

void main()

{

- // Write a diffuse shader and a Blinn-Phong shader

- // NOTE : You may need to add your own normals to fulfill the second's requirements

- gl_FragColor = vec4(texture2D(u_colorTex, v_texcoord).rgb, 1.0);

+

+ // Diffuse calculation

+ vec4 lightColor = vec4(0.5, 0.5, 0.5, 1.0);

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ vec3 lightDir = normalize(u_Light.xyz - position);

+ vec3 diffuseColor = texture2D(u_colorTex, v_texcoord).rgb;

+ float diffuseTerm = clamp(abs(dot(normalize(normal), normalize(lightDir))), 0.0, 1.0);//max(dot(lightDir,normal), 0.0);

+ float specular = 0.0;

+

+

+ vec3 viewDir = normalize(-position);

+ vec3 halfDir = normalize(lightDir + viewDir);

+ float specAngle = max(dot(halfDir, normal), 0.0);

+ specular = pow(specAngle, 80.0);

+

+

+ //change background color

+ float depth = texture2D( u_depthTex, v_texcoord ).x;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+

+ if (depth > 0.99) {

+ gl_FragColor = vec4(vec3(0.0), 1.0);//vec4(vec3(u_displayType == 5 ? 0.0 : 0.0), 1.0);

+ } else {

+ gl_FragColor = vec4(diffuseTerm*diffuseColor + specular*vec3(1.0), 1.0);

+ }

+

}

diff --git a/assets/deferred/diffuse.frag.bak b/assets/deferred/diffuse.frag.bak

new file mode 100644

index 0000000..7d1f3d9

--- /dev/null

+++ b/assets/deferred/diffuse.frag.bak

@@ -0,0 +1,49 @@

+precision highp float;

+

+uniform sampler2D u_positionTex;

+uniform sampler2D u_normalTex;

+uniform sampler2D u_colorTex;

+uniform sampler2D u_depthTex;

+

+uniform float u_zFar;

+uniform float u_zNear;

+uniform int u_displayType;

+uniform vec4 u_Light;

+

+varying vec2 v_texcoord;

+

+float linearizeDepth( float exp_depth, float near, float far ){

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+}

+

+void main()

+{

+

+ // Diffuse calculation

+ vec4 lightColor = vec4(0.5, 0.5, 0.5, 1.0);

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ vec3 lightDir = normalize(u_Light.xyz - position);

+ vec3 diffuseColor = texture2D(u_colorTex, v_texcoord).rgb;

+ float diffuseTerm = clamp(abs(dot(normalize(normal), normalize(lightDir))), 0.0, 1.0);//max(dot(lightDir,normal), 0.0);

+ float specular = 0.0;

+

+

+ vec3 viewDir = normalize(-position);

+ vec3 halfDir = normalize(lightDir + viewDir);

+ float specAngle = max(dot(halfDir, normal), 0.0);

+ specular = pow(specAngle, 80.0);

+

+

+ //change background color

+ float depth = texture2D( u_depthTex, v_texcoord ).x;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+

+ if (depth > 0.99) {

+ gl_FragColor = vec4(vec3(0.0), 1.0);//vec4(vec3(u_displayType == 5 ? 0.0 : 0.0), 1.0);

+ } else {

+ //gl_FragColor = vec4(diffuseTerm*diffuseColor + specular*vec3(1.0), 1.0);

+ }

+

+}

diff --git a/assets/shader/deferred/diagnostic.frag b/assets/shader/deferred/diagnostic.frag

index d47a5e9..e3eda38 100644

--- a/assets/shader/deferred/diagnostic.frag

+++ b/assets/shader/deferred/diagnostic.frag

@@ -34,7 +34,7 @@ void main()

else if( u_displayType == DISPLAY_COLOR )

gl_FragColor = color;

else if( u_displayType == DISPLAY_NORMAL )

- gl_FragColor = vec4( normal, 1 );

+ gl_FragColor = vec4( normalize(normal), 1 );

else

gl_FragColor = vec4( position, 1 );

}

diff --git a/assets/shader/deferred/diagnostic.frag.bak b/assets/shader/deferred/diagnostic.frag.bak

new file mode 100644

index 0000000..d47a5e9

--- /dev/null

+++ b/assets/shader/deferred/diagnostic.frag.bak

@@ -0,0 +1,40 @@

+precision highp float;

+

+#define DISPLAY_POS 1

+#define DISPLAY_NORMAL 2

+#define DISPLAY_COLOR 3

+#define DISPLAY_DEPTH 4

+

+uniform sampler2D u_positionTex;

+uniform sampler2D u_normalTex;

+uniform sampler2D u_colorTex;

+uniform sampler2D u_depthTex;

+

+uniform float u_zFar;

+uniform float u_zNear;

+uniform int u_displayType;

+

+varying vec2 v_texcoord;

+

+float linearizeDepth( float exp_depth, float near, float far ){

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+}

+

+void main()

+{

+ vec3 normal = texture2D( u_normalTex, v_texcoord ).xyz;

+ vec3 position = texture2D( u_positionTex, v_texcoord ).xyz;

+ vec4 color = texture2D( u_colorTex, v_texcoord );

+ float depth = texture2D( u_depthTex, v_texcoord ).x;

+

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+

+ if( u_displayType == DISPLAY_DEPTH )

+ gl_FragColor = vec4( depth, depth, depth, 1 );

+ else if( u_displayType == DISPLAY_COLOR )

+ gl_FragColor = color;

+ else if( u_displayType == DISPLAY_NORMAL )

+ gl_FragColor = vec4( normal, 1 );

+ else

+ gl_FragColor = vec4( position, 1 );

+}

diff --git a/assets/shader/deferred/diffuse.frag b/assets/shader/deferred/diffuse.frag

index ef0c5fc..91a1c6c 100644

--- a/assets/shader/deferred/diffuse.frag

+++ b/assets/shader/deferred/diffuse.frag

@@ -8,16 +8,43 @@ uniform sampler2D u_depthTex;

uniform float u_zFar;

uniform float u_zNear;

uniform int u_displayType;

+uniform vec4 u_Light;

varying vec2 v_texcoord;

float linearizeDepth( float exp_depth, float near, float far ){

- return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

}

void main()

{

- // Write a diffuse shader and a Blinn-Phong shader

- // NOTE : You may need to add your own normals to fulfill the second's requirements

- gl_FragColor = vec4(texture2D(u_colorTex, v_texcoord).rgb, 1.0);

-}

+

+ // Diffuse calculation

+ vec4 lightColor = vec4(0.5, 0.5, 0.5, 1.0);

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ vec3 lightDir = normalize(u_Light.xyz - position);

+ vec3 diffuseColor = texture2D(u_colorTex, v_texcoord).rgb;

+ float diffuseTerm = clamp(abs(dot(normalize(normal), normalize(lightDir))), 0.0, 1.0);//max(dot(lightDir,normal), 0.0);

+ float specular = 0.0;

+

+

+ vec3 viewDir = normalize(-position);

+ vec3 halfDir = normalize(lightDir + viewDir);

+ float specAngle = max(dot(halfDir, normal), 0.0);

+ specular = pow(specAngle, 80.0);

+

+

+ //change background color

+ float depth = texture2D( u_depthTex, v_texcoord ).x;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+

+ if (depth > 0.99) {

+ gl_FragColor = vec4(vec3(u_displayType == 5 ? 0.0 : 0.8), 1.0);

+ } else {

+ gl_FragColor = vec4(diffuseTerm*diffuseColor + specular*vec3(1.0), 1.0);

+ //gl_FragColor = vec4(normal, 1.0);

+ }

+

+}

\ No newline at end of file

diff --git a/assets/shader/deferred/diffuse.frag.bak b/assets/shader/deferred/diffuse.frag.bak

new file mode 100644

index 0000000..a6b70ff

--- /dev/null

+++ b/assets/shader/deferred/diffuse.frag.bak

@@ -0,0 +1,50 @@

+precision highp float;

+

+uniform sampler2D u_positionTex;

+uniform sampler2D u_normalTex;

+uniform sampler2D u_colorTex;

+uniform sampler2D u_depthTex;

+

+uniform float u_zFar;

+uniform float u_zNear;

+uniform int u_displayType;

+uniform vec4 u_Light;

+

+varying vec2 v_texcoord;

+

+float linearizeDepth( float exp_depth, float near, float far ){

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+}

+

+void main()

+{

+

+ // Diffuse calculation

+ vec4 lightColor = vec4(0.5, 0.5, 0.5, 1.0);

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ vec3 lightDir = normalize(u_Light.xyz - position);

+ vec3 diffuseColor = texture2D(u_colorTex, v_texcoord).rgb;

+ float diffuseTerm = clamp(abs(dot(normalize(normal), normalize(lightDir))), 0.0, 1.0);//max(dot(lightDir,normal), 0.0);

+ float specular = 0.0;

+

+

+ vec3 viewDir = normalize(-position);

+ vec3 halfDir = normalize(lightDir + viewDir);

+ float specAngle = max(dot(halfDir, normal), 0.0);

+ specular = pow(specAngle, 80.0);

+

+

+ //change background color

+ float depth = texture2D( u_depthTex, v_texcoord ).x;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+

+ if (depth > 0.99) {

+ gl_FragColor = vec4(vec3(u_displayType == 5 ? 0.0 : 0.8), 1.0);

+ } else {

+ //gl_FragColor = vec4(diffuseTerm*diffuseColor + specular*vec3(1.0), 1.0);

+ gl_FragColor = vec4(normal, 1.0);

+ }

+

+}

\ No newline at end of file

diff --git a/assets/shader/deferred/normPass.frag b/assets/shader/deferred/normPass.frag

index b41d6ed..5df3879 100644

--- a/assets/shader/deferred/normPass.frag

+++ b/assets/shader/deferred/normPass.frag

@@ -3,5 +3,5 @@ precision highp float;

varying vec3 v_normal;

void main(void){

- gl_FragColor = vec4(v_normal, 1.0);

+ gl_FragColor = vec4(normalize(v_normal), 1.0);

}

diff --git a/assets/shader/deferred/normPass.frag.bak b/assets/shader/deferred/normPass.frag.bak

new file mode 100644

index 0000000..b41d6ed

--- /dev/null

+++ b/assets/shader/deferred/normPass.frag.bak

@@ -0,0 +1,7 @@

+precision highp float;

+

+varying vec3 v_normal;

+

+void main(void){

+ gl_FragColor = vec4(v_normal, 1.0);

+}

diff --git a/assets/shader/deferred/post.frag b/assets/shader/deferred/post.frag

index 52edda2..0739e7c 100644

--- a/assets/shader/deferred/post.frag

+++ b/assets/shader/deferred/post.frag

@@ -1,17 +1,166 @@

precision highp float;

uniform sampler2D u_shadeTex;

+uniform sampler2D u_colorTex;

+uniform sampler2D u_positionTex;

+uniform sampler2D u_normalTex;

+uniform sampler2D u_depthTex;

+

+uniform float u_zFar;

+uniform float u_zNear;

+

+uniform int u_width;

+uniform int u_height;

+uniform int u_displayType;

+uniform vec3 u_kernel[100];

+

varying vec2 v_texcoord;

+#define SAMPLEKERNEL_SIZE 60

+#define KERNEL_SIZE 25 // has to be an odd number

+#define DISPLAY_TOON 7

+#define DISPLAY_BLOOM 6

+#define DISPLAY_BLINN 5

+#define DISPLAY_SSAO 8

+#define DISPLAY_DOF 9

+float w = 1.0 / float(u_width);

+float h = 1.0 / float(u_height);

float linearizeDepth( float exp_depth, float near, float far ){

- return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+}

+float hash( float n ){ //Borrowed from voltage

+ return fract(sin(n)*43758.5453);

+}

+float rand(float co){

+ return fract(sin(dot(vec2(co,co) ,vec2(12.9898,78.233))) * 43758.5453);

+}

+float gaussian2d(int x,int y,int n) {

+ float sigma = 2.0;

+ float fx = float(x) - (float(n) - 1.0) / 2.0;

+ float fy = float(y) - (float(n) - 1.0) / 2.0;

+ return exp(-(fx*fx + fy*fy) / (2.0*sigma*sigma)) / (2.0 * 3.1415926 * sigma*sigma); // 2D Gaussian: the exponent uses the squared distance from the kernel center

+}

+

+vec4 bloomShader() {

+

+ float width = (float(KERNEL_SIZE) - 1.0) / 2.0;

+ vec3 color = vec3(0.0,0.0,0.0);

+ for(int i=0; i< KERNEL_SIZE; i++){

+ for(int j=0; j< KERNEL_SIZE; j++){

+ vec2 tc = v_texcoord;

+ tc.y += (float(i)-width)*h;

+ tc.x += (float(j)-width)*w;

+ color += gaussian2d(i,j,KERNEL_SIZE) * texture2D(u_shadeTex, tc).rgb;

+ }

+ }

+

+ return vec4(color,1.0);

+}

+// Quantizes f into discrete steps for the toon color palette
+
+float round(float f, int num) {

+ return floor(float(num+1)*f)/float(num);

+}

+//This function blurs the depth buffer and subtracts it from the original (a high pass filter)

+float detectEdge() {

+ float result = linearizeDepth(texture2D(u_depthTex, v_texcoord).x, u_zNear, u_zFar);

+ for (int i = -4; i <= 4; i++) {

+ for (int j = -4; j <= 4; j++) {

+ result -= linearizeDepth(texture2D(u_depthTex, v_texcoord + vec2(w*float(i), h*float(j))).x, u_zNear, u_zFar)/(81.0);

+ }

+ }

+ return result;

+}

+vec4 toonShader(vec3 color, int numColors) {

+ // Flatten the color

+ vec3 p_color = vec3(round(color.r, numColors), round(color.g, numColors), round(color.b, numColors));

+ // Sharpen the edges

+ return abs(detectEdge()) > 0.005 ? vec4(vec3(0.0), 1.0) : vec4(p_color, 1.0);

}

+vec4 SSAO(vec3 color) {

+ float radius = 0.1;

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ float depth = texture2D(u_depthTex, v_texcoord).r;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+ float occlusion = 0.0;

+ vec3 origin = vec3(position.x, position.y, depth);

+

+ for(int i = 0; i < SAMPLEKERNEL_SIZE; ++i){

+

+ vec3 rvec = normalize(u_kernel[i]);

+ vec3 tangent = normalize(rvec - normal * dot(rvec, normal));

+ vec3 bitangent = cross(normal, tangent);

+ mat3 tbn = mat3(tangent, bitangent, normal);

+ vec3 kernelv = vec3(rand(position.x),rand(position.y),(rand(position.z)+1.0) / 2.0);

+ kernelv = normalize(kernelv);

+ float scale = float(i) / float(SAMPLEKERNEL_SIZE);

+ scale = mix(0.1, 1.0, scale * scale);

+ kernelv = kernelv * scale ;

+

+ vec3 sample = tbn * kernelv;

+ float sampleDepth = texture2D(u_depthTex, v_texcoord + vec2(sample.x,sample.y)* radius).r;

+ sampleDepth = linearizeDepth( sampleDepth, u_zNear, u_zFar );

+

+ float samplez = origin.z - (sample * radius).z / 2.0;

+

+ //rangeCheck helps to prevent erroneous occlusion between large depth discontinuities:

+ float rangeCheck = abs(origin.z - sampleDepth) < radius ? 1.0 : 0.0;

+

+ if(sampleDepth <= samplez)

+ occlusion += 1.0 * rangeCheck;

+ }

+

+ occlusion = 1.0 - (occlusion / float(SAMPLEKERNEL_SIZE));

+

+ return vec4(vec3(occlusion), 1.0);

+}

+

+vec4 Blur(vec3 color) {

+ float depth = texture2D(u_depthTex, v_texcoord).r;

+ depth = 1.0 -linearizeDepth( depth, u_zNear, u_zFar );

+ if(depth < 0.99){

+ vec2 texelSize = vec2(w, h); // w and h already hold 1/width and 1/height

+ vec3 result = vec3(0.0,0.0,0.0);

+

+ const int uBlurSize = 5;

+

+ vec2 hlim = vec2(float(-uBlurSize) * 0.5 + 0.5);

+ for (int i = 0; i < uBlurSize; ++i) {

+ if(true) {

+ for (int j = 0; j < uBlurSize; ++j) {

+ if(true){

+ vec2 offset = (hlim + vec2(float(i), float(j))) * texelSize;

+ result += texture2D(u_shadeTex, v_texcoord + offset).rgb;

+ }

+ }

+ }

+

+ }

+

+ vec4 fResult = vec4(result / float(uBlurSize * uBlurSize),1.0);

+ return fResult;

+ }

+ else{

+ return vec4(color, 1.0);

+ }

+

+}

void main()

{

 // Currently acts as a pass filter that immediately renders the shaded texture

// Fill in post-processing as necessary HERE

// NOTE : You may choose to use a key-controlled switch system to display one feature at a time

- gl_FragColor = vec4(texture2D( u_shadeTex, v_texcoord).rgb, 1.0);

-}

+ vec3 color = texture2D( u_shadeTex, v_texcoord).rgb;

+ if(u_displayType == DISPLAY_BLINN)

+ gl_FragColor = vec4(color, 1.0);

+ if(u_displayType == DISPLAY_BLOOM)

+ gl_FragColor = bloomShader();

+ if(u_displayType == DISPLAY_TOON)

+ gl_FragColor = toonShader(color, 3);

+ if(u_displayType == DISPLAY_SSAO)

+ gl_FragColor = SSAO(color);

+ if(u_displayType == DISPLAY_DOF)

+ gl_FragColor = Blur(color);

+

+}

\ No newline at end of file

diff --git a/assets/shader/deferred/post.frag.bak b/assets/shader/deferred/post.frag.bak

new file mode 100644

index 0000000..d2dd90d

--- /dev/null

+++ b/assets/shader/deferred/post.frag.bak

@@ -0,0 +1,166 @@

+precision highp float;

+

+uniform sampler2D u_shadeTex;

+uniform sampler2D u_colorTex;

+uniform sampler2D u_positionTex;

+uniform sampler2D u_normalTex;

+uniform sampler2D u_depthTex;

+

+uniform float u_zFar;

+uniform float u_zNear;

+

+uniform int u_width;

+uniform int u_height;

+uniform int u_displayType;

+uniform vec3 u_kernel[100];

+

+

+varying vec2 v_texcoord;

+#define SAMPLEKERNEL_SIZE 50

+#define KERNEL_SIZE 25 // has to be an odd number

+#define DISPLAY_TOON 7

+#define DISPLAY_BLOOM 6

+#define DISPLAY_BLINN 5

+#define DISPLAY_SSAO 8

+#define DISPLAY_DOF 9

+

+float w = 1.0 / float(u_width);

+float h = 1.0 / float(u_height);

+float linearizeDepth( float exp_depth, float near, float far ){

+ return ( 2.0 * near ) / ( far + near - exp_depth * ( far - near ) );

+}

+float hash( float n ){ //Borrowed from voltage

+ return fract(sin(n)*43758.5453);

+}

+float rand(float co){

+ return fract(sin(dot(vec2(co,co) ,vec2(12.9898,78.233))) * 43758.5453);

+}

+float gaussian2d(int x,int y,int n) {

+ float sigma = 2.0;

+ float fx = float(x) - (float(n) - 1.0) / 2.0;

+ float fy = float(y) - (float(n) - 1.0) / 2.0;

+ return exp(-(fx*fx + fy*fy) / (2.0*sigma*sigma)) / (2.0 * 3.1415926 * sigma*sigma); // 2D Gaussian: the exponent uses the squared distance from the kernel center

+}

+

+vec4 bloomShader() {

+

+ float width = (float(KERNEL_SIZE) - 1.0) / 2.0;

+ vec3 color = vec3(0.0,0.0,0.0);

+ for(int i=0; i< KERNEL_SIZE; i++){

+ for(int j=0; j< KERNEL_SIZE; j++){

+ vec2 tc = v_texcoord;

+ tc.y += (float(i)-width)*h;

+ tc.x += (float(j)-width)*w;

+ color += gaussian2d(i,j,KERNEL_SIZE) * texture2D(u_shadeTex, tc).rgb;

+ }

+ }

+

+ return vec4(color,1.0);

+}

+// Quantizes f into discrete steps for the toon color palette
+
+float round(float f, int num) {

+ return floor(float(num+1)*f)/float(num);

+}

+//This function blurs the depth buffer and subtracts it from the original (a high pass filter)

+float detectEdge() {

+ float result = linearizeDepth(texture2D(u_depthTex, v_texcoord).x, u_zNear, u_zFar);

+ for (int i = -4; i <= 4; i++) {

+ for (int j = -4; j <= 4; j++) {

+ result -= linearizeDepth(texture2D(u_depthTex, v_texcoord + vec2(w*float(i), h*float(j))).x, u_zNear, u_zFar)/(81.0);

+ }

+ }

+ return result;

+}

+vec4 toonShader(vec3 color, int numColors) {

+ // Flatten the color

+ vec3 p_color = vec3(round(color.r, numColors), round(color.g, numColors), round(color.b, numColors));

+ // Sharpen the edges

+ return abs(detectEdge()) > 0.005 ? vec4(vec3(0.0), 1.0) : vec4(p_color, 1.0);

+}

+vec4 SSAO(vec3 color) {

+ float radius = 0.1;

+ vec3 normal = texture2D(u_normalTex, v_texcoord).xyz;

+ vec3 position = texture2D(u_positionTex, v_texcoord).xyz;

+ float depth = texture2D(u_depthTex, v_texcoord).r;

+ depth = linearizeDepth( depth, u_zNear, u_zFar );

+ float occlusion = 0.0;

+ vec3 origin = vec3(position.x, position.y, depth);

+

+ for(int i = 0; i < SAMPLEKERNEL_SIZE; ++i){

+

+ vec3 rvec = normalize(u_kernel[i]);

+ vec3 tangent = normalize(rvec - normal * dot(rvec, normal));

+ vec3 bitangent = cross(normal, tangent);

+ mat3 tbn = mat3(tangent, bitangent, normal);

+

+ vec3 kernelv = vec3(rand(position.x),rand(position.y),(rand(position.z)+1.0) / 2.0);

+ kernelv = normalize(kernelv);

+ float scale = float(i) / float(SAMPLEKERNEL_SIZE);

+ scale = mix(0.1, 1.0, scale * scale);

+ kernelv = kernelv * scale ;

+

+ vec3 sample = tbn * kernelv;

+ float sampleDepth = texture2D(u_depthTex, v_texcoord + vec2(sample.x,sample.y)* radius).r;

+ sampleDepth = linearizeDepth( sampleDepth, u_zNear, u_zFar );

+

+ float samplez = origin.z - (sample * radius).z / 2.0;

+

+ //rangeCheck helps to prevent erroneous occlusion between large depth discontinuities:

+ float rangeCheck = abs(origin.z - sampleDepth) < radius ? 1.0 : 0.0;

+

+ if(sampleDepth <= samplez)

+ occlusion += 1.0 * rangeCheck;

+ }

+

+ occlusion = 1.0 - (occlusion / float(SAMPLEKERNEL_SIZE));

+

+ return vec4(vec3(occlusion), 1.0);

+}

+

+vec4 Blur(vec3 color) {

+ float depth = texture2D(u_depthTex, v_texcoord).r;

+ depth = 1.0 -linearizeDepth( depth, u_zNear, u_zFar );

+ if(depth < 0.99){

+ vec2 texelSize = vec2(w, h); // w and h already hold 1/width and 1/height

+ vec3 result = vec3(0.0,0.0,0.0);

+

+ const int uBlurSize = 5;

+

+ vec2 hlim = vec2(float(-uBlurSize) * 0.5 + 0.5);

+ for (int i = 0; i < uBlurSize; ++i) {

+ if(true) {

+ for (int j = 0; j < uBlurSize; ++j) {

+ if(true){

+ vec2 offset = (hlim + vec2(float(i), float(j))) * texelSize;

+ result += texture2D(u_shadeTex, v_texcoord + offset).rgb;

+ }

+ }

+ }

+

+ }

+

+ vec4 fResult = vec4(result / float(uBlurSize * uBlurSize),1.0);

+ return fResult;

+ }

+ else{

+ return vec4(color, 1.0);

+ }

+

+}

+void main()

+{

+ // Currently acts as a pass filter that immediately renders the shaded texture

+ // Fill in post-processing as necessary HERE

+ // NOTE : You may choose to use a key-controlled switch system to display one feature at a time

+ vec3 color = texture2D( u_shadeTex, v_texcoord).rgb;

+ if(u_displayType == DISPLAY_BLINN)

+ gl_FragColor = vec4(color, 1.0);

+ if(u_displayType == DISPLAY_BLOOM)

+ gl_FragColor = bloomShader();

+ if(u_displayType == DISPLAY_TOON)

+ gl_FragColor = toonShader(color, 3);

+ if(u_displayType == DISPLAY_SSAO)

+ gl_FragColor = SSAO(color);

+ if(u_displayType == DISPLAY_DOF)

+ gl_FragColor = Blur(color);

+

+}

\ No newline at end of file
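
Review note: the `Blur()` pass above averages a 5x5 neighborhood centered on the current texel (`hlim` works out to -2 for `uBlurSize = 5`). A minimal CPU sketch of the same arithmetic, assuming a hypothetical grayscale `image` array in place of `u_shadeTex` and edge clamping like the FBO textures' `CLAMP_TO_EDGE` setting, may help when checking the offsets:

```javascript
// CPU reference for the box blur in Blur(). `image` is a hypothetical 2D array
// (rows of grayscale values) standing in for u_shadeTex; it is not part of the patch.
function boxBlurAt(image, x, y, blurSize) {
  var height = image.length;
  var width = image[0].length;
  // Same centering as the shader: hlim = -blurSize * 0.5 + 0.5 (-2 for size 5).
  var hlim = -blurSize * 0.5 + 0.5;
  var sum = 0;
  for (var i = 0; i < blurSize; ++i) {
    for (var j = 0; j < blurSize; ++j) {
      // Clamp sample coordinates to the image, mirroring CLAMP_TO_EDGE wrapping.
      var sx = Math.min(width - 1, Math.max(0, Math.round(x + hlim + i)));
      var sy = Math.min(height - 1, Math.max(0, Math.round(y + hlim + j)));
      sum += image[sy][sx];
    }
  }
  // Divide by the number of taps, as the shader does with uBlurSize * uBlurSize.
  return sum / (blurSize * blurSize);
}
```

With an odd `blurSize` the window is symmetric around the texel; an even size would shift the window by half a texel.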

diff --git a/index.html b/index.html

index dd0ffef..dc8b9b7 100644

--- a/index.html

+++ b/index.html

@@ -12,9 +12,8 @@

-

-

+

+

diff --git a/index.html.bak b/index.html.bak

new file mode 100644

index 0000000..7697d31

--- /dev/null

+++ b/index.html.bak

@@ -0,0 +1,32 @@

+

+

+

+ CIS 565 WebGL Deferred Shader

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/js/core/fbo-util.js b/js/core/fbo-util.js

index 42abe4c..e377550 100644

--- a/js/core/fbo-util.js

+++ b/js/core/fbo-util.js

@@ -125,6 +125,7 @@ CIS565WEBGLCORE.createFBO = function(){

// Set up GBuffer Normal

fbo[FBO_GBUFFER_NORMAL] = gl.createFramebuffer();

gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_GBUFFER_NORMAL]);

+ //gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);//add

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[1], 0);

FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

@@ -138,6 +139,7 @@ CIS565WEBGLCORE.createFBO = function(){

// Set up GBuffer Color

fbo[FBO_GBUFFER_COLOR] = gl.createFramebuffer();

gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_GBUFFER_COLOR]);

+ //gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);//add

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[2], 0);

FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

@@ -195,5 +197,4 @@ CIS565WEBGLCORE.createFBO = function(){

}

}

};

-};

-

+};

\ No newline at end of file

diff --git a/js/core/fbo-util.js.bak b/js/core/fbo-util.js.bak

new file mode 100644

index 0000000..01acbe7

--- /dev/null

+++ b/js/core/fbo-util.js.bak

@@ -0,0 +1,200 @@

+or creating framebuffer objects

+

+//CIS565WEBGLCORE is a core function interface

+var CIS565WEBGLCORE = CIS565WEBGLCORE || {};

+

+var FBO_GBUFFER = 0;

+var FBO_PBUFFER = 10;

+var FBO_GBUFFER_POSITION = 0;

+var FBO_GBUFFER_NORMAL = 1;

+var FBO_GBUFFER_COLOR = 2;

+var FBO_GBUFFER_DEPTH = 3;

+var FBO_GBUFFER_TEXCOORD = 4;

+

+CIS565WEBGLCORE.createFBO = function(){

+ "use strict"

+

+ var textures = [];

+ var depthTex = null;

+ var fbo = [];

+

+ var multipleTargets = true;

+

+ function init( gl, width, height ){

+ gl.getExtension( "OES_texture_float" );

+ gl.getExtension( "OES_texture_float_linear" );

+ var extDrawBuffers = gl.getExtension( "WEBGL_draw_buffers");

+ var extDepthTex = gl.getExtension( "WEBGL_depth_texture" );

+

+ if( !extDepthTex ) {

+ alert("WARNING : Depth texture extension unavailable on your browser!");

+ return false;

+ }

+

+ if ( !extDrawBuffers ){

+ alert("WARNING : Draw buffer extension unavailable on your browser! Defaulting to multiple render passes.");

+ multipleTargets = false;

+ }

+

+ //Create depth texture

+ depthTex = gl.createTexture();

+ gl.bindTexture( gl.TEXTURE_2D, depthTex );

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

+ gl.texImage2D(gl.TEXTURE_2D, 0, gl.DEPTH_COMPONENT, width, height, 0, gl.DEPTH_COMPONENT, gl.UNSIGNED_SHORT, null);

+

+ // Create textures for FBO attachment

+ for( var i = 0; i < 5; ++i ){

+ textures[i] = gl.createTexture()

+ gl.bindTexture( gl.TEXTURE_2D, textures[i] );

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

+ gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

+ gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0, gl.RGBA, gl.FLOAT, null);

+ }

+

+ // Create GBuffer FBO

+ if (multipleTargets) {

+ fbo[FBO_GBUFFER] = gl.createFramebuffer();

+ gl.bindFramebuffer( gl.FRAMEBUFFER, fbo[FBO_GBUFFER] );

+

+ // Create render target;

+ var drawbuffers = [];

+ drawbuffers[0] = extDrawBuffers.COLOR_ATTACHMENT0_WEBGL;

+ drawbuffers[1] = extDrawBuffers.COLOR_ATTACHMENT1_WEBGL;

+ drawbuffers[2] = extDrawBuffers.COLOR_ATTACHMENT2_WEBGL;

+ drawbuffers[3] = extDrawBuffers.COLOR_ATTACHMENT3_WEBGL;

+ extDrawBuffers.drawBuffersWEBGL( drawbuffers );

+

+ //Attach textures to FBO

+ gl.framebufferTexture2D( gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0 );

+ gl.framebufferTexture2D( gl.FRAMEBUFFER, drawbuffers[0], gl.TEXTURE_2D, textures[0], 0 );

+ gl.framebufferTexture2D( gl.FRAMEBUFFER, drawbuffers[1], gl.TEXTURE_2D, textures[1], 0 );

+ gl.framebufferTexture2D( gl.FRAMEBUFFER, drawbuffers[2], gl.TEXTURE_2D, textures[2], 0 );

+ gl.framebufferTexture2D( gl.FRAMEBUFFER, drawbuffers[3], gl.TEXTURE_2D, textures[3], 0 );

+

+ var FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if( FBOstatus !== gl.FRAMEBUFFER_COMPLETE ){

+ console.log( "GBuffer FBO incomplete! Initialization failed!" );

+ return false;

+ }

+

+ // Create PBuffer FBO

+ fbo[FBO_PBUFFER] = gl.createFramebuffer();

+ gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_PBUFFER]);

+

+ // Attach textures

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[4], 0);

+

+ FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if(FBOstatus !== gl.FRAMEBUFFER_COMPLETE) {

+ console.log("PBuffer FBO incomplete! Initialization failed!");

+ return false;

+ }

+ } else {

+ fbo[FBO_GBUFFER_POSITION] = gl.createFramebuffer();

+

+ // Set up GBuffer Position

+ gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_GBUFFER_POSITION]);

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[0], 0);

+

+ var FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if (FBOstatus !== gl.FRAMEBUFFER_COMPLETE) {

+ console.log("GBuffer Position FBO incomplete! Init failed!");

+ return false;

+ }

+

+ gl.bindFramebuffer(gl.FRAMEBUFFER, null);

+

+ fbo[FBO_PBUFFER] = gl.createFramebuffer();

+ gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_PBUFFER]);

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[4], 0);

+

+ FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if (FBOstatus !== gl.FRAMEBUFFER_COMPLETE) {

+ console.log("PBuffer FBO incomplete! Init failed!");

+ return false;

+ }

+

+ gl.bindFramebuffer(gl.FRAMEBUFFER, null);

+

+ // Set up GBuffer Normal

+ fbo[FBO_GBUFFER_NORMAL] = gl.createFramebuffer();

+ gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_GBUFFER_NORMAL]);

+ //gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);//add

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[1], 0);

+

+ FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if (FBOstatus !== gl.FRAMEBUFFER_COMPLETE) {

+ console.log("GBuffer Normal FBO incomplete! Init failed!");

+ return false;

+ }

+

+ gl.bindFramebuffer(gl.FRAMEBUFFER, null);

+

+ // Set up GBuffer Color

+ fbo[FBO_GBUFFER_COLOR] = gl.createFramebuffer();

+ gl.bindFramebuffer(gl.FRAMEBUFFER, fbo[FBO_GBUFFER_COLOR]);

+ //gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);//add

+ gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, textures[2], 0);

+

+ FBOstatus = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

+ if (FBOstatus !== gl.FRAMEBUFFER_COMPLETE) {

+ console.log("GBuffer Color FBO incomplete! Init failed!");

+ return false;

+ }

+ }

+

+ gl.bindFramebuffer( gl.FRAMEBUFFER, null );

+ gl.bindTexture( gl.TEXTURE_2D, null );

+

+ return true;

+ }

+

+ return {

+ ref: function(buffer){

+ return fbo[buffer];

+ },

+ initialize: function( gl, width, height ){

+ return init( gl, width, height );

+ },

+ bind: function(gl, buffer){

+ gl.bindFramebuffer( gl.FRAMEBUFFER, fbo[buffer] );

+ },

+ unbind: function(gl){

+ gl.bindFramebuffer( gl.FRAMEBUFFER, null );

+ },

+ texture: function(i){

+ return textures[i];

+ },

+ depthTexture: function(){

+ return depthTex;

+ },

+ isMultipleTargets: function(){

+ return multipleTargets;

+ },

+ ///// The following 3 functions should be implemented for all objects

+ ///// whose resources are retrieved asynchronously

+ isReady: function(){

+ var isReady = ready;

+ for( var i = 0; i < textures.length; ++i ){

+ isReady &= textures[i].ready;

+ }

+ console.log( isReady );

+ return isReady;

+ },

+ addCallback: function( functor ){

+ callbackFunArray[callbackFunArray.length] = functor;

+ },

+ executeCallBackFunc: function(){

+ var i;

+ for( i = 0; i < callbackFunArray.length; ++i ){

+ callbackFunArray[i]();

+ }

+ }

+ };

+};

\ No newline at end of file
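
Review note: the fallback path in `createFBO` repeats the same create / bind / attach / check sequence for each G-buffer target. One way this could be factored, sketched here with a hypothetical helper name (`createColorFBO` does not exist in the patch) and only standard WebGL calls:

```javascript
// Hypothetical helper: create a framebuffer, attach one color texture and an
// optional depth texture, and return the framebuffer only if it is complete.
function createColorFBO(gl, colorTex, depthTex) {
  var fb = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, fb);
  if (depthTex) {
    // Sharing one depth texture lets later passes depth-test against earlier ones.
    gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTex, 0);
  }
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, colorTex, 0);
  var complete = gl.checkFramebufferStatus(gl.FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;
  // Always restore the default framebuffer before returning.
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  return complete ? fb : null;
}
```

A call such as `fbo[FBO_GBUFFER_NORMAL] = createColorFBO(gl, textures[1], null);` would then replace one of the repeated blocks, with a `null` result taking the place of the `return false` branch.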

diff --git a/js/ext/Stats.js b/js/ext/Stats.js

new file mode 100644

index 0000000..90b2a27

--- /dev/null

+++ b/js/ext/Stats.js

@@ -0,0 +1,149 @@

+/**

+ * @author mrdoob / http://mrdoob.com/

+ */

+

+var Stats = function () {

+

+ var startTime = Date.now(), prevTime = startTime;

+ var ms = 0, msMin = Infinity, msMax = 0;

+ var fps = 0, fpsMin = Infinity, fpsMax = 0;

+ var frames = 0, mode = 0;

+

+ var container = document.createElement( 'div' );

+ container.id = 'stats';

+ container.addEventListener( 'mousedown', function ( event ) { event.preventDefault(); setMode( ++ mode % 2 ) }, false );

+ container.style.cssText = 'width:80px;opacity:0.9;cursor:pointer';

+

+ var fpsDiv = document.createElement( 'div' );

+ fpsDiv.id = 'fps';

+ fpsDiv.style.cssText = 'padding:0 0 3px 3px;text-align:left;background-color:#002';

+ container.appendChild( fpsDiv );

+

+ var fpsText = document.createElement( 'div' );

+ fpsText.id = 'fpsText';

+ fpsText.style.cssText = 'color:#0ff;font-family:Helvetica,Arial,sans-serif;font-size:9px;font-weight:bold;line-height:15px';

+ fpsText.innerHTML = 'FPS';

+ fpsDiv.appendChild( fpsText );

+

+ var fpsGraph = document.createElement( 'div' );

+ fpsGraph.id = 'fpsGraph';

+ fpsGraph.style.cssText = 'position:relative;width:74px;height:30px;background-color:#0ff';

+ fpsDiv.appendChild( fpsGraph );

+

+ while ( fpsGraph.children.length < 74 ) {

+

+ var bar = document.createElement( 'span' );

+ bar.style.cssText = 'width:1px;height:30px;float:left;background-color:#113';

+ fpsGraph.appendChild( bar );

+

+ }

+

+ var msDiv = document.createElement( 'div' );

+ msDiv.id = 'ms';

+ msDiv.style.cssText = 'padding:0 0 3px 3px;text-align:left;background-color:#020;display:none';

+ container.appendChild( msDiv );

+

+ var msText = document.createElement( 'div' );

+ msText.id = 'msText';

+ msText.style.cssText = 'color:#0f0;font-family:Helvetica,Arial,sans-serif;font-size:9px;font-weight:bold;line-height:15px';

+ msText.innerHTML = 'MS';

+ msDiv.appendChild( msText );

+

+ var msGraph = document.createElement( 'div' );

+ msGraph.id = 'msGraph';

+ msGraph.style.cssText = 'position:relative;width:74px;height:30px;background-color:#0f0';

+ msDiv.appendChild( msGraph );

+

+ while ( msGraph.children.length < 74 ) {

+

+ var bar = document.createElement( 'span' );

+ bar.style.cssText = 'width:1px;height:30px;float:left;background-color:#131';

+ msGraph.appendChild( bar );

+

+ }

+

+ var setMode = function ( value ) {

+

+ mode = value;

+

+ switch ( mode ) {

+

+ case 0:

+ fpsDiv.style.display = 'block';

+ msDiv.style.display = 'none';

+ break;

+ case 1:

+ fpsDiv.style.display = 'none';

+ msDiv.style.display = 'block';

+ break;

+ }

+

+ };

+

+ var updateGraph = function ( dom, value ) {

+

+ var child = dom.appendChild( dom.firstChild );

+ child.style.height = value + 'px';

+

+ };

+

+ return {

+

+ REVISION: 12,

+

+ domElement: container,

+

+ setMode: setMode,

+

+ begin: function () {

+

+ startTime = Date.now();

+

+ },

+

+ end: function () {

+

+ var time = Date.now();

+

+ ms = time - startTime;

+ msMin = Math.min( msMin, ms );

+ msMax = Math.max( msMax, ms );

+

+ msText.textContent = ms + ' MS (' + msMin + '-' + msMax + ')';

+ updateGraph( msGraph, Math.min( 30, 30 - ( ms / 200 ) * 30 ) );

+

+ frames ++;

+

+ if ( time > prevTime + 1000 ) {

+

+ fps = Math.round( ( frames * 1000 ) / ( time - prevTime ) );

+ fpsMin = Math.min( fpsMin, fps );

+ fpsMax = Math.max( fpsMax, fps );

+

+ fpsText.textContent = fps + ' FPS (' + fpsMin + '-' + fpsMax + ')';

+ updateGraph( fpsGraph, Math.min( 30, 30 - ( fps / 100 ) * 30 ) );

+

+ prevTime = time;

+ frames = 0;

+

+ }

+

+ return time;

+

+ },

+

+ update: function () {

+

+ startTime = this.end();

+

+ }

+

+ }

+

+};

+

+if ( typeof module === 'object' ) {

+

+ module.exports = Stats;

+

+}

\ No newline at end of file
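
Review note: the FPS figure shown by `Stats` comes from counting frames and recomputing once more than a second has elapsed. A DOM-free sketch of that bookkeeping, using a hypothetical `createFpsCounter` name and caller-supplied timestamps instead of `Date.now()`, is:

```javascript
// Hypothetical, DOM-free version of the frame counting done in Stats.end().
// Timestamps are passed in (milliseconds) so the logic can be checked in isolation.
function createFpsCounter(startTime) {
  var prevTime = startTime;
  var frames = 0;
  var fps = 0;
  return {
    tick: function (time) {
      frames++;
      // Recompute only after more than one second, as Stats.js does.
      if (time > prevTime + 1000) {
        fps = Math.round((frames * 1000) / (time - prevTime));
        prevTime = time;
        frames = 0;
      }
      return fps;
    }
  };
}
```

Because the value only refreshes about once per second, short stalls between refreshes are averaged out rather than shown directly.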

diff --git a/js/ext/stats.min.js b/js/ext/stats.min.js

new file mode 100644

index 0000000..52539f4

--- /dev/null

+++ b/js/ext/stats.min.js

@@ -0,0 +1,6 @@

+// stats.js - http://github.com/mrdoob/stats.js

+var Stats=function(){var l=Date.now(),m=l,g=0,n=Infinity,o=0,h=0,p=Infinity,q=0,r=0,s=0,f=document.createElement("div");f.id="stats";f.addEventListener("mousedown",function(b){b.preventDefault();t(++s%2)},!1);f.style.cssText="width:80px;opacity:0.9;cursor:pointer";var a=document.createElement("div");a.id="fps";a.style.cssText="padding:0 0 3px 3px;text-align:left;background-color:#002";f.appendChild(a);var i=document.createElement("div");i.id="fpsText";i.style.cssText="color:#0ff;font-family:Helvetica,Arial,sans-serif;font-size:9px;font-weight:bold;line-height:15px";

+i.innerHTML="FPS";a.appendChild(i);var c=document.createElement("div");c.id="fpsGraph";c.style.cssText="position:relative;width:74px;height:30px;background-color:#0ff";for(a.appendChild(c);74>c.children.length;){var j=document.createElement("span");j.style.cssText="width:1px;height:30px;float:left;background-color:#113";c.appendChild(j)}var d=document.createElement("div");d.id="ms";d.style.cssText="padding:0 0 3px 3px;text-align:left;background-color:#020;display:none";f.appendChild(d);var k=document.createElement("div");

+k.id="msText";k.style.cssText="color:#0f0;font-family:Helvetica,Arial,sans-serif;font-size:9px;font-weight:bold;line-height:15px";k.innerHTML="MS";d.appendChild(k);var e=document.createElement("div");e.id="msGraph";e.style.cssText="position:relative;width:74px;height:30px;background-color:#0f0";for(d.appendChild(e);74>e.children.length;)j=document.createElement("span"),j.style.cssText="width:1px;height:30px;float:left;background-color:#131",e.appendChild(j);var t=function(b){s=b;switch(s){case 0:a.style.display=

+"block";d.style.display="none";break;case 1:a.style.display="none",d.style.display="block"}};return{REVISION:12,domElement:f,setMode:t,begin:function(){l=Date.now()},end:function(){var b=Date.now();g=b-l;n=Math.min(n,g);o=Math.max(o,g);k.textContent=g+" MS ("+n+"-"+o+")";var a=Math.min(30,30-30*(g/200));e.appendChild(e.firstChild).style.height=a+"px";r++;b>m+1E3&&(h=Math.round(1E3*r/(b-m)),p=Math.min(p,h),q=Math.max(q,h),i.textContent=h+" FPS ("+p+"-"+q+")",a=Math.min(30,30-30*(h/100)),c.appendChild(c.firstChild).style.height=

+a+"px",m=b,r=0);return b},update:function(){l=this.end()}}};"object"===typeof module&&(module.exports=Stats);

diff --git a/js/main.js b/js/main.js

index 4140ae1..de1b997 100644

--- a/js/main.js

+++ b/js/main.js

@@ -13,6 +13,9 @@ var objloader; // OBJ loader

var model; // Model object

var quad = {}; // Empty object for full-screen quad

+//for SSAO

+var kernel = []; // Random samples for SSAO to use

+var kernelSize = 100;

// Framebuffer

var fbo = null;

@@ -31,10 +34,10 @@ var isDiagnostic = true;

var zNear = 20;

var zFar = 2000;

var texToDisplay = 1;

-

+var stats;

var main = function (canvasId, messageId) {

var canvas;

-

+ stats = initStats();

// Initialize WebGL

initGL(canvasId, messageId);

@@ -49,7 +52,7 @@ var main = function (canvasId, messageId) {

// Set up shaders

initShaders();

-

+ initKernel();

// Register our render callbacks

CIS565WEBGLCORE.render = render;

CIS565WEBGLCORE.renderLoop = renderLoop;

@@ -64,6 +67,8 @@ var renderLoop = function () {

};

var render = function () {

+ if (stats)

+ stats.update();

if (fbo.isMultipleTargets()) {

renderPass();

} else {

@@ -157,6 +162,7 @@ var renderMulti = function () {

fbo.bind(gl, FBO_GBUFFER_POSITION);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

+ //gl.clear(gl.COLOR_BUFFER_BIT);//add

gl.enable(gl.DEPTH_TEST);

gl.useProgram(posProg.ref());

@@ -176,7 +182,8 @@ var renderMulti = function () {

fbo.bind(gl, FBO_GBUFFER_NORMAL);

gl.clear(gl.COLOR_BUFFER_BIT);

-

+ gl.enable(gl.DEPTH_TEST);

+

gl.useProgram(normProg.ref());

//update the normal matrix

@@ -194,7 +201,7 @@ var renderMulti = function () {

fbo.bind(gl, FBO_GBUFFER_COLOR);

gl.clear(gl.COLOR_BUFFER_BIT);

-

+ gl.enable(gl.DEPTH_TEST);

gl.useProgram(colorProg.ref());

gl.uniformMatrix4fv(colorProg.uMVPLoc, false, mvpMat);

@@ -215,26 +222,30 @@ var renderShade = function () {

gl.clear(gl.COLOR_BUFFER_BIT);

// Bind necessary textures

- //gl.activeTexture( gl.TEXTURE0 ); //position

- //gl.bindTexture( gl.TEXTURE_2D, fbo.texture(0) );

- //gl.uniform1i( shadeProg.uPosSamplerLoc, 0 );

-

- //gl.activeTexture( gl.TEXTURE1 ); //normal

- //gl.bindTexture( gl.TEXTURE_2D, fbo.texture(1) );

- //gl.uniform1i( shadeProg.uNormalSamplerLoc, 1 );

+ //comment begin

+ gl.activeTexture( gl.TEXTURE0 ); //position

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(0) );

+ gl.uniform1i( shadeProg.uPosSamplerLoc, 0 );

+ gl.activeTexture( gl.TEXTURE1 ); //normal

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(1) );

+ gl.uniform1i( shadeProg.uNormalSamplerLoc, 1 );

+ //comment end

gl.activeTexture( gl.TEXTURE2 ); //color

gl.bindTexture( gl.TEXTURE_2D, fbo.texture(2) );

gl.uniform1i( shadeProg.uColorSamplerLoc, 2 );

-

- //gl.activeTexture( gl.TEXTURE3 ); //depth

- //gl.bindTexture( gl.TEXTURE_2D, fbo.depthTexture() );

- //gl.uniform1i( shadeProg.uDepthSamplerLoc, 3 );

-

- // Bind necessary uniforms

- //gl.uniform1f( shadeProg.uZNearLoc, zNear );

- //gl.uniform1f( shadeProg.uZFarLoc, zFar );

-

+ //comment begin

+ gl.activeTexture( gl.TEXTURE3 ); //depth

+ gl.bindTexture( gl.TEXTURE_2D, fbo.depthTexture() );

+ gl.uniform1i( shadeProg.uDepthSamplerLoc, 3 );

+

+ //Bind necessary uniforms

+ gl.uniform1f( shadeProg.uZNearLoc, zNear );

+ gl.uniform1f( shadeProg.uZFarLoc, zFar );

+ var light = vec4.create();

+ vec4.transformMat4(light, vec4.fromValues(1.0, 1.0, 100.0, 1.0), camera.getViewTransform());

+ gl.uniform4fv(shadeProg.uLightLoc, light);

+ //comment out

drawQuad(shadeProg);

// Unbind FBO

@@ -279,9 +290,34 @@ var renderPost = function () {

gl.clear(gl.COLOR_BUFFER_BIT);

// Bind necessary textures

- gl.activeTexture( gl.TEXTURE4 );

+ gl.activeTexture( gl.TEXTURE4 ); //shade

gl.bindTexture( gl.TEXTURE_2D, fbo.texture(4) );

- gl.uniform1i(postProg.uShadeSamplerLoc, 4 );

+ gl.uniform1i(postProg.uShadeSamplerLoc, 4);

+

+ gl.activeTexture(gl.TEXTURE0); //position

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(0));

+ gl.uniform1i(postProg.uPosSamplerLoc, 0);

+

+ gl.activeTexture(gl.TEXTURE1); //normal

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(1));

+ gl.uniform1i(postProg.uNormalSamplerLoc, 1);

+

+ gl.activeTexture(gl.TEXTURE2); //color

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(2));

+ gl.uniform1i(postProg.uColorSamplerLoc, 2);

+

+ gl.activeTexture(gl.TEXTURE3); //depth

+ gl.bindTexture(gl.TEXTURE_2D, fbo.depthTexture());

+ gl.uniform1i(postProg.uDepthSamplerLoc, 3);

+

+

+

+ gl.uniform1f(postProg.uZNearLoc, zNear);

+ gl.uniform1f(postProg.uZFarLoc, zFar);

+ gl.uniform1i(postProg.uWidthLoc, canvas.width);

+ gl.uniform1i(postProg.uHeightLoc, canvas.height);

+ gl.uniform1i(postProg.uDisplayTypeLoc, texToDisplay);

+ gl.uniform3fv(postProg.uKernelLoc, kernel);

drawQuad(postProg);

};

@@ -337,6 +373,26 @@ var initCamera = function () {

isDiagnostic = true;

texToDisplay = 4;

break;

+ case 53:

+ isDiagnostic = false;

+ texToDisplay = 5;//blinn

+ break;

+ case 54:

+ isDiagnostic = false;

+ texToDisplay = 6;//bloom

+ break;

+ case 55:

+ isDiagnostic = false;

+ texToDisplay = 7;

+ break;

+ case 56:

+ isDiagnostic = false;

+ texToDisplay = 8;

+ break;

+ case 57:

+ isDiagnostic = false;

+ texToDisplay = 9;

+ break;

}

}

};

@@ -466,6 +522,7 @@ var initShaders = function () {

shadeProg.uZNearLoc = gl.getUniformLocation( shadeProg.ref(), "u_zNear" );

shadeProg.uZFarLoc = gl.getUniformLocation( shadeProg.ref(), "u_zFar" );

shadeProg.uDisplayTypeLoc = gl.getUniformLocation( shadeProg.ref(), "u_displayType" );

+ shadeProg.uLightLoc = gl.getUniformLocation(shadeProg.ref(), "u_Light");

});

CIS565WEBGLCORE.registerAsyncObj(gl, shadeProg);

@@ -477,6 +534,21 @@ var initShaders = function () {

postProg.aVertexTexcoordLoc = gl.getAttribLocation( postProg.ref(), "a_texcoord" );

postProg.uShadeSamplerLoc = gl.getUniformLocation( postProg.ref(), "u_shadeTex");

+ postProg.aVertexTexcoordLoc = gl.getAttribLocation(postProg.ref(), "a_texcoord");

+

+ postProg.uShadeSamplerLoc = gl.getUniformLocation( postProg.ref(), "u_shadeTex");

+ postProg.uPosSamplerLoc = gl.getUniformLocation(postProg.ref(), "u_positionTex");

+ postProg.uNormalSamplerLoc = gl.getUniformLocation(postProg.ref(), "u_normalTex");

+ postProg.uColorSamplerLoc = gl.getUniformLocation(postProg.ref(), "u_colorTex");

+ postProg.uDepthSamplerLoc = gl.getUniformLocation(postProg.ref(), "u_depthTex");

+

+

+ postProg.uZNearLoc = gl.getUniformLocation(postProg.ref(), "u_zNear");

+ postProg.uZFarLoc = gl.getUniformLocation(postProg.ref(), "u_zFar");

+ postProg.uWidthLoc = gl.getUniformLocation(postProg.ref(), "u_width");

+ postProg.uHeightLoc = gl.getUniformLocation(postProg.ref(), "u_height");

+ postProg.uDisplayTypeLoc = gl.getUniformLocation(postProg.ref(), "u_displayType");

+ postProg.uKernelLoc = gl.getUniformLocation(postProg.ref(), "u_kernel");

});

CIS565WEBGLCORE.registerAsyncObj(gl, postProg);

};

@@ -488,3 +560,26 @@ var initFramebuffer = function () {

return;

}

};

+var initKernel = function () {

+ for (var i = 0; i < kernelSize; i++) {

+ var x = Math.random() * 2.0 - 1.0;

+ var y = Math.random() * 2.0 - 1.0;

+ var z = Math.random();

+

+ kernel.push(Math.random() * x, Math.random() * y, Math.random() * z); // flat x,y,z list, as gl.uniform3fv expects

+ }

+};

+function initStats() {

+ stats = new Stats();

+ stats.setMode(0); // 0: fps, 1: ms

+

+ // Align top-left

+ stats.domElement.style.position = 'absolute';

+ stats.domElement.style.left = '0px';

+ stats.domElement.style.top = '0px';

+

+ document.body.appendChild(stats.domElement);

+

+

+ return stats;

+}

\ No newline at end of file
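
Review note: `gl.uniform3fv` expects a flat list of floats (three per vector), so an SSAO kernel is convenient to build directly as a `Float32Array`. The sketch below is one possible variant, not the patch's method: it normalizes each hemisphere sample and applies the same `mix(0.1, 1.0, t * t)` falloff the fragment shader uses. The function name `buildSsaoKernel` and the injectable `random` parameter are assumptions for illustration.

```javascript
// Hypothetical kernel builder: returns sampleCount vec3s packed as
// [x0, y0, z0, x1, y1, z1, ...] in a Float32Array.
function buildSsaoKernel(sampleCount, random) {
  random = random || Math.random;
  var kernel = new Float32Array(sampleCount * 3);
  for (var i = 0; i < sampleCount; i++) {
    // Random direction in the +z hemisphere (tangent space).
    var x = random() * 2.0 - 1.0;
    var y = random() * 2.0 - 1.0;
    var z = random();
    var len = Math.sqrt(x * x + y * y + z * z) || 1.0; // avoid dividing by zero
    // Equivalent of GLSL mix(0.1, 1.0, t * t): cluster samples near the origin.
    var t = i / sampleCount;
    var scale = 0.1 + 0.9 * t * t;
    kernel[i * 3] = (x / len) * scale;
    kernel[i * 3 + 1] = (y / len) * scale;
    kernel[i * 3 + 2] = (z / len) * scale;
  }
  return kernel;
}
```

It could be uploaded with `gl.uniform3fv(postProg.uKernelLoc, buildSsaoKernel(kernelSize));`, provided `kernelSize` matches the size of the `u_kernel` array declared in the shader.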

diff --git a/js/main.js.bak b/js/main.js.bak

new file mode 100644

index 0000000..972ff86

--- /dev/null

+++ b/js/main.js.bak

@@ -0,0 +1,587 @@

+// Written by Harmony Li. Based on Cheng-Tso Lin's CIS 700 starter engine.

+// CIS 565 : GPU Programming, Fall 2014.

+// University of Pennsylvania (c) 2014.

+

+// Global Variables

+var canvas; // Canvas DOM Element

+var gl; // GL context object

+var camera; // Camera object

+var interactor; // Camera interaction object

+var objloader; // OBJ loader

+

+// Models

+var model; // Model object

+var quad = {}; // Empty object for full-screen quad

+

+//for SSAO

+var kernel = []; // Random samples for SSAO to use

+var kernelSize = 100;

+// Framebuffer

+var fbo = null;

+

+// Shader programs

+var passProg; // Shader program for G-Buffer

+var shadeProg; // Shader program for P-Buffer

+var diagProg; // Shader program from diagnostic

+var postProg; // Shader for post-process effects

+

+// Multi-Pass programs

+var posProg;

+var normProg;

+var colorProg;

+

+var isDiagnostic = true;

+var zNear = 20;

+var zFar = 2000;

+var texToDisplay = 1;

+var stats;

+var main = function (canvasId, messageId) {

+ var canvas;

+ stats = initStats();

+ // Initialize WebGL

+ initGL(canvasId, messageId);

+

+ // Set up camera

+ initCamera(canvas);

+

+ // Set up FBOs

+ initFramebuffer();

+

+ // Set up models

+ initObjs();

+

+ // Set up shaders

+ initShaders();

+ initKernel();

+ // Register our render callbacks

+ CIS565WEBGLCORE.render = render;

+ CIS565WEBGLCORE.renderLoop = renderLoop;

+

+ // Start the rendering loop

+ CIS565WEBGLCORE.run(gl);

+};

+

+var renderLoop = function () {

+ window.requestAnimationFrame(renderLoop);

+ render();

+};

+

+var render = function () {

+ if (stats)

+ stats.update();

+ if (fbo.isMultipleTargets()) {

+ renderPass();

+ } else {

+ renderMulti();

+ }

+

+ if (!isDiagnostic) {

+ renderShade();

+ renderPost();

+ } else {

+ renderDiagnostic();

+ }

+

+ gl.useProgram(null);

+};

+

+var drawModel = function (program, mask) {

+ // Bind attributes

+ for(var i = 0; i < model.numGroups(); i++) {

+ if (mask & 0x1) {

+ gl.bindBuffer(gl.ARRAY_BUFFER, model.vbo(i));

+ gl.vertexAttribPointer(program.aVertexPosLoc, 3, gl.FLOAT, false, 0, 0);

+ gl.enableVertexAttribArray(program.aVertexPosLoc);

+ }

+

+ if (mask & 0x2) {

+ gl.bindBuffer(gl.ARRAY_BUFFER, model.nbo(i));

+ gl.vertexAttribPointer(program.aVertexNormalLoc, 3, gl.FLOAT, false, 0, 0);

+ gl.enableVertexAttribArray(program.aVertexNormalLoc);

+ }

+

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, model.ibo(i));

+ gl.drawElements(gl.TRIANGLES, model.iboLength(i), gl.UNSIGNED_SHORT, 0);

+ }

+

+ if (mask & 0x1) gl.disableVertexAttribArray(program.aVertexPosLoc);

+ if (mask & 0x2) gl.disableVertexAttribArray(program.aVertexNormalLoc);

+

+ gl.bindBuffer(gl.ARRAY_BUFFER, null);

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, null);

+};

+

+var drawQuad = function (program) {

+ gl.bindBuffer(gl.ARRAY_BUFFER, quad.vbo);

+ gl.vertexAttribPointer(program.aVertexPosLoc, 3, gl.FLOAT, false, 0, 0);

+ gl.enableVertexAttribArray(program.aVertexPosLoc);

+

+ gl.bindBuffer(gl.ARRAY_BUFFER, quad.tbo);

+ gl.vertexAttribPointer(program.aVertexTexcoordLoc, 2, gl.FLOAT, false, 0, 0);

+ gl.enableVertexAttribArray(program.aVertexTexcoordLoc);

+

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, quad.ibo);

+ gl.drawElements(gl.TRIANGLES, 6, gl.UNSIGNED_SHORT, 0);

+

+ gl.disableVertexAttribArray(program.aVertexPosLoc);

+ gl.disableVertexAttribArray(program.aVertexTexcoordLoc);

+

+ gl.bindBuffer(gl.ARRAY_BUFFER, null);

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, null);

+};

+

+var renderPass = function () {

+ // Bind framebuffer object for gbuffer

+ fbo.bind(gl, FBO_GBUFFER);

+

+ gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

+ gl.enable(gl.DEPTH_TEST);

+

+ gl.useProgram(passProg.ref());

+

+ //update the model-view matrix

+ var mvpMat = mat4.create();

+ mat4.multiply( mvpMat, persp, camera.getViewTransform() );

+

+ //update the normal matrix

+ var nmlMat = mat4.create();

+ mat4.invert( nmlMat, camera.getViewTransform() );

+ mat4.transpose( nmlMat, nmlMat);

+

+ gl.uniformMatrix4fv( passProg.uModelViewLoc, false, camera.getViewTransform());

+ gl.uniformMatrix4fv( passProg.uMVPLoc, false, mvpMat );

+ gl.uniformMatrix4fv( passProg.uNormalMatLoc, false, nmlMat );

+

+ drawModel(passProg, 0x3);

+

+ // Unbind framebuffer

+ fbo.unbind(gl);

+};

+

+var renderMulti = function () {

+ fbo.bind(gl, FBO_GBUFFER_POSITION);

+

+ gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

+ //gl.clear(gl.COLOR_BUFFER_BIT);//add

+ gl.enable(gl.DEPTH_TEST);

+

+ gl.useProgram(posProg.ref());

+

+ //update the model-view matrix

+ var mvpMat = mat4.create();

+ mat4.multiply( mvpMat, persp, camera.getViewTransform() );

+

+ gl.uniformMatrix4fv( posProg.uModelViewLoc, false, camera.getViewTransform());

+ gl.uniformMatrix4fv( posProg.uMVPLoc, false, mvpMat );

+

+ drawModel(posProg, 1);

+

+ gl.disable(gl.DEPTH_TEST);

+ fbo.unbind(gl);

+ gl.useProgram(null);

+

+ fbo.bind(gl, FBO_GBUFFER_NORMAL);

+ gl.clear(gl.COLOR_BUFFER_BIT);

+ gl.enable(gl.DEPTH_TEST);

+

+ gl.useProgram(normProg.ref());

+

+ //update the normal matrix

+ var nmlMat = mat4.create();

+ mat4.invert( nmlMat, camera.getViewTransform() );

+ mat4.transpose( nmlMat, nmlMat);

+

+ gl.uniformMatrix4fv(normProg.uMVPLoc, false, mvpMat);

+ gl.uniformMatrix4fv(normProg.uNormalMatLoc, false, nmlMat);

+

+ drawModel(normProg, 3);

+

+ gl.useProgram(null);

+ fbo.unbind(gl);

+

+ fbo.bind(gl, FBO_GBUFFER_COLOR);

+ gl.clear(gl.COLOR_BUFFER_BIT);

+ gl.enable(gl.DEPTH_TEST);

+ gl.useProgram(colorProg.ref());

+

+ gl.uniformMatrix4fv(colorProg.uMVPLoc, false, mvpMat);

+

+ drawModel(colorProg, 1);

+

+ gl.useProgram(null);

+ fbo.unbind(gl);

+};

+

+var renderShade = function () {

+ gl.useProgram(shadeProg.ref());

+ gl.disable(gl.DEPTH_TEST);

+

+ // Bind FBO

+ fbo.bind(gl, FBO_PBUFFER);

+

+ gl.clear(gl.COLOR_BUFFER_BIT);

+

+ // Bind necessary textures

+ //comment begin

+ gl.activeTexture( gl.TEXTURE0 ); //position

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(0) );

+ gl.uniform1i( shadeProg.uPosSamplerLoc, 0 );

+

+ gl.activeTexture( gl.TEXTURE1 ); //normal

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(1) );

+ gl.uniform1i( shadeProg.uNormalSamplerLoc, 1 );

+ //comment end

+ gl.activeTexture( gl.TEXTURE2 ); //color

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(2) );

+ gl.uniform1i( shadeProg.uColorSamplerLoc, 2 );

+ //comment begin

+ gl.activeTexture( gl.TEXTURE3 ); //depth

+ gl.bindTexture( gl.TEXTURE_2D, fbo.depthTexture() );

+ gl.uniform1i( shadeProg.uDepthSamplerLoc, 3 );

+

+ //Bind necessary uniforms

+ gl.uniform1f( shadeProg.uZNearLoc, zNear );

+ gl.uniform1f( shadeProg.uZFarLoc, zFar );

+ var light = vec4.create();

+ vec4.transformMat4(light, vec4.fromValues(1.0, 1.0, 100.0, 1.0), camera.getViewTransform());

+ gl.uniform4fv(shadeProg.uLightLoc, light);

+ //comment out

+ drawQuad(shadeProg);

+

+ // Unbind FBO

+ fbo.unbind(gl);

+};

+

+var renderDiagnostic = function () {

+ gl.useProgram(diagProg.ref());

+

+ gl.disable(gl.DEPTH_TEST);

+ gl.clear(gl.COLOR_BUFFER_BIT);

+

+ // Bind necessary textures

+ gl.activeTexture( gl.TEXTURE0 ); //position

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(0) );

+ gl.uniform1i( diagProg.uPosSamplerLoc, 0 );

+

+ gl.activeTexture( gl.TEXTURE1 ); //normal

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(1) );

+ gl.uniform1i( diagProg.uNormalSamplerLoc, 1 );

+

+ gl.activeTexture( gl.TEXTURE2 ); //color

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(2) );

+ gl.uniform1i( diagProg.uColorSamplerLoc, 2 );

+

+ gl.activeTexture( gl.TEXTURE3 ); //depth

+ gl.bindTexture( gl.TEXTURE_2D, fbo.depthTexture() );

+ gl.uniform1i( diagProg.uDepthSamplerLoc, 3 );

+

+ // Bind necessary uniforms

+ gl.uniform1f( diagProg.uZNearLoc, zNear );

+ gl.uniform1f( diagProg.uZFarLoc, zFar );

+ gl.uniform1i( diagProg.uDisplayTypeLoc, texToDisplay );

+

+ drawQuad(diagProg);

+};

+

+var renderPost = function () {

+ gl.useProgram(postProg.ref());

+

+ gl.disable(gl.DEPTH_TEST);

+ gl.clear(gl.COLOR_BUFFER_BIT);

+

+ // Bind necessary textures

+ gl.activeTexture( gl.TEXTURE4 ); //shade

+ gl.bindTexture( gl.TEXTURE_2D, fbo.texture(4) );

+ gl.uniform1i(postProg.uShadeSamplerLoc, 4);

+

+ gl.activeTexture(gl.TEXTURE0); //position

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(0));

+ gl.uniform1i(postProg.uPosSamplerLoc, 0);

+

+ gl.activeTexture(gl.TEXTURE1); //normal

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(1));

+ gl.uniform1i(postProg.uNormalSamplerLoc, 1);

+

+ gl.activeTexture(gl.TEXTURE2); //color

+ gl.bindTexture(gl.TEXTURE_2D, fbo.texture(2));

+ gl.uniform1i(postProg.uColorSamplerLoc, 2);

+

+ gl.activeTexture(gl.TEXTURE3); //depth

+ gl.bindTexture(gl.TEXTURE_2D, fbo.depthTexture());

+ gl.uniform1i(postProg.uDepthSamplerLoc, 3);

+

+ // Bind necessary uniforms

+ gl.uniform1f(postProg.uZNearLoc, zNear);

+ gl.uniform1f(postProg.uZFarLoc, zFar);

+ gl.uniform1i(postProg.uWidthLoc, canvas.width);

+ gl.uniform1i(postProg.uHeightLoc, canvas.height);

+ gl.uniform1i(postProg.uDisplayTypeLoc, texToDisplay);

+ gl.uniform3fv(postProg.uKernelLoc, kernel);

+

+ drawQuad(postProg);

+};

+

+var initGL = function (canvasId, messageId) {

+ var msg;

+

+ // Get WebGL context

+ canvas = document.getElementById(canvasId);

+ msg = document.getElementById(messageId);

+ gl = CIS565WEBGLCORE.getWebGLContext(canvas, msg);

+

+ if (!gl) {

+ return; // bail out if no WebGL context is available

+ }

+

+ // Set up initial WebGL state: viewport, clear color, depth test

+ gl.viewport(0, 0, canvas.width, canvas.height);

+ gl.clearColor(0.3, 0.3, 0.3, 1.0);

+ gl.enable(gl.DEPTH_TEST);

+ gl.depthFunc(gl.LESS);

+};

+

+var initCamera = function () {

+ // Set up the camera

+ persp = mat4.create();

+ mat4.perspective(persp, todeg(60), canvas.width / canvas.height, 0.1, 2000);

+

+ camera = CIS565WEBGLCORE.createCamera(CAMERA_TRACKING_TYPE);

+ camera.goHome([0, 0, 4]);

+ interactor = CIS565WEBGLCORE.CameraInteractor(camera, canvas);

+

+ // Add key-input controls

+ window.onkeydown = function (e) {

+ interactor.onKeyDown(e);

+ // keyCodes 48-57 are the top-row digit keys '0'-'9'
+ switch(e.keyCode) {

+ case 48:

+ isDiagnostic = false;

+ break;

+ case 49:

+ isDiagnostic = true;

+ texToDisplay = 1;

+ break;

+ case 50:

+ isDiagnostic = true;

+ texToDisplay = 2;

+ break;

+ case 51:

+ isDiagnostic = true;

+ texToDisplay = 3;

+ break;

+ case 52:

+ isDiagnostic = true;

+ texToDisplay = 4;

+ break;

+ case 53:

+ isDiagnostic = false;

+ texToDisplay = 5; // blinn

+ break;

+ case 54:

+ isDiagnostic = false;

+ texToDisplay = 6; // bloom

+ break;

+ case 55:

+ isDiagnostic = false;

+ texToDisplay = 7;

+ break;

+ case 56:

+ isDiagnostic = false;

+ texToDisplay = 8;

+ break;

+ case 57:

+ isDiagnostic = false;

+ texToDisplay = 9;

+ break;

+ }

+ };

+};

+

+var initObjs = function () {

+ // Create an OBJ loader

+ objloader = CIS565WEBGLCORE.createOBJLoader();

+

+ // Load the OBJ from file

+ objloader.loadFromFile(gl, "assets/models/suzanne.obj", null);

+

+ // Add callback to upload the vertices once loaded

+ objloader.addCallback(function () {

+ model = new Model(gl, objloader);

+ });

+

+ // Register callback item

+ CIS565WEBGLCORE.registerAsyncObj(gl, objloader);

+

+ // Initialize full-screen quad

+ quad.vbo = gl.createBuffer();

+ quad.ibo = gl.createBuffer();

+ quad.tbo = gl.createBuffer();

+

+ gl.bindBuffer(gl.ARRAY_BUFFER, quad.vbo);

+ gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(screenQuad.vertices), gl.STATIC_DRAW);

+

+ gl.bindBuffer(gl.ARRAY_BUFFER, quad.tbo);

+ gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(screenQuad.texcoords), gl.STATIC_DRAW);

+ gl.bindBuffer(gl.ARRAY_BUFFER, null);

+

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, quad.ibo);

+ gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, new Uint16Array(screenQuad.indices), gl.STATIC_DRAW);

+ gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, null);

+};

+

+var initShaders = function () {

+ if (fbo.isMultipleTargets()) {

+ // Create a shader program for rendering the object we are loading

+ passProg = CIS565WEBGLCORE.createShaderProgram();

+

+ // Load the shader source asynchronously

+ passProg.loadShader(gl, "assets/shader/deferred/pass.vert", "assets/shader/deferred/pass.frag");

+

+ // Register the necessary callback functions

+ passProg.addCallback( function() {

+ gl.useProgram(passProg.ref());

+

+ // Look up attribute and uniform locations

+ passProg.aVertexPosLoc = gl.getAttribLocation( passProg.ref(), "a_pos" );

+ passProg.aVertexNormalLoc = gl.getAttribLocation( passProg.ref(), "a_normal" );

+ passProg.aVertexTexcoordLoc = gl.getAttribLocation( passProg.ref(), "a_texcoord" );

+

+ passProg.uPerspLoc = gl.getUniformLocation( passProg.ref(), "u_projection" );

+ passProg.uModelViewLoc = gl.getUniformLocation( passProg.ref(), "u_modelview" );

+ passProg.uMVPLoc = gl.getUniformLocation( passProg.ref(), "u_mvp" );

+ passProg.uNormalMatLoc = gl.getUniformLocation( passProg.ref(), "u_normalMat");

+ passProg.uSamplerLoc = gl.getUniformLocation( passProg.ref(), "u_sampler");

+ });

+

+ CIS565WEBGLCORE.registerAsyncObj(gl, passProg);

+ } else {

+ posProg = CIS565WEBGLCORE.createShaderProgram();

+ posProg.loadShader(gl, "assets/shader/deferred/posPass.vert", "assets/shader/deferred/posPass.frag");

+ posProg.addCallback(function() {

+ posProg.aVertexPosLoc = gl.getAttribLocation(posProg.ref(), "a_pos");

+

+ posProg.uModelViewLoc = gl.getUniformLocation(posProg.ref(), "u_modelview");

+ posProg.uMVPLoc = gl.getUniformLocation(posProg.ref(), "u_mvp");

+ });

+

+ CIS565WEBGLCORE.registerAsyncObj(gl, posProg);

+