From 35a612f8765347d4fc24a2defb68cf66098762d1 Mon Sep 17 00:00:00 2001

From: Desprez Aurélien <45626870+aurelien45100@users.noreply.github.com>

Date: Thu, 16 Jun 2022 18:18:07 +0200

Subject: [PATCH 1/4] Update INSTALL.md

Added the description of the initial S3 configuration.

---

INSTALL.md | 91 +++++++++++++++++++++++++++++++++++++++++++++++++++---

1 file changed, 86 insertions(+), 5 deletions(-)

diff --git a/INSTALL.md b/INSTALL.md

index 4183eb0..6d1d3bf 100644

--- a/INSTALL.md

+++ b/INSTALL.md

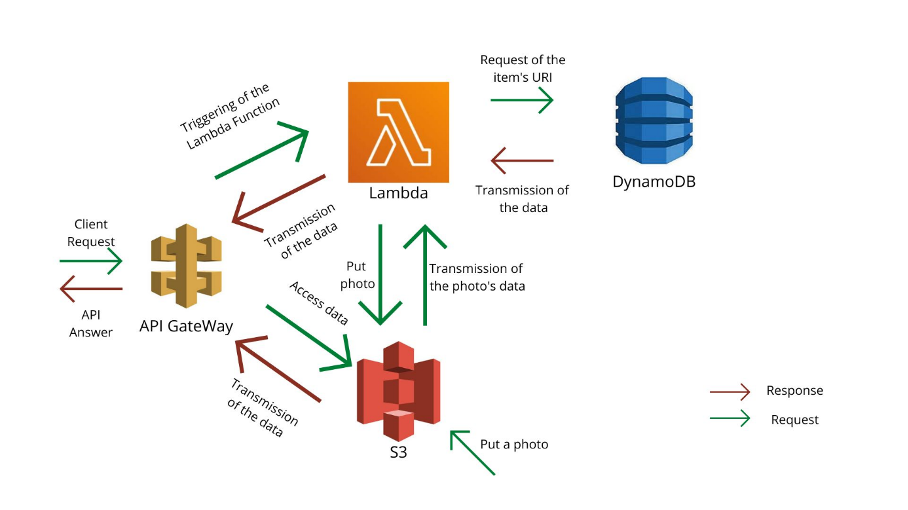

@@ -29,17 +29,98 @@ We are going to start by configurating all the aws elements of the project and f

#### **S3 Configuration**

-For this project, we only need to create a S3 bucket. To do so:

+For this project, S3 requires three configuration steps:

+1. Create an "S3" bucket.

+2. Change bucket policy and make the bucket private.

+3. Create the "Thumbnail" and "Optimized" default folders.

+

+##### **1. CREATE AN "S3" BUCKET**

+

* Go to your AWS console (https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3)

-* Click on create a bucket

+* Click on "Create bucket" :

+  +

+

+

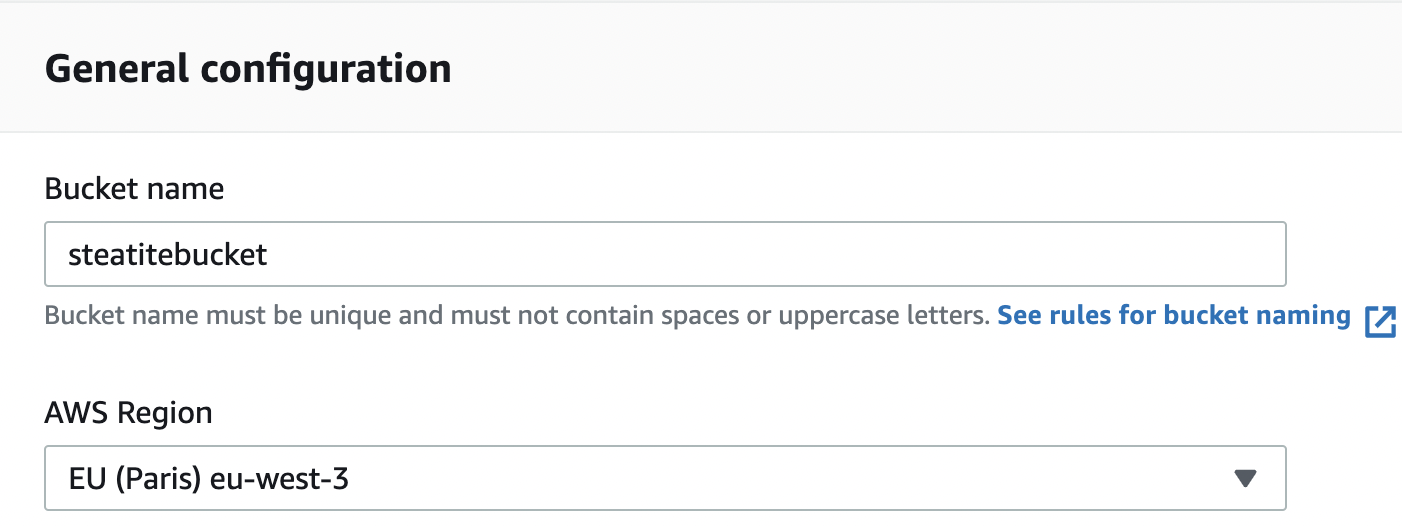

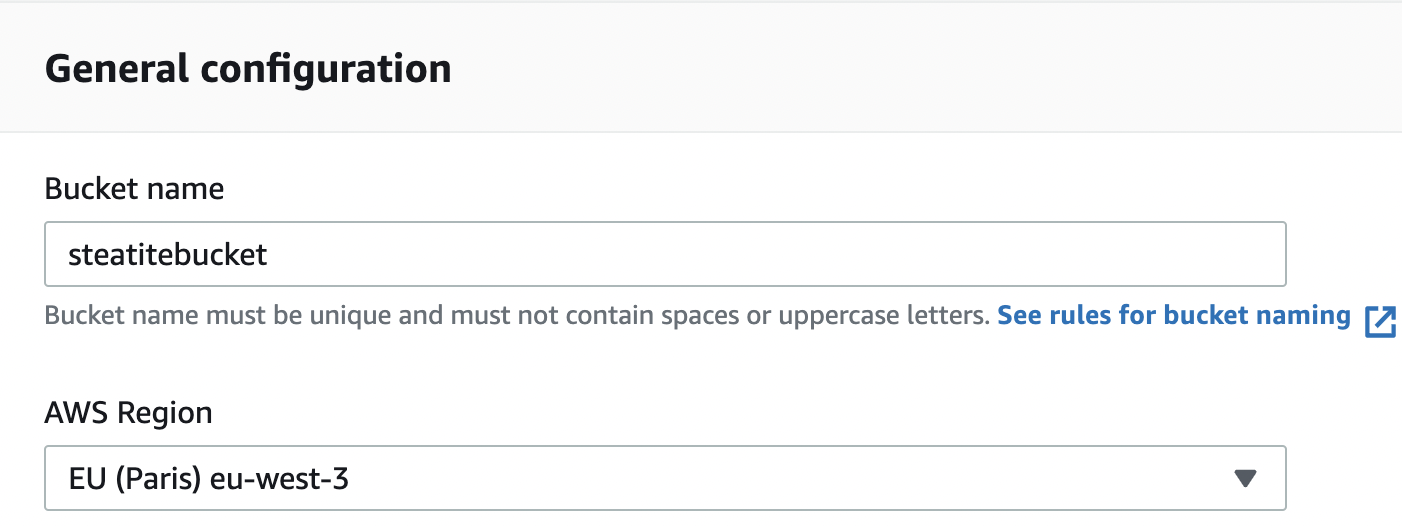

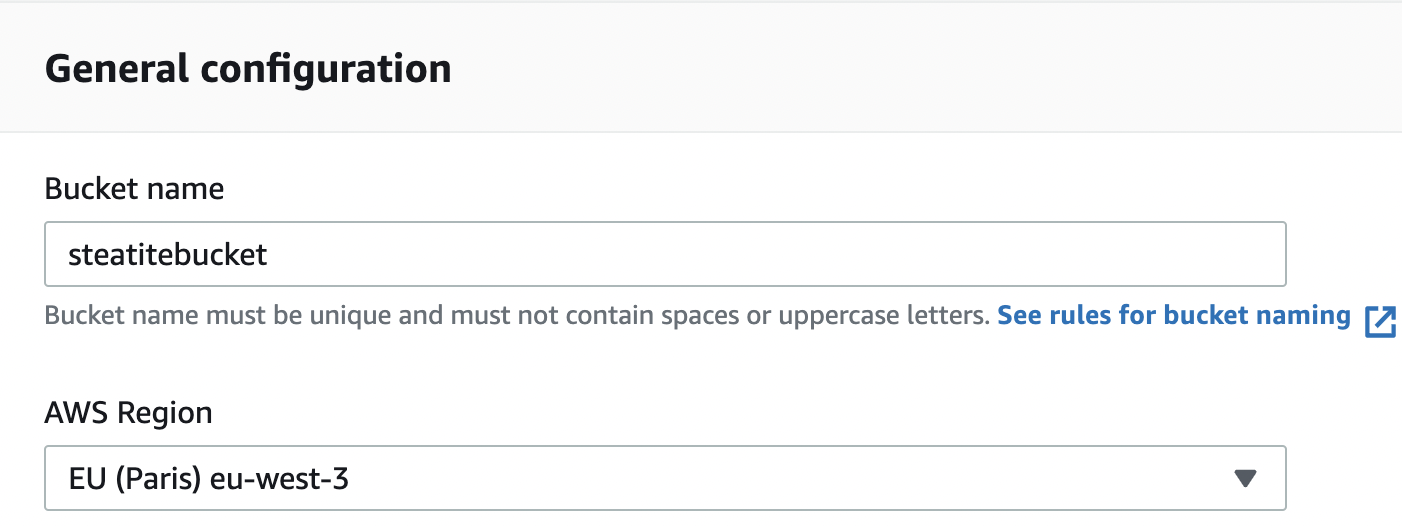

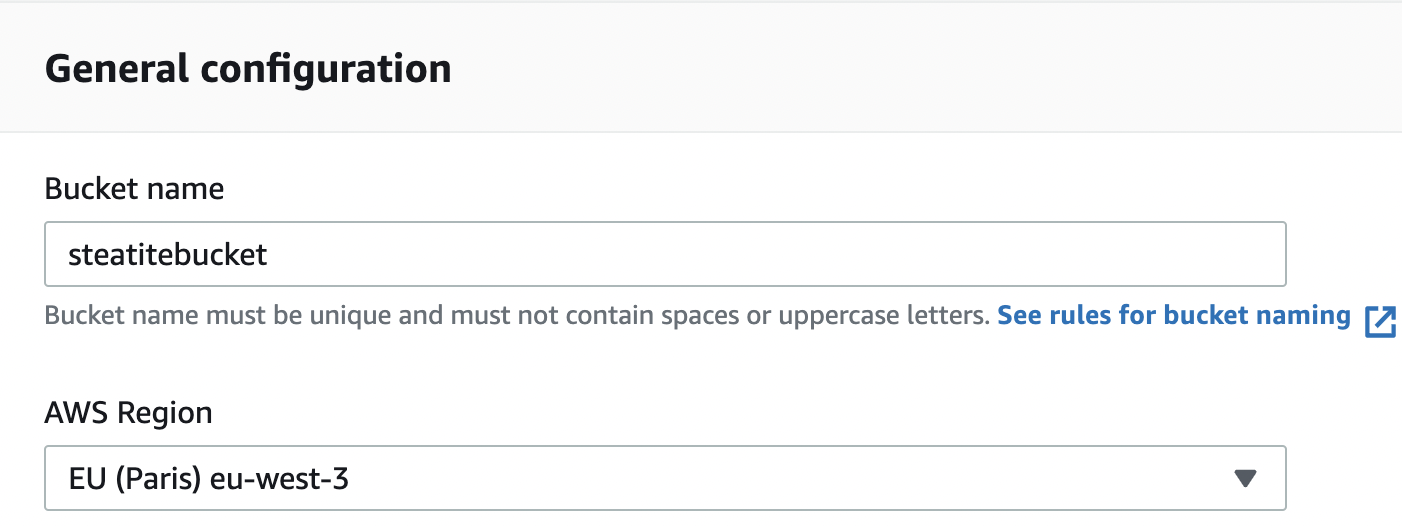

* Configure your bucket:

- * Give a name.

+ * Give it a name (*for example "steatitebucket"*).

* Make sure the region is the one you want to use.

- * Make sur all the accesses are blocked (we will modify them after).

- * Click on create a bucket.

+

+  +

+

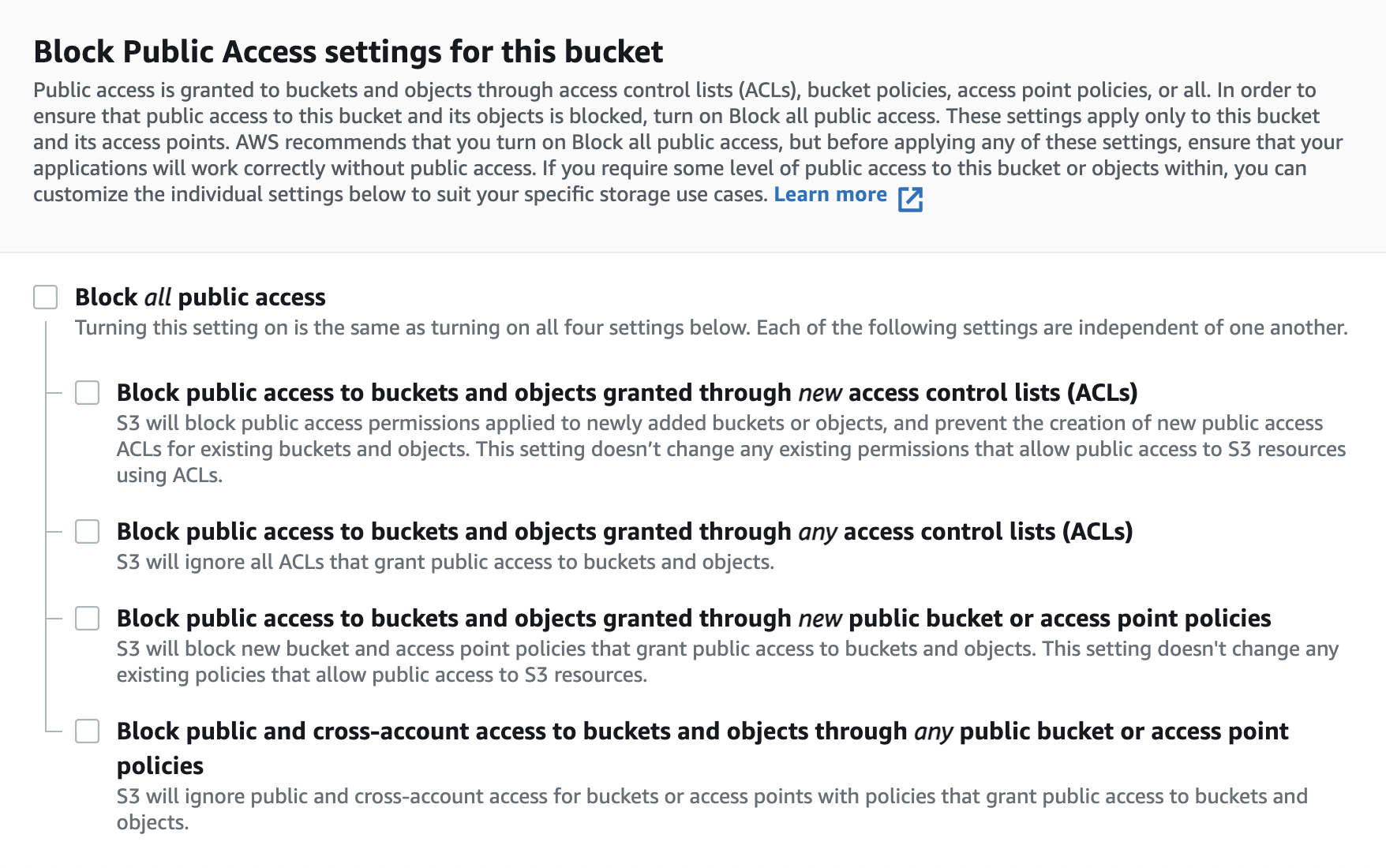

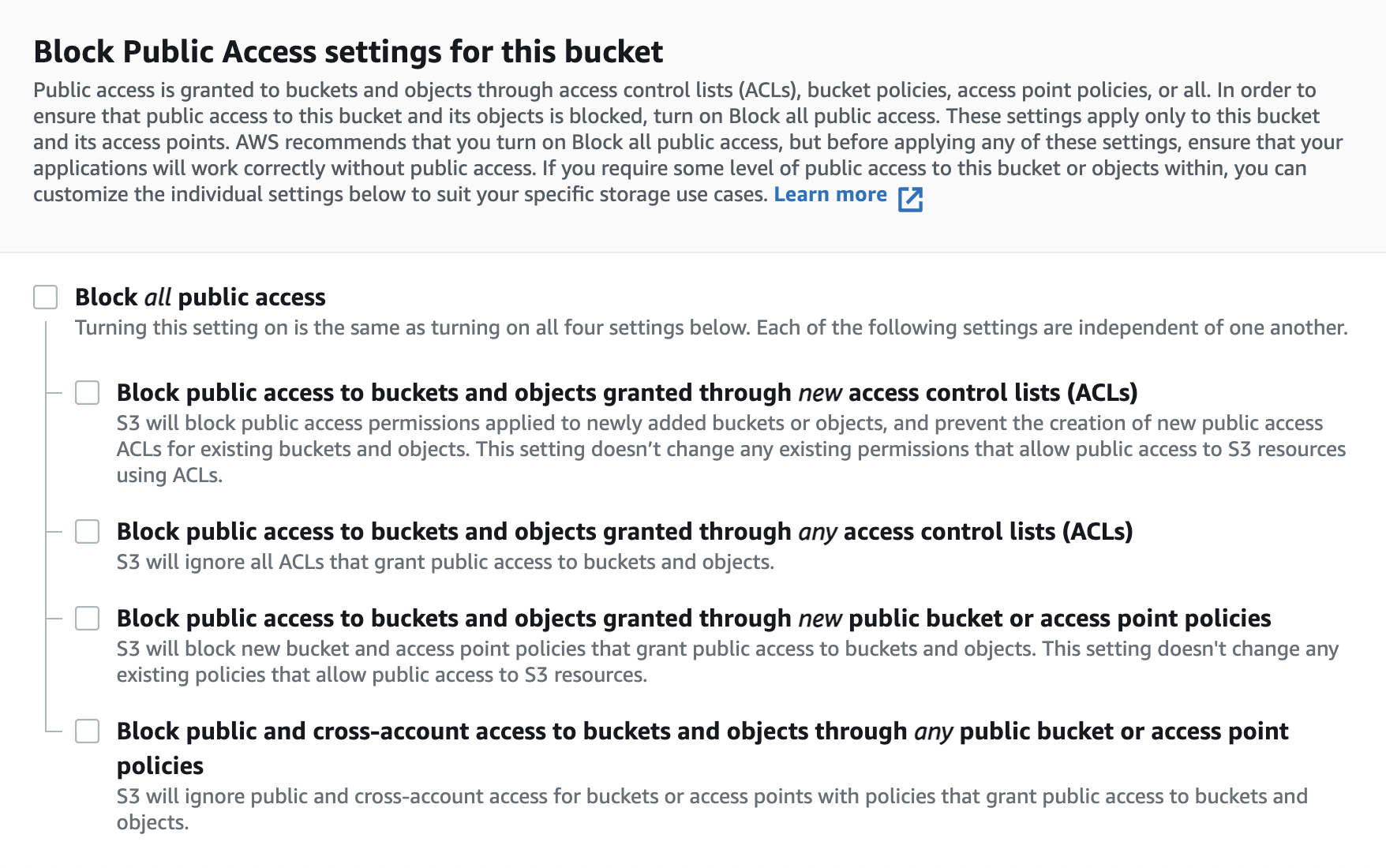

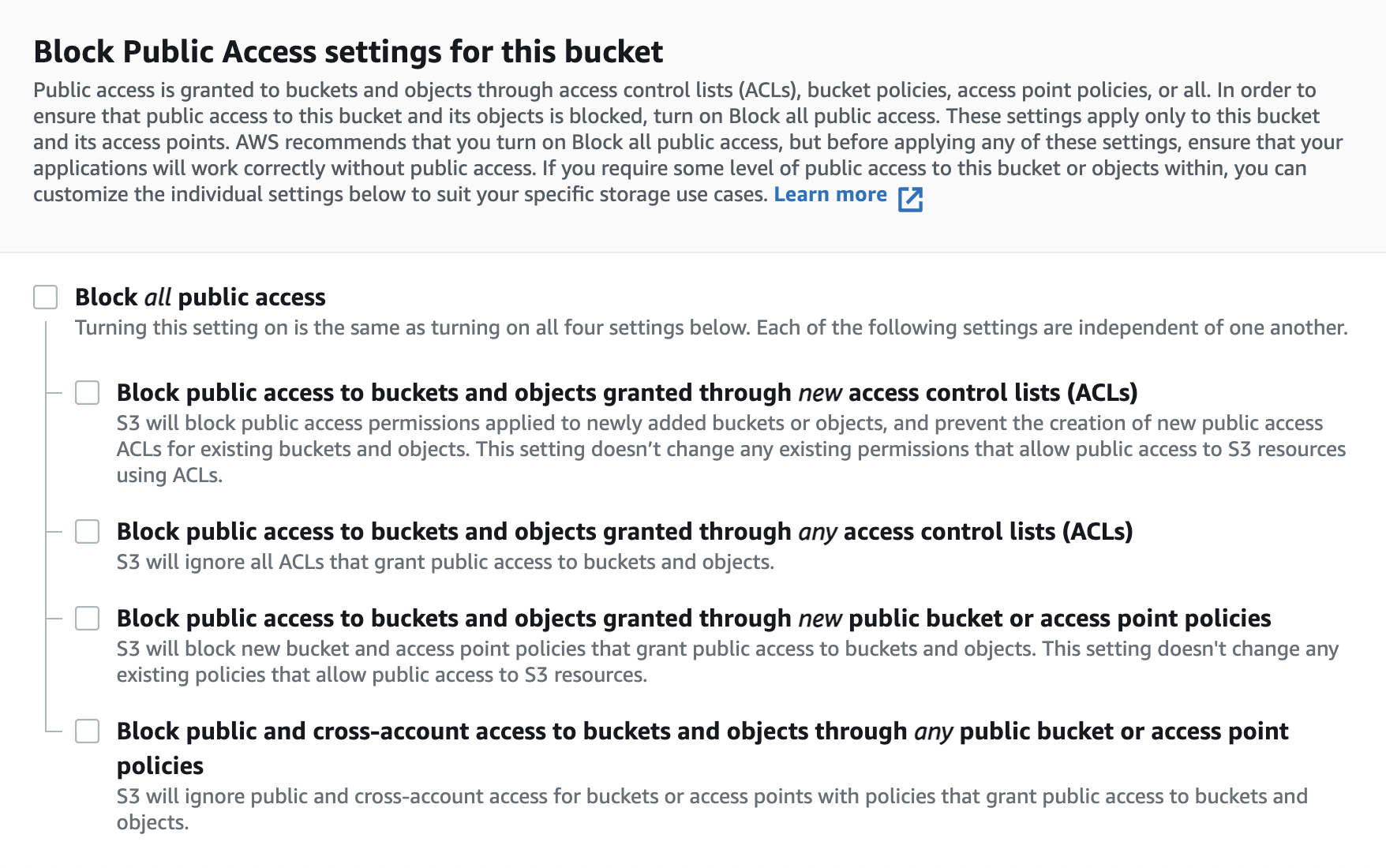

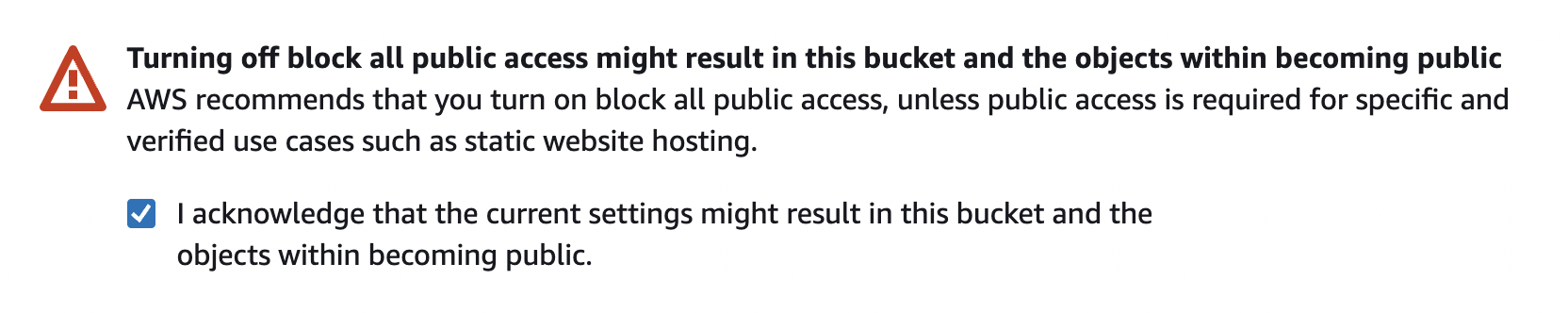

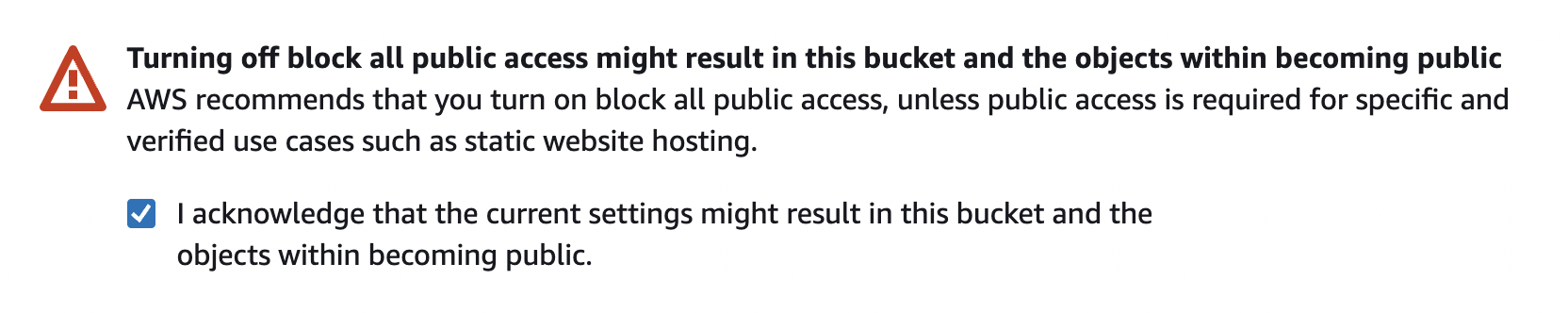

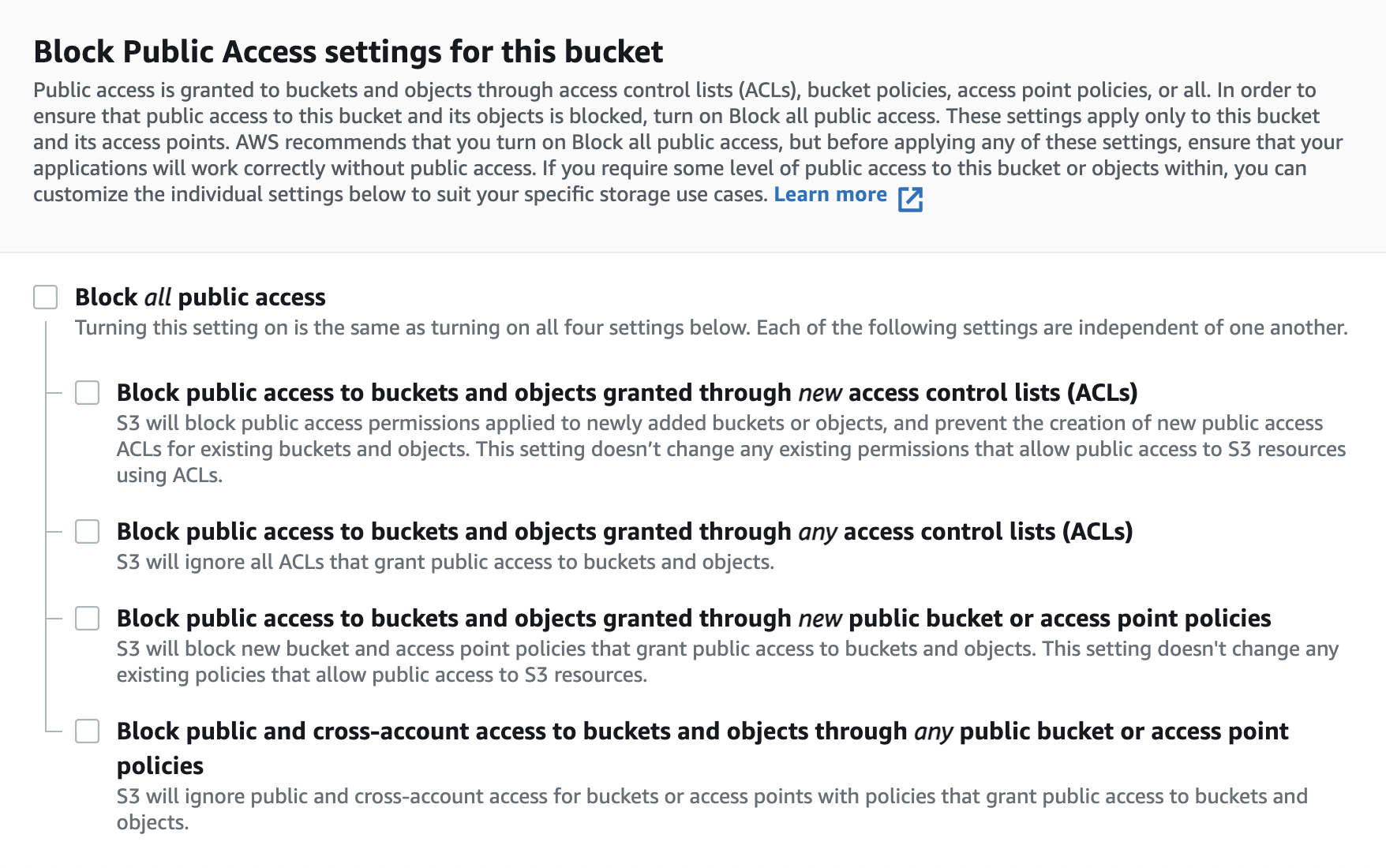

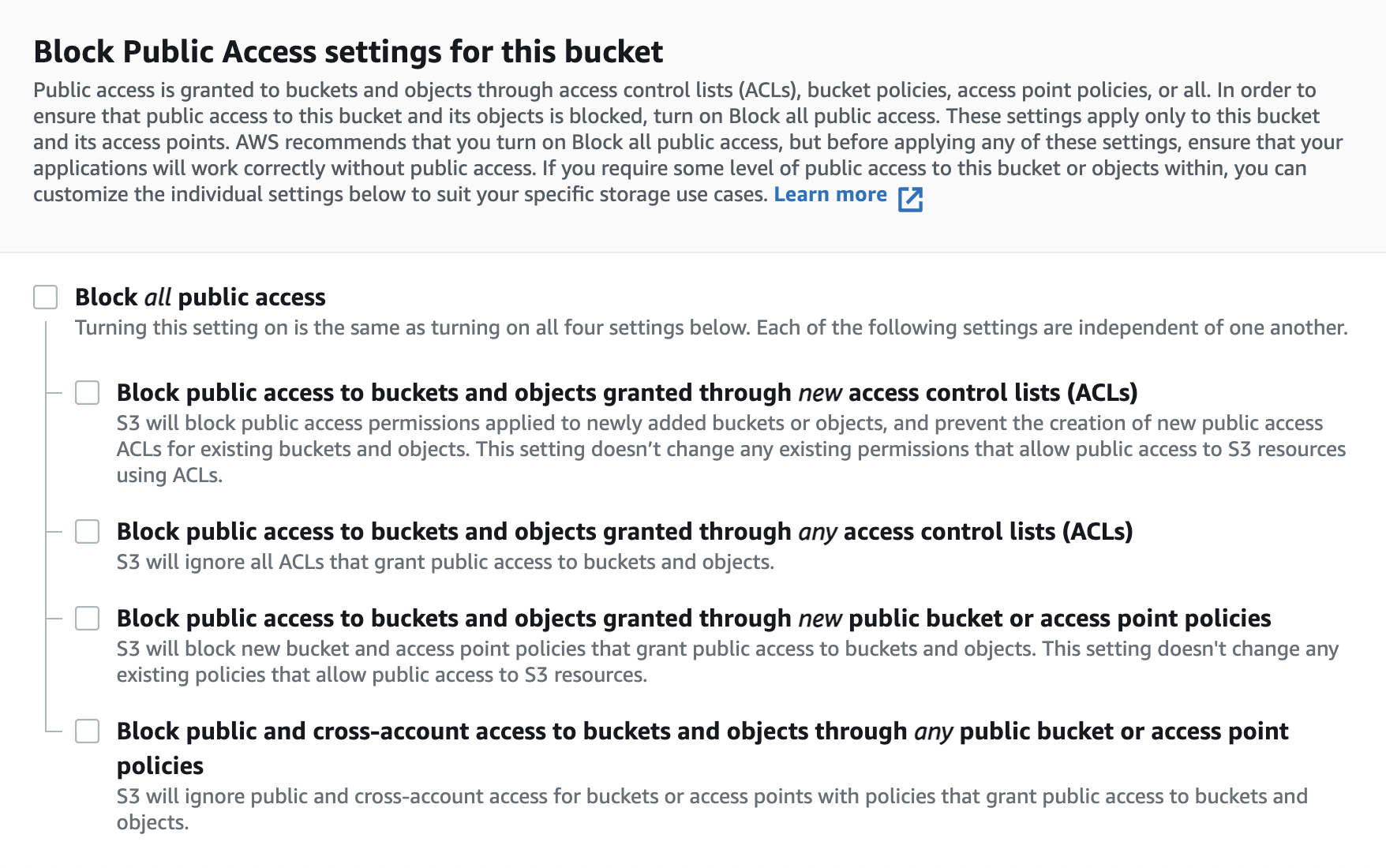

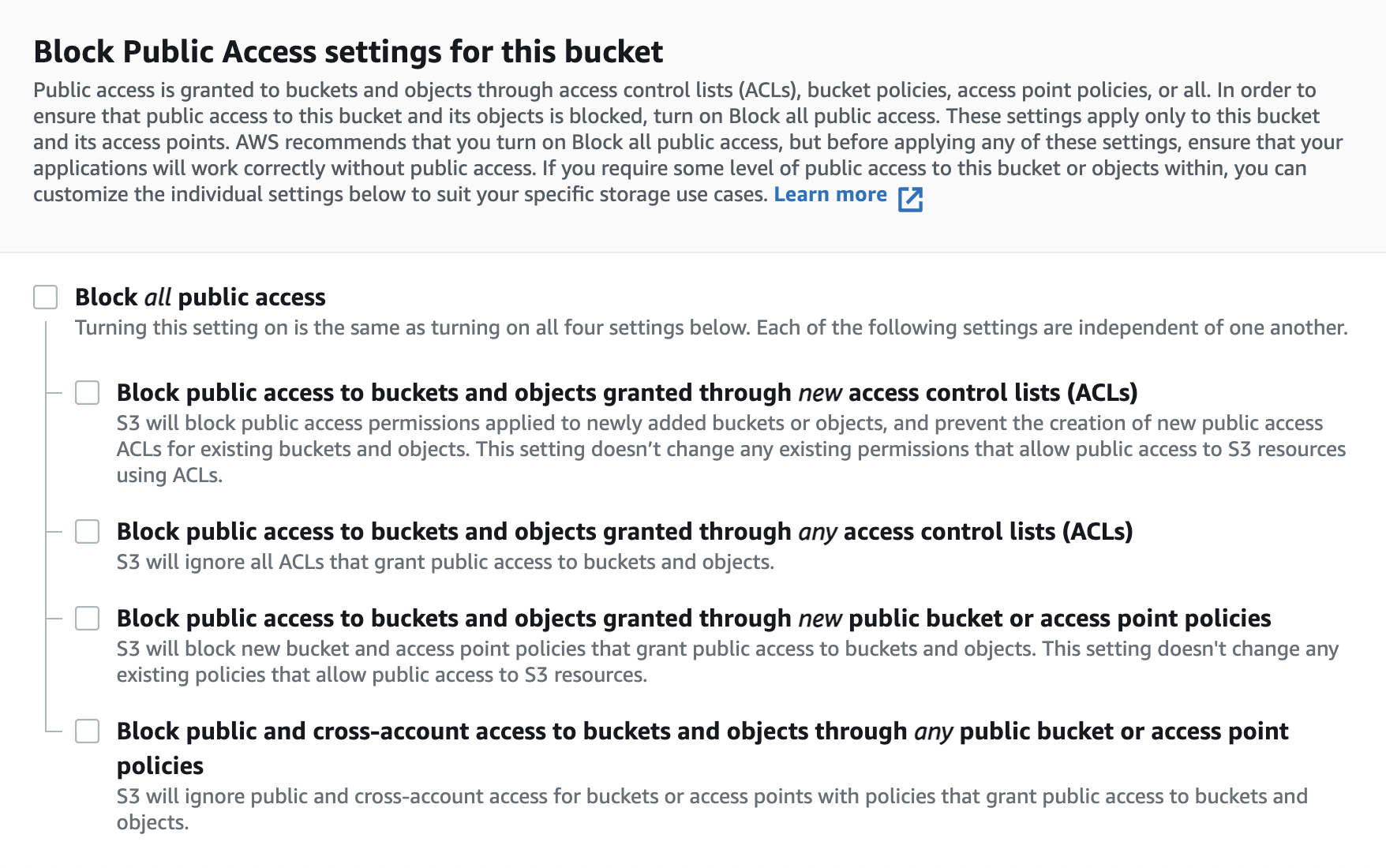

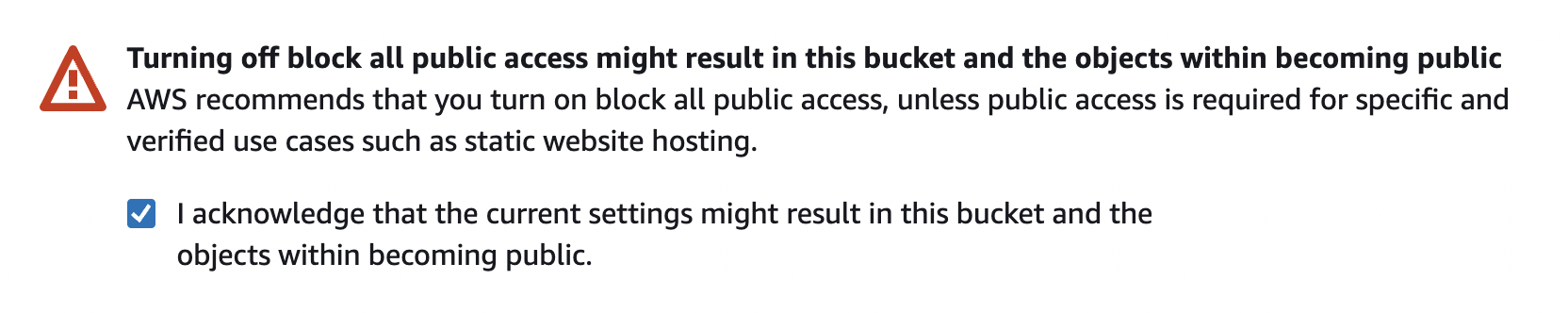

+ * Uncheck **Block Public Access settings for this bucket** so that the **Bucket policy** can be modified after the bucket is created.

+

+

+

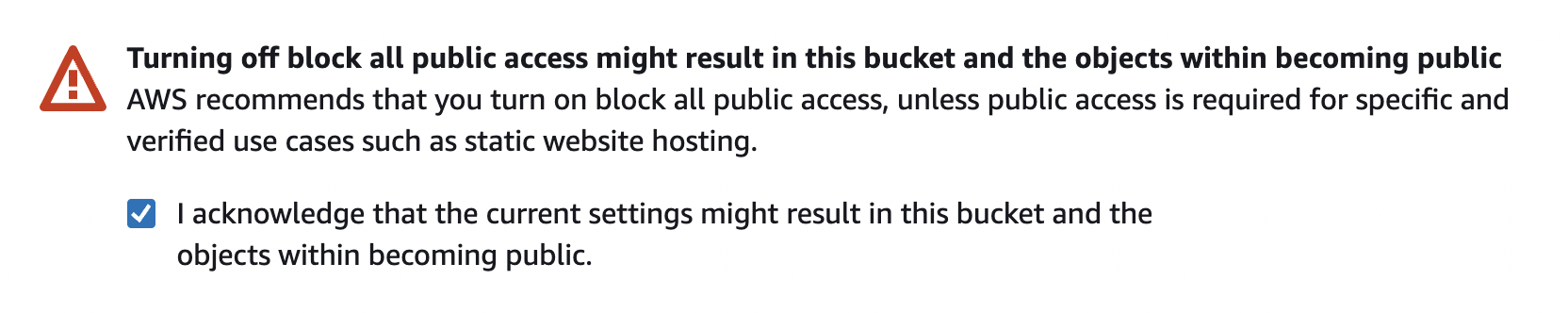

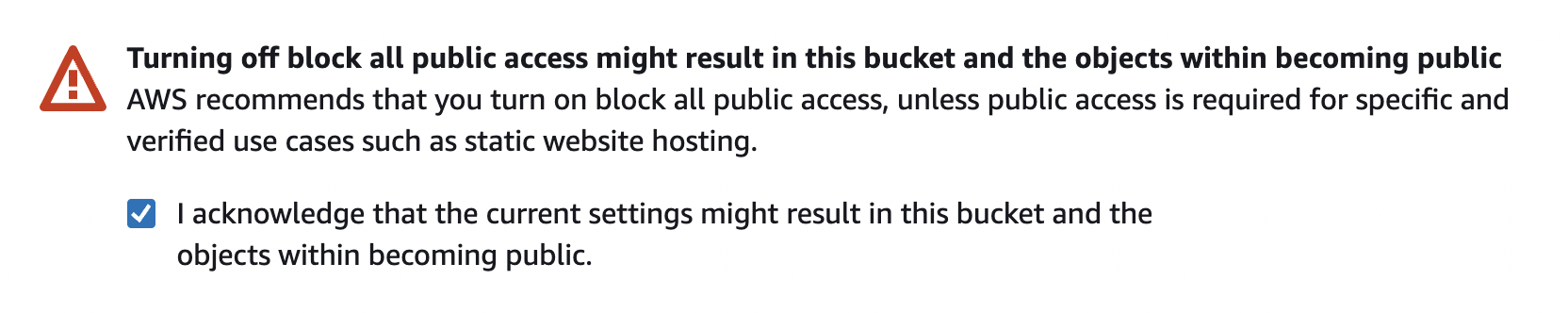

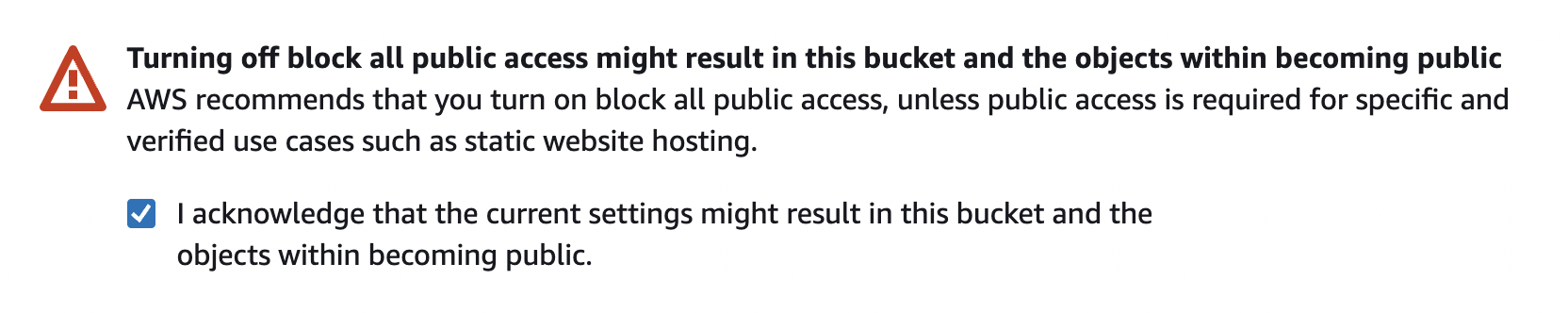

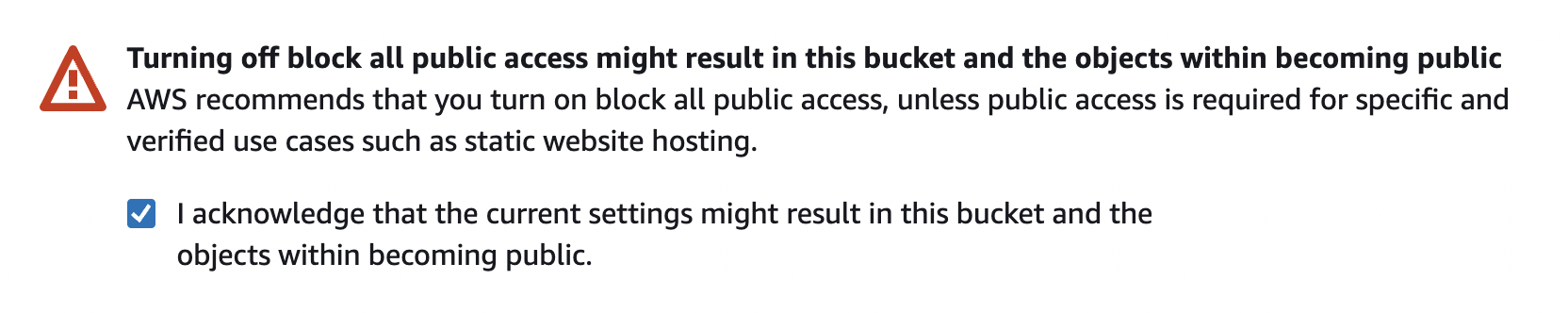

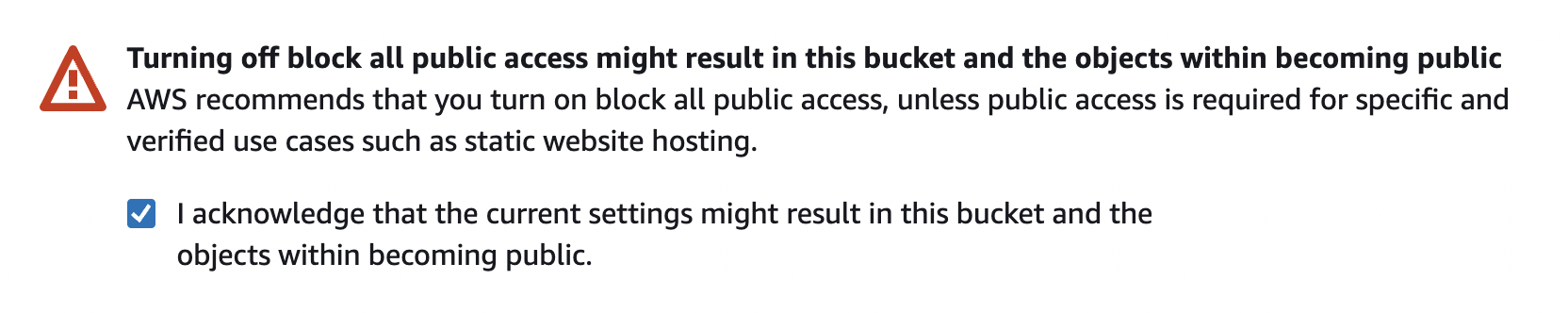

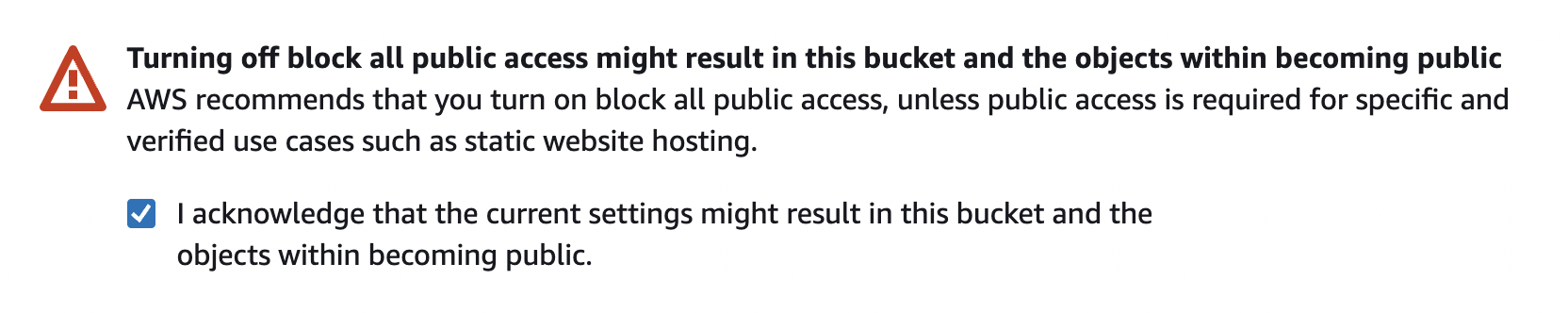

+ > Don't forget to check the box acknowledging that "*the current settings might result in this bucket and the objects within becoming public*".

+

+ * Click on "Create bucket".

+

> Your bucket is ready!
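If you prefer scripting this step to clicking through the console, the two settings above (a name and a region) map onto an S3 `CreateBucket` request. The sketch below is a minimal, hypothetical Node.js example: `isValidBucketName` and `createBucketParams` are our own helper names, the name check only covers part of the S3 bucket naming rules, and the code only builds the request object, which could then be sent with an S3 client such as the `aws-sdk` v2 package used by the removed code in this diff.

```javascript
// Hypothetical helpers (not part of the Steatite repository).

// Checks a subset of the S3 general-purpose bucket naming rules.
function isValidBucketName(name) {
  if (name.length < 3 || name.length > 63) return false;
  // Only lowercase letters, digits, dots and hyphens; must start and end with a letter or digit.
  if (!/^[a-z0-9][a-z0-9.-]*[a-z0-9]$/.test(name)) return false;
  // No two adjacent periods.
  if (name.includes("..")) return false;
  // Names formatted like an IP address are rejected.
  if (/^\d+\.\d+\.\d+\.\d+$/.test(name)) return false;
  return true;
}

// Builds the parameters of a CreateBucket request for the chosen name and region.
function createBucketParams(name, region) {
  if (!isValidBucketName(name)) {
    throw new Error(`Invalid bucket name: ${name}`);
  }
  const params = { Bucket: name };
  // us-east-1 is the default location: it must not be sent as a LocationConstraint.
  if (region !== "us-east-1") {
    params.CreateBucketConfiguration = { LocationConstraint: region };
  }
  return params;
}

console.log(createBucketParams("steatitebucket", "eu-west-3"));
```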

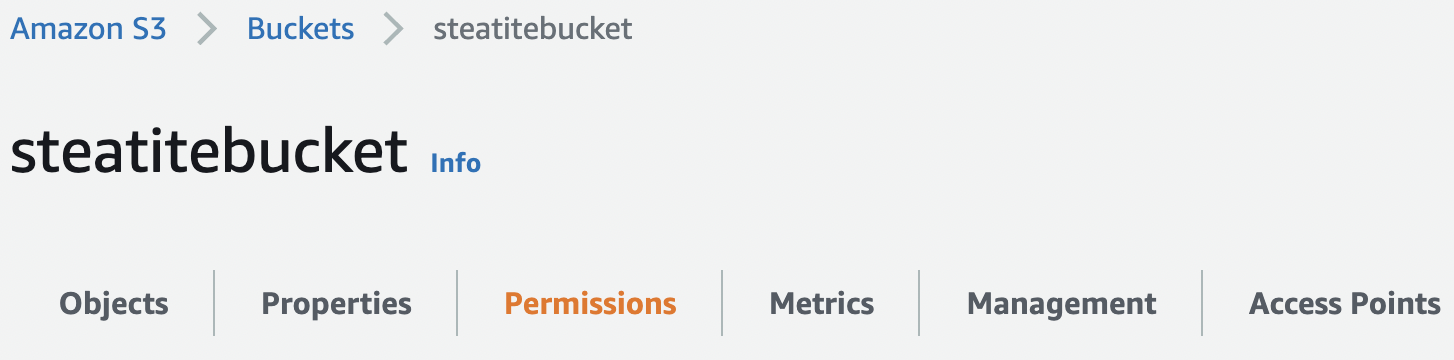

+##### **2. CHANGE BUCKET POLICY AND MAKE THE BUCKET PRIVATE.**

+

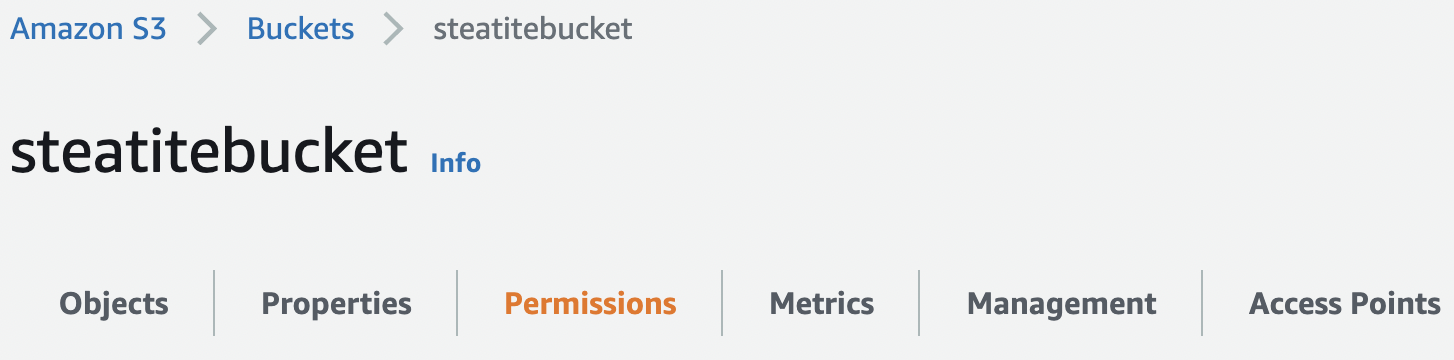

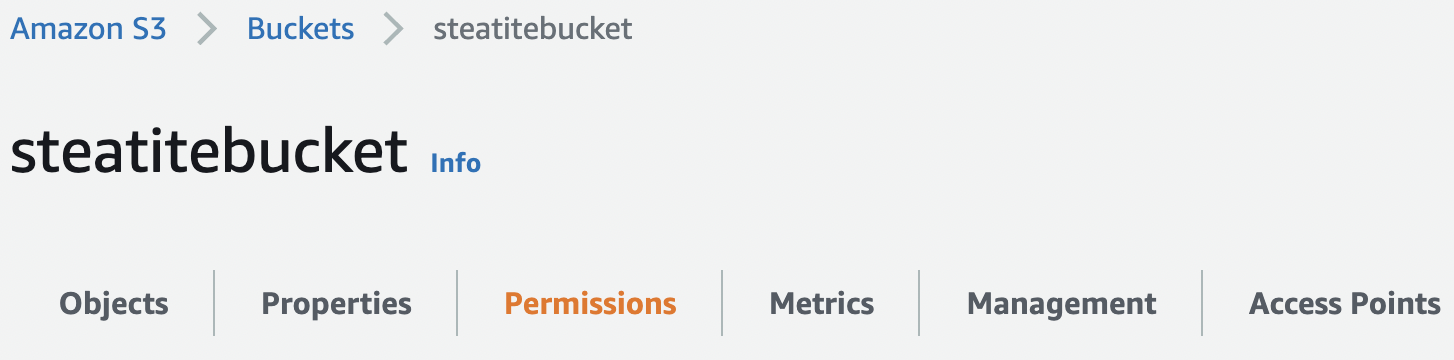

+* In your bucket list, access your bucket by clicking on its name.

+* Go to the "Permissions" tab.

+

+* Scroll down to the "Bucket policy" section and click on "Edit".

+* Paste the following policy into the policy editor:

+

+ ```json
+ {
+   "Version": "2012-10-17",
+   "Statement": [
+     {
+       "Effect": "Allow",
+       "Principal": "*",
+       "Action": [
+         "s3:PutObject",
+         "s3:PutObjectAcl",
+         "s3:GetObject",
+         "s3:GetObjectAcl",
+         "s3:DeleteObject"
+       ],
+       "Resource": "arn:aws:s3:::steatitebucket/*"
+     }
+   ]
+ }
+ ```

+ > Change the "Resource" with your bucket name if it's not the same as the exemple.

+* Click on "Save changes" :

+

+  +

+

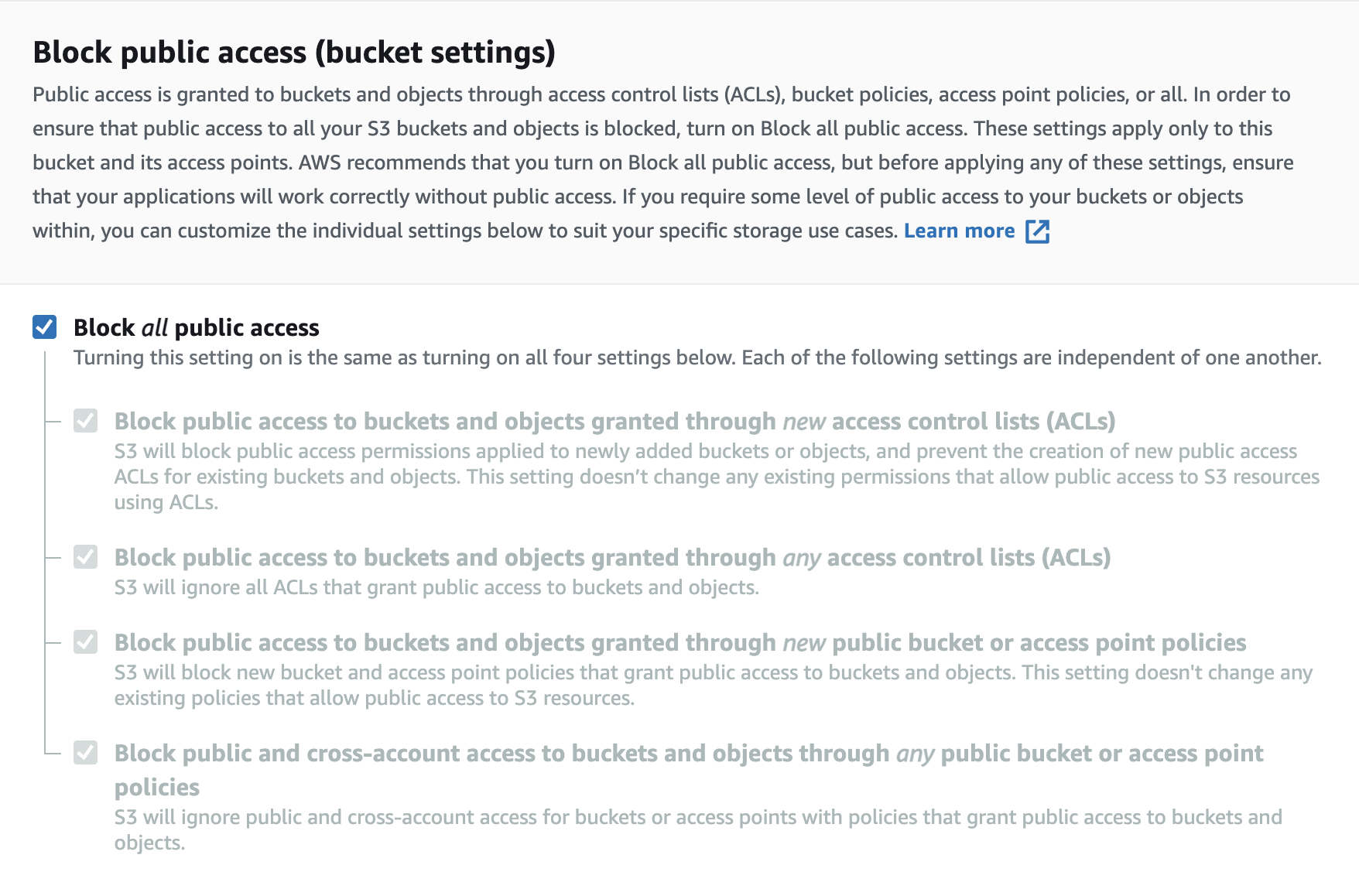

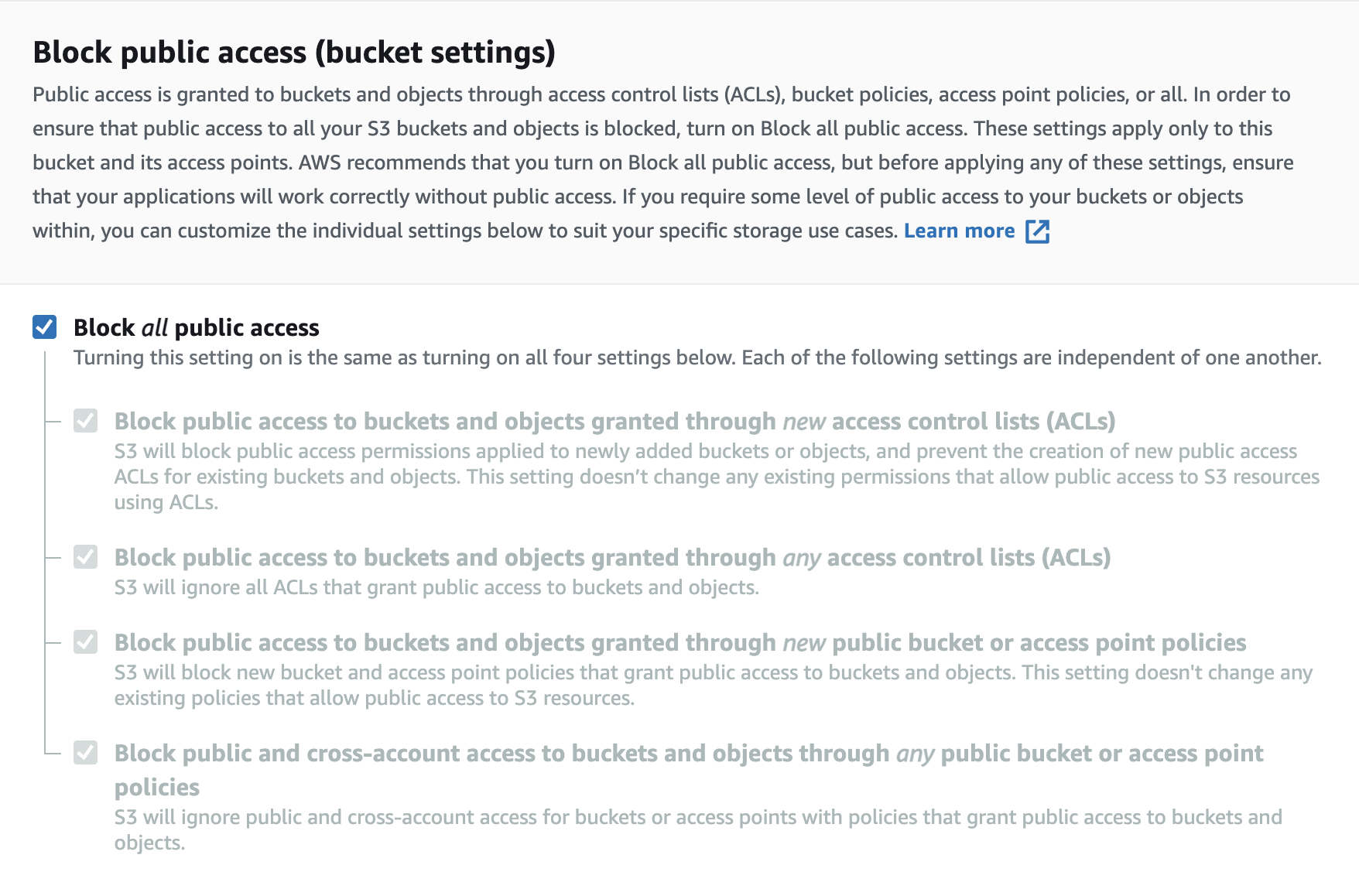

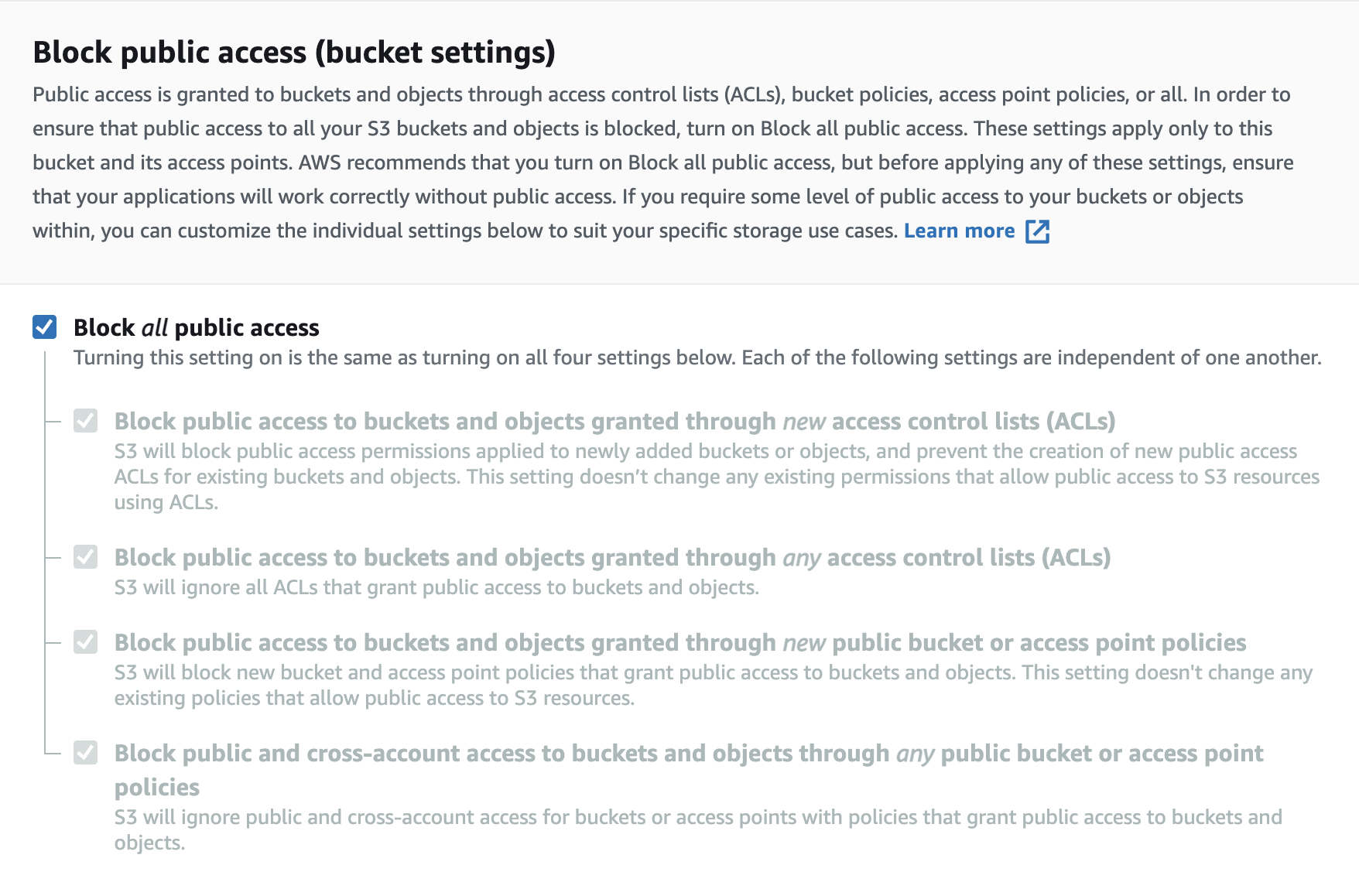

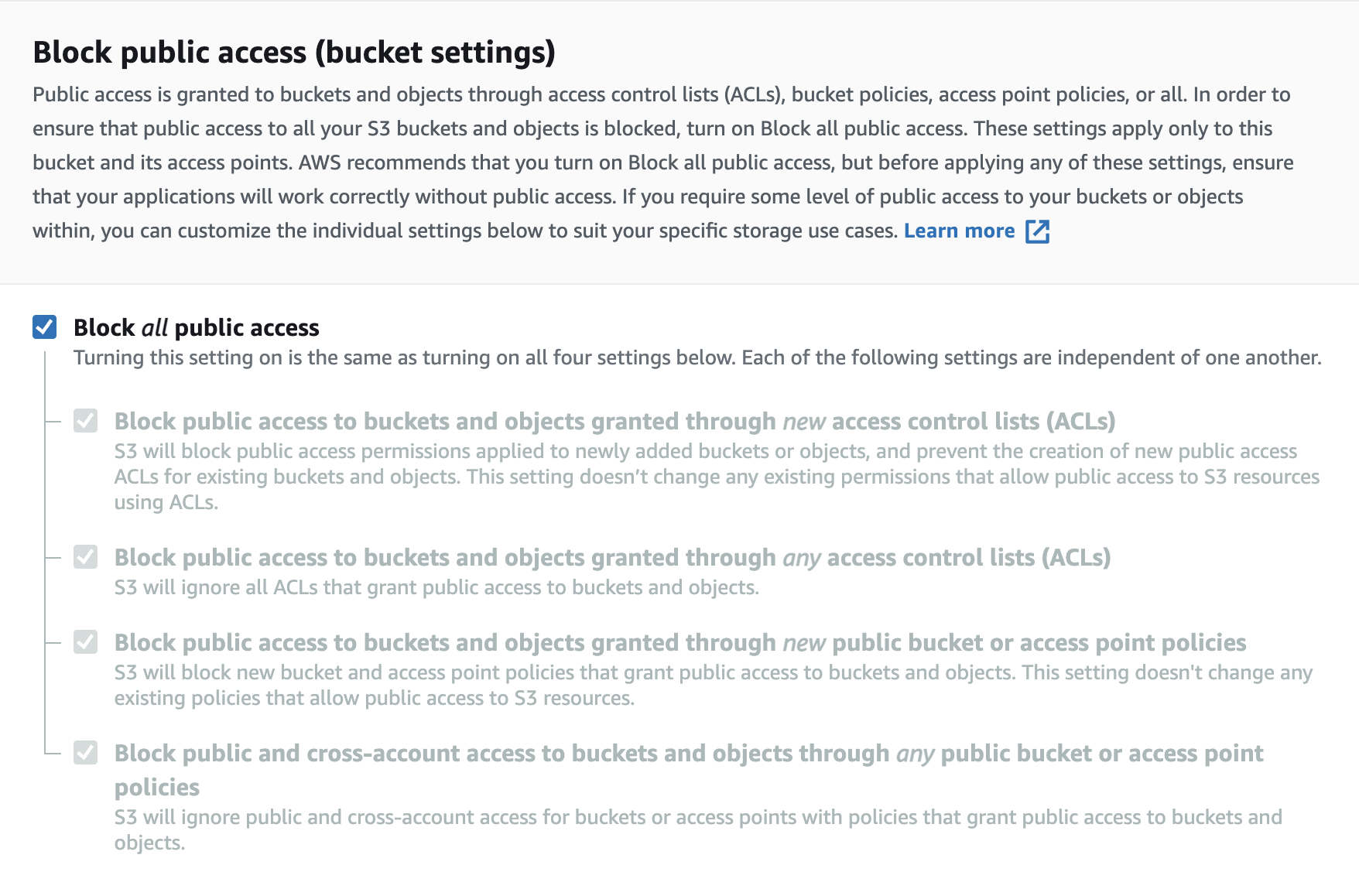

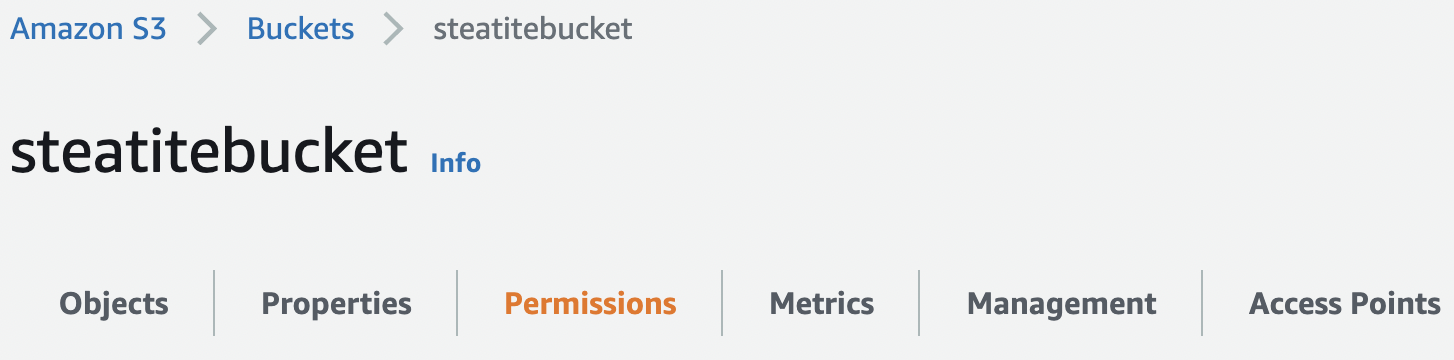

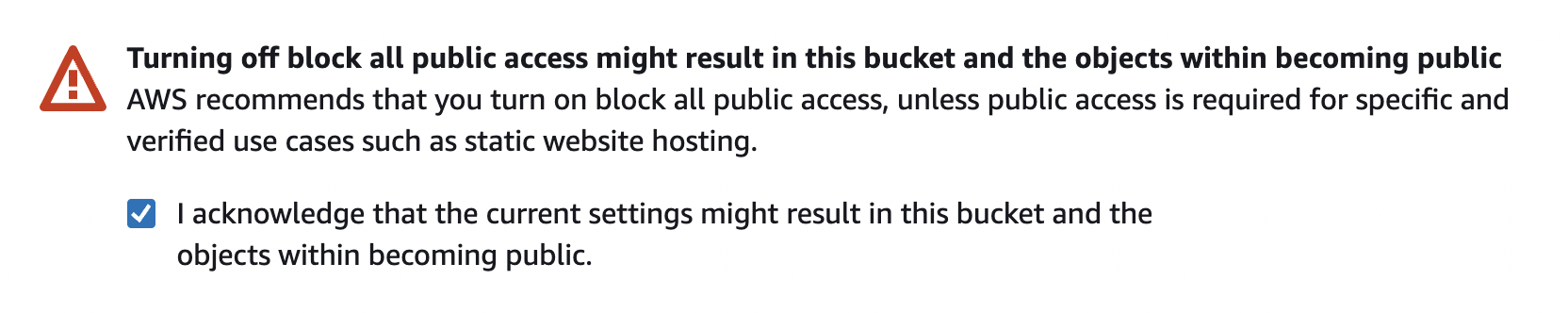

+* Scroll down to the "Block public access (bucket settings)" section and click on "Edit".

+* Check "Block all public access" to protect your bucket.

+

+* Click on "Save changes".

+* Confirm the modification.

+

+> Your bucket is now fully configured!

+
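The two console operations of this part correspond to the S3 `PutBucketPolicy` and `PutPublicAccessBlock` requests. As a hypothetical sketch (the helper names are ours), the code below builds both request objects from a bucket name, so the `Resource` ARN cannot be left pointing at the example bucket; the policy itself is the one given above.

```javascript
// Hypothetical helpers (not part of the Steatite repository).

// Same policy as above, with the bucket name injected into the Resource ARN.
function bucketPolicy(bucketName) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: "*",
        Action: ["s3:PutObject", "s3:PutObjectAcl", "s3:GetObject", "s3:GetObjectAcl", "s3:DeleteObject"],
        // "/*" makes the statement apply to every object in the bucket.
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// PutBucketPolicy expects the policy as a JSON string.
function putBucketPolicyParams(bucketName) {
  return { Bucket: bucketName, Policy: JSON.stringify(bucketPolicy(bucketName)) };
}

// The four settings behind the console's "Block all public access" checkbox.
function blockAllPublicAccessParams(bucketName) {
  return {
    Bucket: bucketName,
    PublicAccessBlockConfiguration: {
      BlockPublicAcls: true,
      IgnorePublicAcls: true,
      BlockPublicPolicy: true,
      RestrictPublicBuckets: true,
    },
  };
}
```

The order of the steps matters: while `BlockPublicPolicy` is enabled, S3 rejects a `PutBucketPolicy` request whose policy grants public access (as this one does with `"Principal": "*"`), which is why the policy is saved before public access is blocked again.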

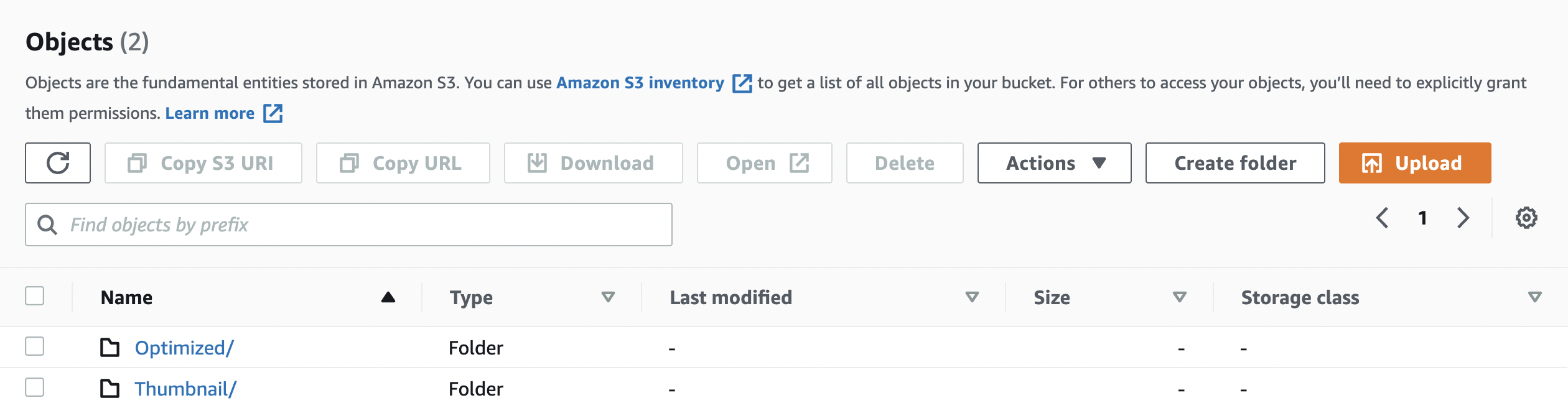

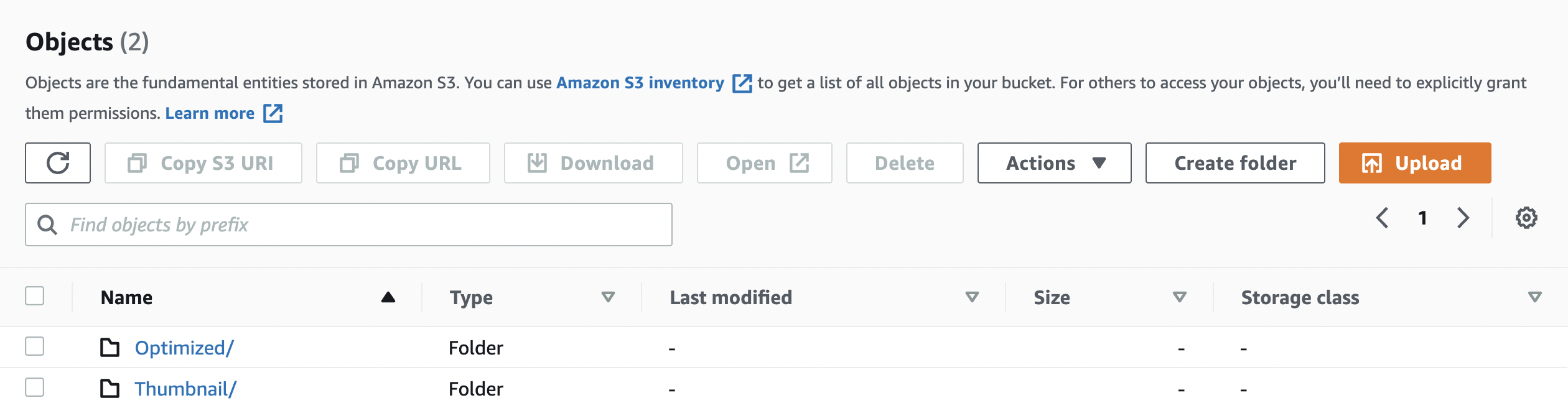

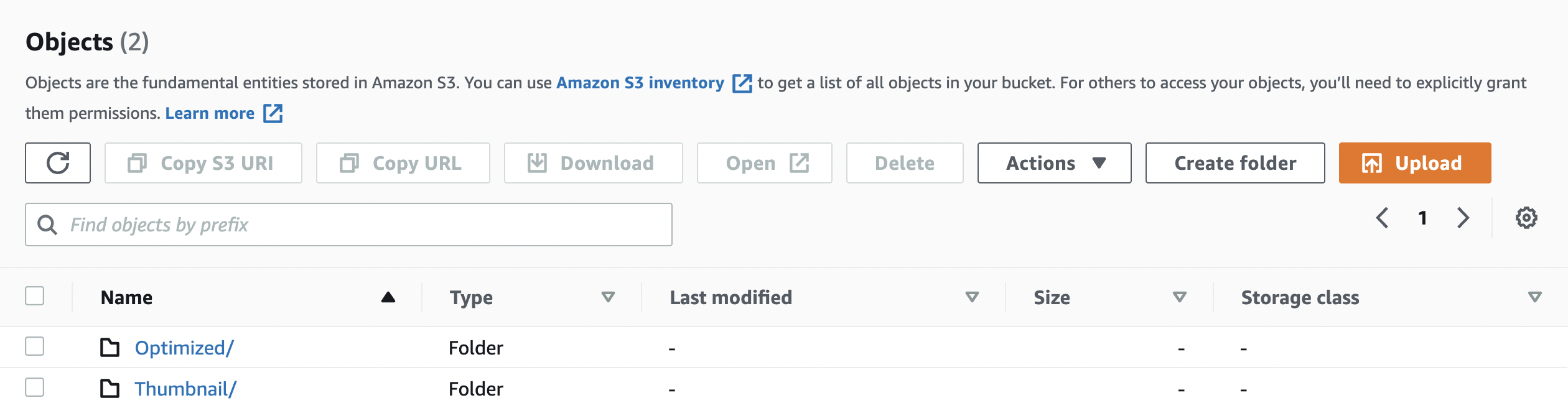

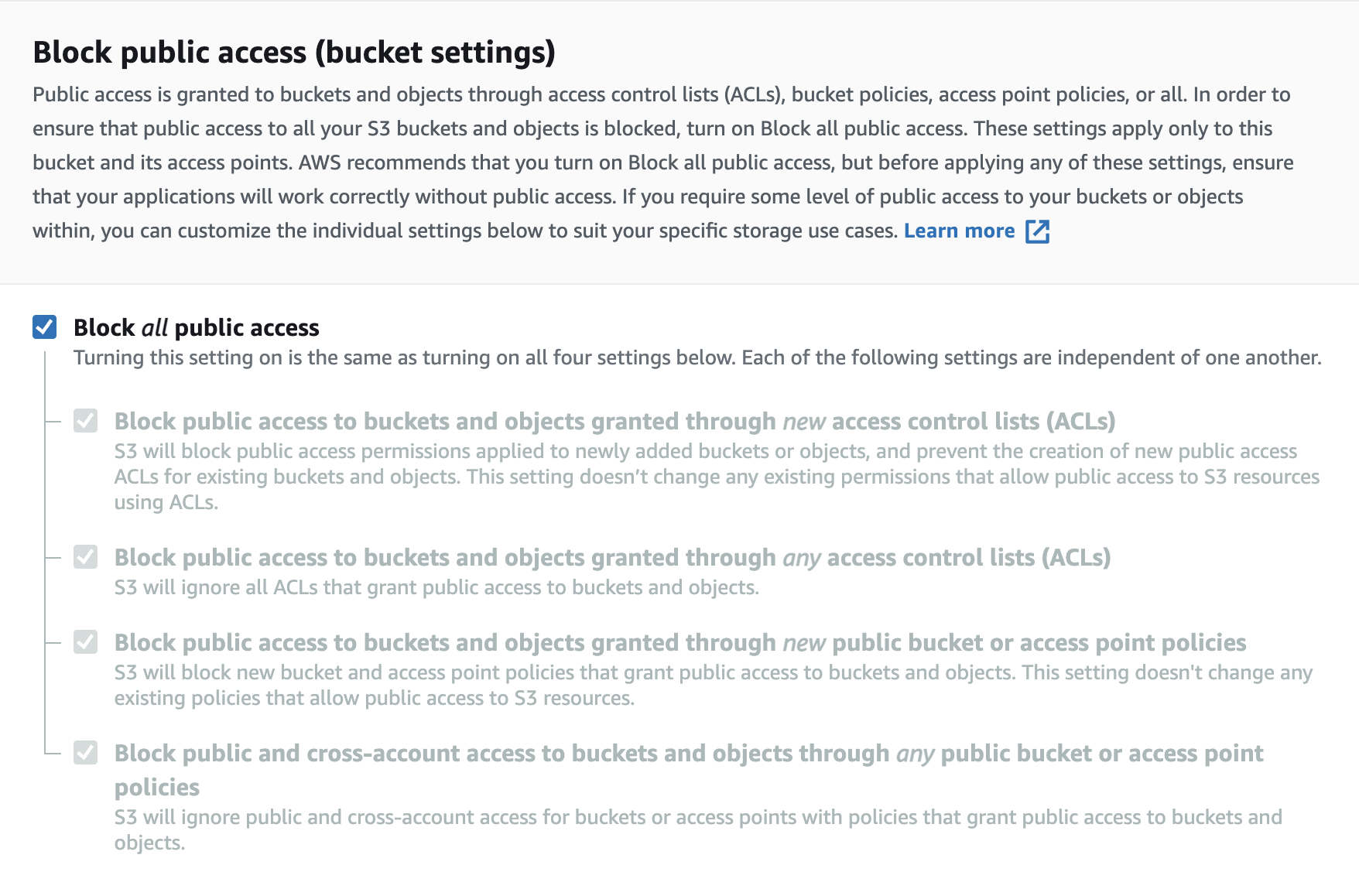

+##### **3. CREATE THE "THUMBNAIL" AND "OPTIMIZED" DEFAULT FOLDERS.**

+

+* Click on "Create folder".

+* Name it "_Thumbnail_".

+* Click on "Create folder" to validate the creation.

+* Do the same for the "_Optimized_" folder.

+* Your bucket should now contain the "_Thumbnail_" and "_Optimized_" folders.

+
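S3 has no real directories: the console represents a folder as a zero-byte object whose key ends with `/`. The hypothetical sketch below (the helper name is ours) builds the equivalent `PutObject` parameters for the two folders, assuming the example bucket name.

```javascript
// Hypothetical helper (not part of the Steatite repository).
// A console "folder" is an empty object whose key ends with "/".
function folderObjectParams(bucketName, folderName) {
  const key = folderName.endsWith("/") ? folderName : `${folderName}/`;
  return { Bucket: bucketName, Key: key, Body: "" };
}

const folderParams = ["Thumbnail", "Optimized"].map((folder) =>
  folderObjectParams("steatitebucket", folder)
);
console.log(folderParams.map((p) => p.Key)); // [ 'Thumbnail/', 'Optimized/' ]
```

Folder names are case-sensitive keys, so they must be spelled exactly "Thumbnail" and "Optimized".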

+---

#### **Lambda configuration**

##### *1. Put an item*

Create the lambda function:

From 5dac305cdd0612a8b9cc3eda5bf6ba82241d340e Mon Sep 17 00:00:00 2001

From: =?UTF-8?q?Desprez=20Aur=C3=A9lien?=

<45626870+aurelien45100@users.noreply.github.com>

Date: Mon, 20 Jun 2022 14:36:43 +0200

Subject: [PATCH 2/4] UPDATE: Update the INSTALL.md file

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Co-authored-by: @callistas

---

INSTALL.md | 749 +++++++++++++++++++++++++++--------------------------

1 file changed, 386 insertions(+), 363 deletions(-)

diff --git a/INSTALL.md b/INSTALL.md

index 6d1d3bf..339a9d0 100644

--- a/INSTALL.md

+++ b/INSTALL.md

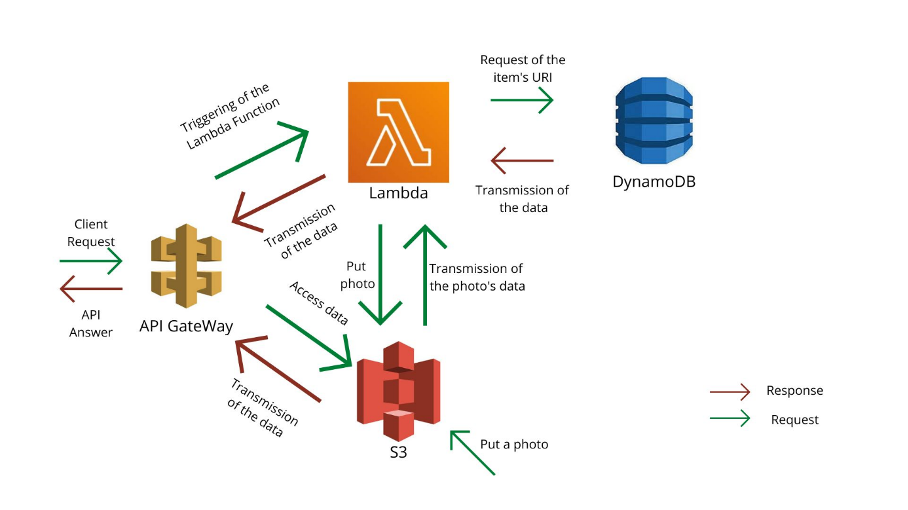

@@ -21,25 +21,30 @@ To better understand the links between the different entities, here is a schema

+---

### Configuration

> For all the configurations, we recommend using the AWS region closest to you (*in our case, eu-west-3 | Europe Paris*)

We are going to start by configuring all the AWS elements of the project and finish with the Lambda functions. Each Lambda function implements one functional feature.

-#### **S3 Configuration**

+---

+#### ***S3 Configuration***

For this project, S3 requires three configuration steps:

-1. Create an "S3" bucket.

+1. Create an "**S3**" bucket.

+

2. Change bucket policy and make the bucket private.

-3. Create the "Thumbnail" and "Optimized" default folder.

+3. Create the "**Thumbnail**" and "**Optimized**" default folders.

+---

##### **1. CREATE AN "S3" BUCKET**

* Go to your AWS console (https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3)

-* Click on "Create bucket" :

-

+* Click on "**Create bucket**".

* Configure your bucket:

* Give it a name (*for example "steatitebucket"*).

@@ -47,29 +52,35 @@ For this project, we need to do multiple steps for s3.

- * Uncheck the **Block Public Access settings for this bucket** in order to allow the modification of the **Bucket policy** after the creation of the bucket.

-

-

+ * Uncheck **Block Public Access settings for this bucket** so that the **Bucket policy** can be modified after the bucket is created.

+

> Don't forget to check the box acknowledging that "*the current settings might result in this bucket and the objects within becoming public*".

-

-

+

+ * Click on "**Create bucket**".

- * Click on "Create bucket" :

+

> Your bucket is ready!

-

+---

##### **2. CHANGE BUCKET POLICY AND MAKE THE BUCKET PRIVATE.**

* In your bucket list, access your bucket by clicking on its name.

-* Go to the "Permissions" tab.

+* Go to the "**Permissions**" tab.

-* Scroll down to the "Bucket policy" part and click on "Edit".

+

+* Scroll down to the "**Bucket policy**" section and click on "**Edit**".

* Paste the following policy into the policy editor:

```

@@ -91,17 +102,17 @@ For this project, we need to do multiple steps for s3.

]

}

```

- > Change the "Resource" with your bucket name if it's not the same as the exemple.

-* Click on "Save changes" :

+ > Replace the bucket name in "**Resource**" with your own if it differs from the example.

+* Click on "**Save changes**".

-* Scroll down to the "Block public access (bucket settings)" part and click on "Edit".

-* Check the "Block all public access" in order to protect your bucket.

+* Scroll down to the "**Block public access (bucket settings)**" section and click on "**Edit**".

+* Check "**Block all public access**" to protect your bucket.

-* Click on "Save changes" :

+* Click on "**Save changes**".

@@ -109,398 +120,410 @@ For this project, we need to do multiple steps for s3.

> Your bucket is now fully configured!

+---

+

##### **3. CREATE THE "THUMBNAIL" AND "OPTIMIZED" DEFAULT FOLDERS.**

-* Click on "Create folder".

-* Name it "_Thumbnail_".

-* Click on "Create folder" to validate the creation.

-* Make the same with the "_Optimized_" folder.

+* Click on "**Create folder**".

+* Name it "**_Thumbnail_**".

+* Click on "**Create folder**" to validate the creation.

+* Do the same for the "**_Optimized_**" folder.

* Your bucket should now contain the "**_Thumbnail_**" and "**_Optimized_**" folders.

+

---

-#### **Lambda configuration**

-##### *1. Put an item*

-Create the lambda function:

-* Go to the AWS console: https://eu-west-3.console.aws.amazon.com/lambda/home?region=eu-west-3#/functions

-* Click on "*Create function*"

-* Configure the function:

- * Click on "*Author from scratch*" to create it from scratch.

- * Name your function.

- * You want your code to be in node.js, you can choose the "*Node.js 14.x*" version, the one we chose.

- * Make sure that you are going to create a new role for your function under "*Change default execution role*".

- * Click on "*Create function*"

-

-Give the adequate role to your lambda function (in this case, we want our lambda function to be able to put an item in our database):

-* Go in your Lambda function.

-* Click on "*Configuration*".

-* Click on "*Permissions*".

-* Click on your role.

-You can now see the roles that your function has.

-* Click on create an online politic

-* Indicate that it is for DynamoDB and for the action put item.

-* Add an ARN to make sur that the function can only modify the database you’ve just created

-* Click on examine the strategy

-* Name your strategy

-* Click on create the strategy

-* Do the same for access to S3 with the action GetObject

-

-In the code section, add this and modify the name of the database and the URI of the bucket:

+#### ***Lambda configuration***

-```

-const AWS = require("aws-sdk")

-const S3 = new AWS.S3();

-// Connection to the table

-const DynamoDB = new AWS.DynamoDB();

-const tableName = "DYNAMODB_TABLE_NAME"

+All the Lambda functions are already implemented and available in the [Steatite GitHub repository](https://github.com/Hypertopic/Steatite/tree/v7/src).

-let crypto = require('crypto')

-let finalEtag = crypto.createHash('sha1')

+There are four functions:

+* HandleFileAddedInS3

+* getAttributes

+* getOptimizedPicture

+* getThumbnailOfImage

-exports.handler = (event) => {

- //gets the info from the triggered event (= put in S3 bucket) : bucket name and file name

+Each of them is explained later in its own part.

- const s3_object = event['Records'][0]['s3']

- const source_bucket_name = s3_object['bucket']['name']

- const file_name = s3_object['object']['key']

+To add a Lambda function, follow these steps:

+* Go to the [Lambda functions page](https://eu-west-3.console.aws.amazon.com/lambda/home?region=eu-west-3#/functions).

+* Click on "**Create function**".

- const paramsGetObject = {

- Bucket: source_bucket_name,

- Key: file_name

- }

+

+* Select "**Author from scratch**".

+* Name the function after one of the four functions listed above.

+* Choose "**Node.js 14.x**" as the runtime environment.

+* Under "**Permissions**", a basic execution role is created for the function in your [IAM environment](https://us-east-1.console.aws.amazon.com/iamv2/home?region=us-east-1#/roles). Its name looks like "*functionname-role-randomstring*".

+

+ * Each function needs different IAM permissions; they are detailed later in the part dedicated to each function.

+* Click on "**Create function**".

- S3.getObject(paramsGetObject, function(err, data) {

- if (err) {

- console.log("[ERROR : getObject] :", err, err.stack); // an error occurred

- }

- else {

- // if the object is added,creates the new item

- const file_content = data['Body']

- console.log(data)

- let etag = data['ETag'].replace(/"/gi, '')

-

- // create the final etag encrypted

- finalEtag.update(etag)

- etag = finalEtag.digest('hex')

-

- // set the creation date

- var creationDate = new Date().toISOString().slice(0, 10);

-

- const resource = "BUCKET_URI/" + file_name

- const paramsPutItem = {

- Item: {

- hash: {

- S: etag

- },

- resource: {

- S: resource

- },

- name: {

- S: file_name

- },

- created: {

- S: creationDate

- }

- },

- TableName: tableName

- }

- //Put the new item in DynamoDB

- DynamoDB.putItem(paramsPutItem, function(err, data) {

- if (err) {

- console.log("[ERROR : putItem] :", err, err.stack); // an error occurred

- }

- else {

- console.log("[SUCCESS] : item successfully added to the table ",tableName, " at the uri : ", resource)

- }

- })

- }

- })

-}

-```

+

+* You now have a new, empty function. To add the existing code:

+

+ * On GitHub, go to the `src/` folder, which contains all the functions.

+ * Click on the function you want to copy.

+ * Copy the URL of the function, for example *https://github.com/Hypertopic/Steatite/tree/v7/src/getAttributes*

+ * To easily create a zip archive of that folder, we use an external website: go to [GitZip](http://kinolien.github.io/gitzip/).

+

+ * Paste the URL in the bar at the top of the page.

+ * Click on "**Download**".

+ * The zip archive is now downloaded.

+ * Go back to your function page; you should be on the "**Code**" tab.

+ * Click on the "**Upload from**" button at the upper right.

+

+ * Click on "**.zip file**".

+ * Click on "**Upload**".

+ * Go to your zip location and select it.

+ * Click on "**Save**".

-* Click on "*Deploy*"

+> The function is now uploaded to Lambda. Some of the functions bundle Node.js modules, which makes their package too large to be displayed in the "**Code source**" section of the "**Code**" tab.

-Then, we can test it :

-* Click on the arrow on the button "*Test*".

-* Click on "*Create new event*".

-* Name your event.

-* Chose the S3 model and change the data according to your information (region, bucket name, object).

- Here is an example of a test (before doing this test, you should add an element in your S3 bucket):

+Repeat these steps for every function; the IAM permissions of each one are configured afterwards.
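For reference, the console steps above (name, runtime, execution role, uploaded zip) correspond to the fields of a Lambda `CreateFunction` request. The sketch below is hypothetical: the helper name is ours, and `index.handler` is an assumption about the entry point of the zipped code, which this guide does not state (check the handler in the "**Runtime settings**" of each function).

```javascript
// Hypothetical helper (not part of the Steatite repository).
const STEATITE_FUNCTIONS = [
  "HandleFileAddedInS3",
  "getAttributes",
  "getOptimizedPicture",
  "getThumbnailOfImage",
];

function createFunctionParams(functionName, roleArn, zipBuffer) {
  // The function must be named after one of the four functions of the repository.
  if (!STEATITE_FUNCTIONS.includes(functionName)) {
    throw new Error(`Unknown Steatite function: ${functionName}`);
  }
  return {
    FunctionName: functionName,
    Runtime: "nodejs14.x",        // the runtime chosen in the console
    Role: roleArn,                // the "functionname-role-randomstring" execution role
    Handler: "index.handler",     // ASSUMPTION: entry file and exported handler name
    Code: { ZipFile: zipBuffer }, // contents of the zip downloaded from GitZip
  };
}
```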

-```

+##### ***Adding environment variables***

+

+Some of the functions read their configuration from environment variables, so that resource names are not hard-coded and the code never has to be edited.

+

+For each of the following functions:

+1. Go to the function in Lambda.

+2. Go to the "**Configuration**" tab.

+3. In "**Environment variables**", click on "**Edit**".

+4. Add the variables listed below.

+5. Click on "**Save**".

+

+**HandleFileAddedInS3**

+

+* **Key:** `DynamoDBTableName`; **Value:** the name of your DynamoDB table.

+

+**getAttributes**

+

+* **Key:** `DynamoDBTableName`; **Value:** the name of your DynamoDB table.

+

+**getOptimizedPicture**

+

+* **Key:** `DynamoDBTableName`; **Value:** the name of your DynamoDB table.

+* **Key:** `S3BucketName`; **Value:** the name of your S3 bucket.

+

+**getThumbnailOfImage**

+

+* **Key:** `DynamoDBTableName`; **Value:** the name of your DynamoDB table.

+* **Key:** `S3BucketName`; **Value:** the name of your S3 bucket.

+
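Inside a Node.js Lambda function, environment variables are exposed through `process.env`. How the repository's functions read them is not shown in this guide, so the sketch below is only a hypothetical illustration (the helper names are ours) of a fail-fast check that reports a forgotten variable with a clear error.

```javascript
// Hypothetical helpers (not part of the Steatite repository).

// Variables expected by each function, as listed above.
const REQUIRED_ENV = {
  HandleFileAddedInS3: ["DynamoDBTableName"],
  getAttributes: ["DynamoDBTableName"],
  getOptimizedPicture: ["DynamoDBTableName", "S3BucketName"],
  getThumbnailOfImage: ["DynamoDBTableName", "S3BucketName"],
};

// Returns the configuration of a function, or throws if a variable is missing or empty.
function loadConfig(functionName, env = process.env) {
  const config = {};
  for (const key of REQUIRED_ENV[functionName]) {
    if (!env[key]) {
      throw new Error(`${functionName}: environment variable ${key} is not set`);
    }
    config[key] = env[key];
  }
  return config;
}
```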

+---

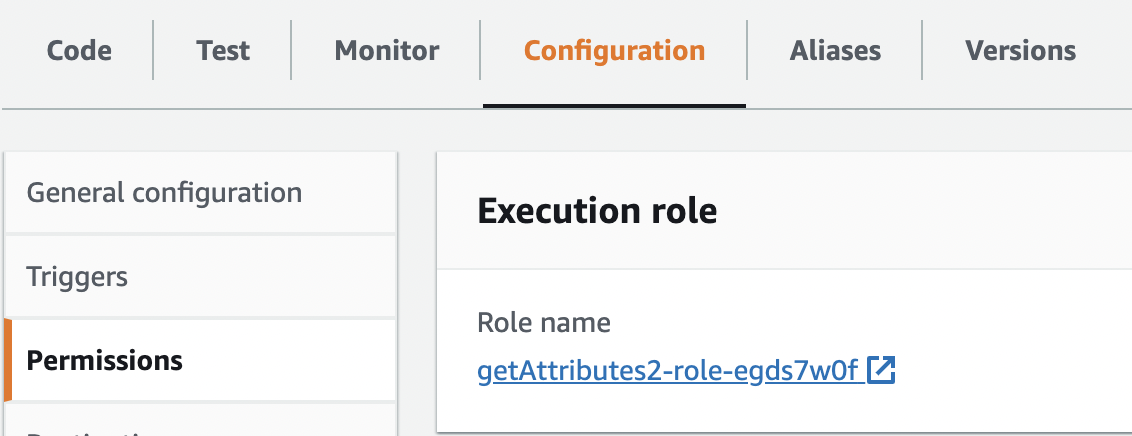

+##### ***IAM PERMISSIONS***

+

+To open the IAM role of a specific function, either:

+* Go to the [IAM roles page](https://us-east-1.console.aws.amazon.com/iamv2/home#/roles).

+* Click on your role name.

+

+**OR**

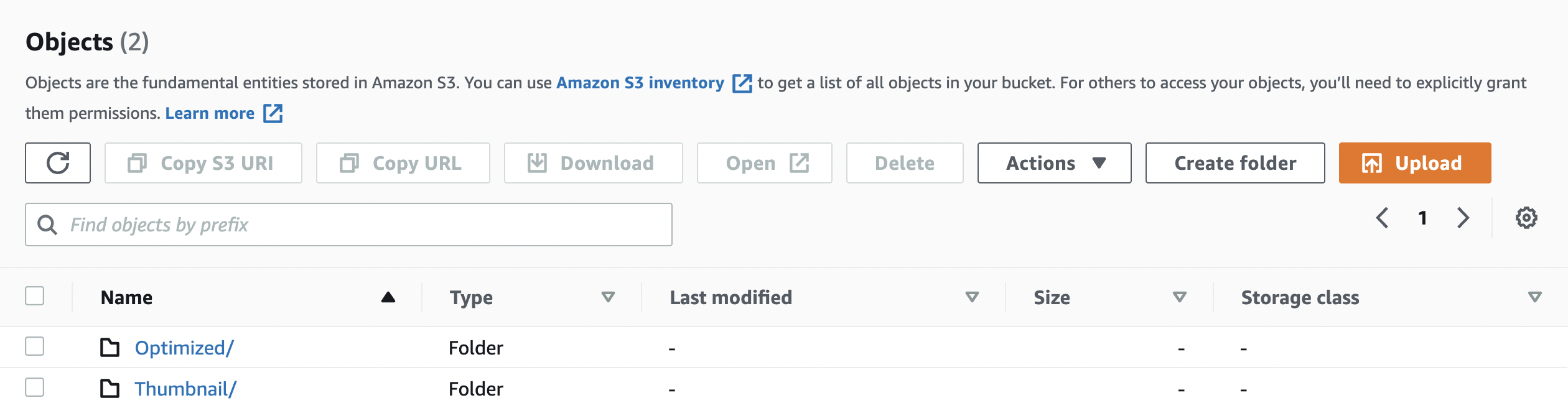

+* Directly access to your role via your Lambda function page.

+

+ * Go to the "**Configuration**" tab.

+ * Click on "**Permissions**" on the left tab.

+ * In the "**Execution role**", click on your role name.

+

+> You are now on your role page, where the permission policies attached to the role are listed.

+You should normally see a default permission called ***AWSLambdaBasicExecutionRole***.

+

+To add a permission to a role:

+

+* Click on the "**Add permissions**" button at the upper right.

+* Select "**Attach policies**".

+* Filter by policy name and select one or more policies.

+* Once your selection is complete, click on the "**Attach policies**" button at the lower right.

+* Your policies are now attached to your role.

+

+**HandleFileAddedInS3**

+

+This function needs:

+

+* Full access to S3: ***AmazonS3FullAccess***

+* Full access to DynamoDB: ***AmazonDynamoDBFullAccess***

+

+**getAttributes**

+

+This function needs:

+

+* Full access to S3: ***AmazonS3FullAccess***

+* Full access to DynamoDB: ***AmazonDynamoDBFullAccess***

+

+**getOptimizedPicture**

+

+This function needs:

+

+* Full access to S3: ***AmazonS3FullAccess***

+* Full access to DynamoDB: ***AmazonDynamoDBFullAccess***

+

+**getThumbnailOfImage**

+

+This function needs:

+

+* Full access to S3: ***AmazonS3FullAccess***

+* Full access to DynamoDB: ***AmazonDynamoDBFullAccess***

+
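The same attachments can be expressed as IAM `AttachRolePolicy` requests, one per managed policy. The hypothetical sketch below (the helper names are ours) lists the ARNs of the managed policies named above for each function and builds those requests for a role name such as `functionname-role-randomstring`.

```javascript
// Hypothetical helpers (not part of the Steatite repository).
// ARNs of the AWS managed policies named above.
const S3_FULL_ACCESS = "arn:aws:iam::aws:policy/AmazonS3FullAccess";
const DYNAMODB_FULL_ACCESS = "arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess";

const MANAGED_POLICIES = {
  HandleFileAddedInS3: [S3_FULL_ACCESS, DYNAMODB_FULL_ACCESS],
  getAttributes: [S3_FULL_ACCESS, DYNAMODB_FULL_ACCESS],
  getOptimizedPicture: [S3_FULL_ACCESS, DYNAMODB_FULL_ACCESS],
  getThumbnailOfImage: [S3_FULL_ACCESS, DYNAMODB_FULL_ACCESS],
};

// One AttachRolePolicy request per policy to attach to the function's execution role.
function attachRolePolicyRequests(functionName, roleName) {
  return MANAGED_POLICIES[functionName].map((policyArn) => ({
    RoleName: roleName,
    PolicyArn: policyArn,
  }));
}
```

Full-access managed policies keep the setup short; a custom policy limited to your table and bucket ARNs would grant each function less.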

+---

+#### ***DynamoDB configuration***

+

+In this part, we create and configure the table that stores the data used by the Lambda functions.

+

+1. Go to the "**Tables**" page at this [link](https://eu-west-3.console.aws.amazon.com/dynamodbv2/home?region=eu-west-3#tables).

+2. Click on "**Create table**".

+3. Enter any name for the table; it is the value of the `DynamoDBTableName` environment variable.

+4. Enter "**hash**" as the partition key and keep the default type, *String*.

+5. Keep the default settings and click on "**Create table**".

+6. The table is available once its status changes to "**Active**"; this can take a few seconds.
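The table has a single String partition key named `hash`. As a hypothetical sketch (the helper names are ours), here are the equivalent DynamoDB `CreateTable` request and a `GetItem` request by hash, the same lookup as the removed Get-an-item code in this diff. `PAY_PER_REQUEST` (on-demand) billing is an assumption: the console's default settings may use provisioned capacity instead.

```javascript
// Hypothetical helpers (not part of the Steatite repository).

// Equivalent of the console steps: one partition key "hash" of type String ("S").
function createTableParams(tableName) {
  return {
    TableName: tableName,
    AttributeDefinitions: [{ AttributeName: "hash", AttributeType: "S" }],
    KeySchema: [{ AttributeName: "hash", KeyType: "HASH" }], // HASH = partition key
    BillingMode: "PAY_PER_REQUEST", // ASSUMPTION: on-demand capacity
  };
}

// Looks an item up by its hash, in the low-level attribute-value format.
function getItemParams(tableName, hash) {
  return { TableName: tableName, Key: { hash: { S: hash } } };
}
```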

+---

+#### ***API Gateway configuration***

+

+Here we configure the API calls. To make the configuration easier, we import them from a Swagger 2.0 JSON file:

+

+```json

{

- "Records": [

- {

- "eventVersion": "2.0",

- "eventSource": "aws:s3",

- "awsRegion": "eu-west-3",

- "eventTime": "1970-01-01T00:00:00.000Z",

- "eventName": "ObjectCreated:Put",

- "userIdentity": {

- "principalId": "EXAMPLE"

- },

- "requestParameters": {

- "sourceIPAddress": "127.0.0.1"

- },

- "responseElements": {

- "x-amz-request-id": "EXAMPLE123456789",

- "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"

- },

- "s3": {

- "s3SchemaVersion": "1.0",

- "configurationId": "testConfigRule",

- "bucket": {

- "name": "BUCKET_NAME",

- "ownerIdentity": {

- "principalId": "EXAMPLE"

- },

- "arn": "arn:aws:s3:::BUCKET_NAME"

- },

- "object": {

- "key": "ADDED_OBJECT’S_ NAME “,

- “size”: 1024,

- “eTag”: “0123456789abcdef0123456789abcdef”,

- “sequencer”: “0A1B2C3D4E5F678901”

+ "swagger" : "2.0",

+ "info" : {

+ "version" : "2022-06-15T16:37:00Z",

+ "title" : "Steatite"

+ },

+ "host" : "rzi0pem2eh.execute-api.eu-west-3.amazonaws.com",

+ "basePath" : "/Steatite",

+ "schemes" : [ "https" ],

+ "paths" : {

+ "/item/{corpus}/{hash+}" : {

+ "get" : {

+ "produces" : [ "application/json" ],

+ "parameters" : [ {

+ "name" : "corpus",

+ "in" : "path",

+ "required" : true,

+ "type" : "string"

+ }, {

+ "name" : "hash",

+ "in" : "path",

+ "required" : true,

+ "type" : "string"

+ } ],

+ "responses" : {

+ "200" : {

+ "description" : "200 response",

+ "schema" : {

+ "$ref" : "#/definitions/Empty"

+ }

+ }

+ }

+ }

+ },

+ "/optimized/{hash+}" : {

+ "x-amazon-apigateway-any-method" : {

+ "produces" : [ "image/jpeg" ],

+ "parameters" : [ {

+ "name" : "hash",

+ "in" : "path",

+ "required" : true,

+ "type" : "string"

+ } ],

+ "responses" : {

+ "200" : {

+ "description" : "200 response",

+ "schema" : {

+ "$ref" : "#/definitions/ImageJPEG"

+ },

+ "headers" : {

+ "Content-Type" : {

+ "type" : "string"

+ }

+ }

+ }

+ }

+ }

+ },

+ "/thumbnail/{hash+}" : {

+ "x-amazon-apigateway-any-method" : {

+ "produces" : [ "image/jpeg" ],

+ "parameters" : [ {

+ "name" : "hash",

+ "in" : "path",

+ "required" : true,

+ "type" : "string"

+ } ],

+ "responses" : {

+ "200" : {

+ "description" : "200 response",

+ "schema" : {

+ "$ref" : "#/definitions/ImageJPEG"

+ },

+ "headers" : {

+ "Content-Type" : {

+ "type" : "string"

+ }

+ }

+ }

}

}

}

- ]

+ },

+ "definitions" : {

+ "Empty" : {

+ "type" : "object",

+ "title" : "Empty Schema"

+ },

+ "ImageJPEG" : { }

+ }

}

```

-Now, make sure that the element is well added into the database when you upload an element into your S3 bucket.

+1. On your computer, create a file called "*Steatite-swagger.json*" and paste the code above into it.

+2. Go to the [API Gateway page](https://eu-west-3.console.aws.amazon.com/apigateway/main/apis?region=eu-west-3).

+3. Click on "**Create API**".

+4. In the list of API types, choose "**REST API**" (be careful **not** to choose "**REST API Private**") and click on its "**Import**" button.

+5. Click on "**Select Swagger File**".

+6. Select the file you created called "*Steatite-swagger.json*".

+7. Click on "**Import**".
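Before importing, it can help to check the Swagger file for a classic mistake: a `{param}` or greedy `{param+}` variable in a path that an operation does not declare as a path parameter. The function below is a hypothetical sketch (not an API Gateway tool) that walks the `paths` object of a Swagger 2.0 document and lists such variables; it ignores path-level `parameters` lists, which the file above does not use.

```javascript
// Hypothetical helper (not part of the Steatite repository or of API Gateway).
// Lists path variables ({name} or greedy {name+}) that an operation does not declare.
function undeclaredPathParams(swagger) {
  const problems = [];
  for (const [path, operations] of Object.entries(swagger.paths)) {
    const variables = [...path.matchAll(/\{(\w+)\+?\}/g)].map((match) => match[1]);
    for (const [method, operation] of Object.entries(operations)) {
      if (method === "parameters") continue; // path-level parameters are not handled here
      const declared = (operation.parameters || [])
        .filter((param) => param.in === "path")
        .map((param) => param.name);
      for (const variable of variables) {
        if (!declared.includes(variable)) {
          problems.push(`${method} ${path}: {${variable}} is not declared`);
        }
      }
    }
  }
  return problems;
}
```

For example, `undeclaredPathParams(JSON.parse(require("fs").readFileSync("Steatite-swagger.json", "utf8")))` should return an empty array for the file above, since every path variable of its three routes is declared.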

-##### *2. Get an item*

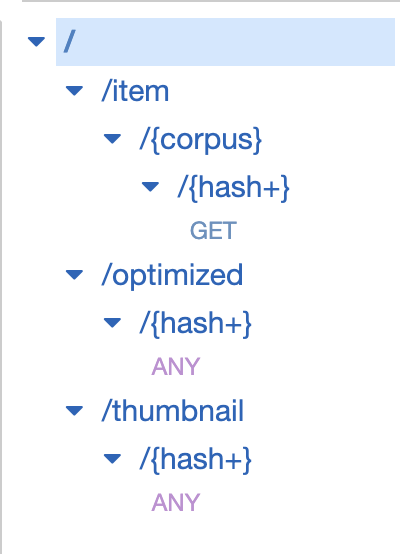

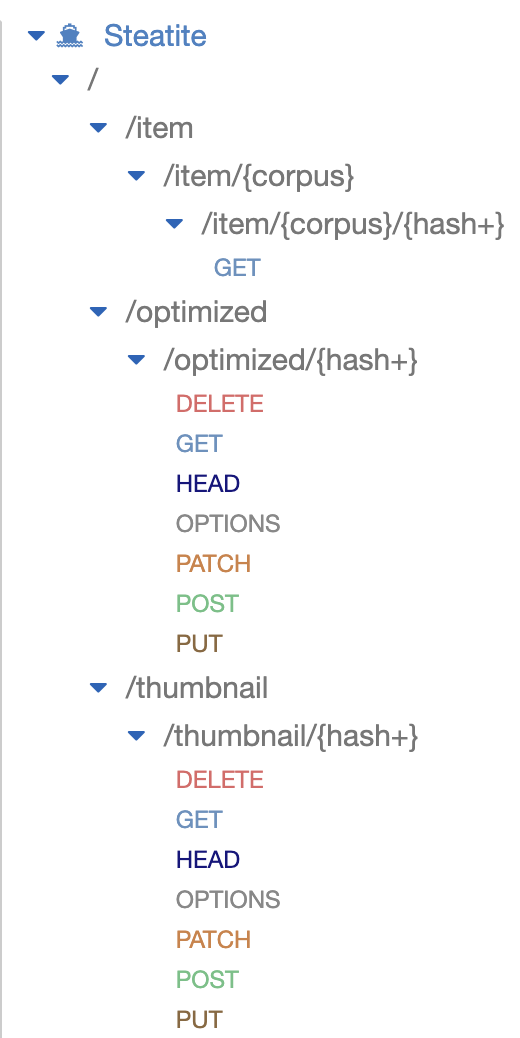

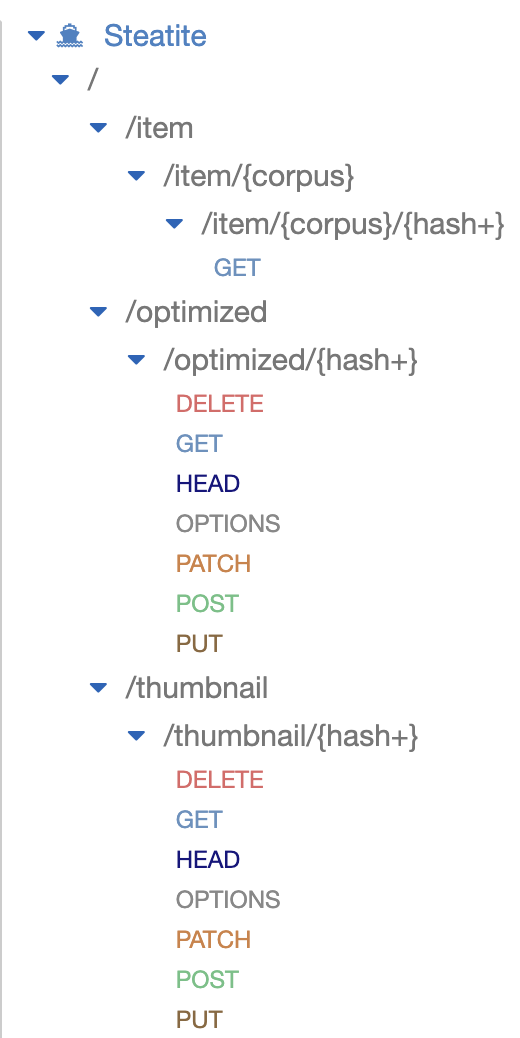

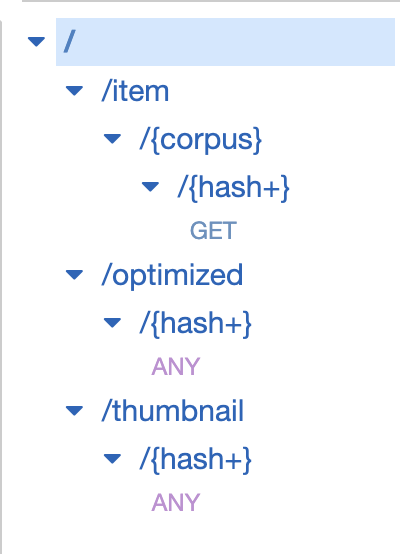

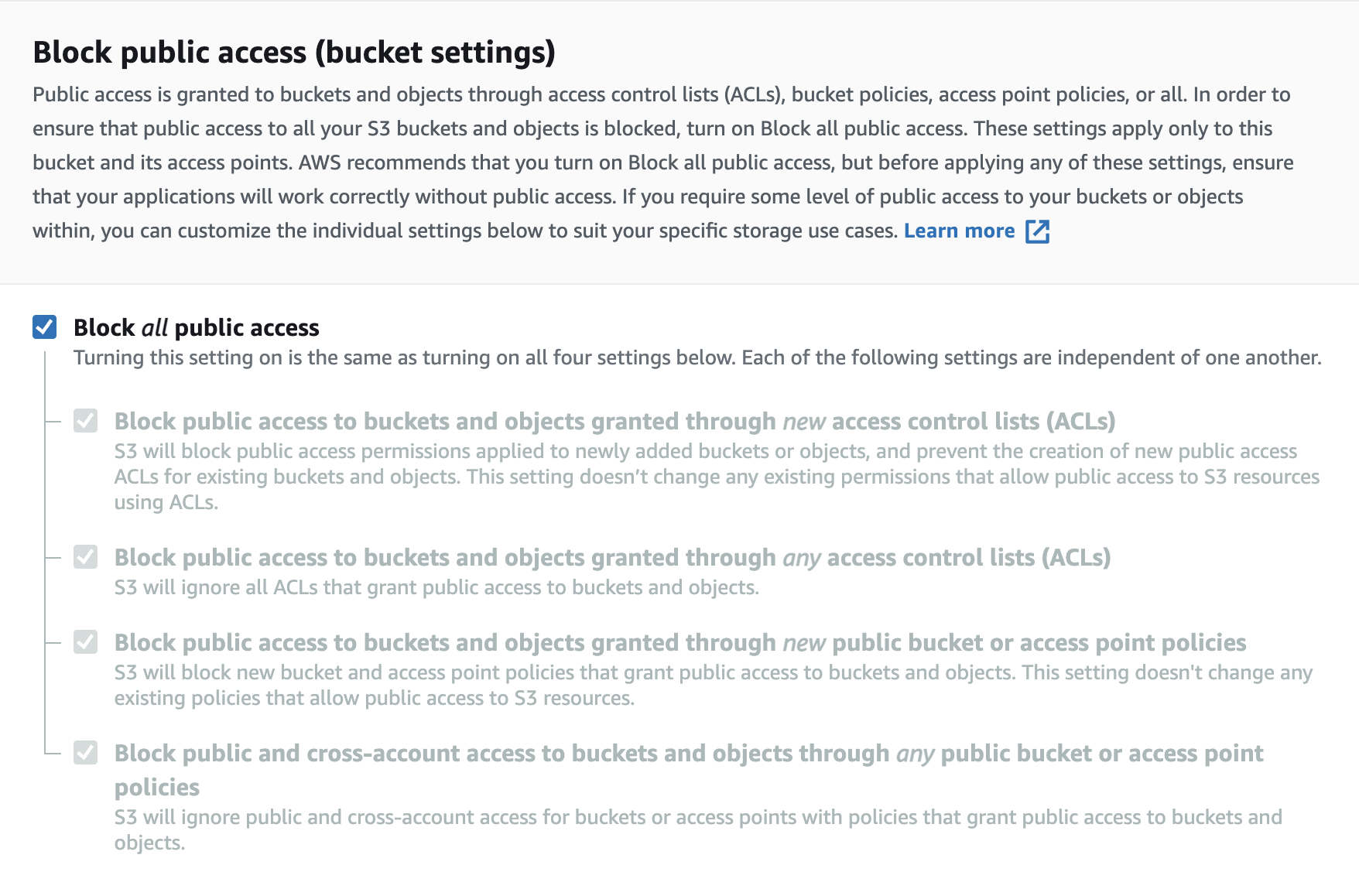

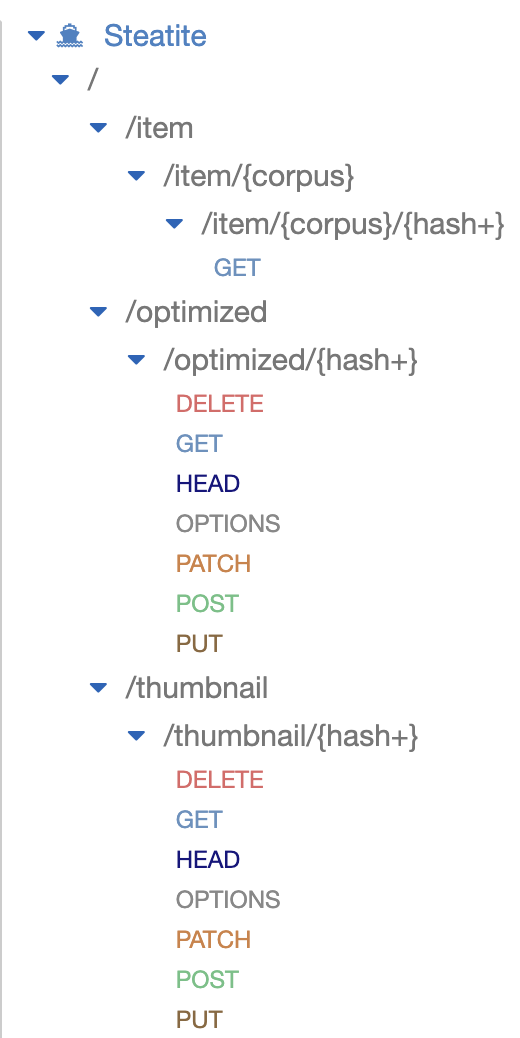

+You now have this view :

+

+  +

+

+We are now going to configure each of the API calls.

-Create the lambda function:

-* Go to the AWS console: https://eu-west-3.console.aws.amazon.com/lambda/home?region=eu-west-3#/functions

-* Click on "*Create function*"

-* Configure the function:

- * Click on "*Author from scratch*" to create it from scratch.

- * Name your function.

- * You want your code to be in node.js, you can choose the "*Node.js 14.x*" version, the one we chose.

- * Make sure that you are going to create a new role for your function under "*Change default execution role*".

- * Click on "*Create function*"

+**/item/{corpus}/{hash+}**

-

+1. Click on "**GET**".

+2. In the Lambda Function input, write "**getAttributes**" and select it.

+3. Click on "**Ok**" to give API Gateway permission to invoke the Lambda function.

-Give the adequate role to your lambda function (in this case, we want our lambda function to be able to put an item in our database):

-* Go in your Lambda function.

-* Click on "*Configuration*".

-* Click on "*Permissions*".

-* Click on your role.

+**/optimized/{hash+}**

+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getOptimizedPicture**" and select it.

+3. Click on "**Ok**" to give API Gateway permission to invoke the Lambda function.

-You can now see the roles that your function has.

-* Click on create an online politic

-* Indicate that it is for DynamoDB and for the action get item.

-* Add an ARN to make sur that the function can only modify the database you’ve just created.

-* Click on examine the strategy

-* Name your strategy

-* Click on create the strategy

-* Do the same for access to S3 with the action GetObject

+**/thumbnail/{hash+}**

-In the code section, add this and modify the name of the database:

+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getThumbnailOfImage**" and select it.

+3. Click on "**Ok**" to give API Gateway permission to invoke the Lambda function.
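If the methods use Lambda proxy integration (the removed Get-an-item code in this diff read `event['pathParameters']['hash']`, which relies on it), API Gateway passes the path variables in `event.pathParameters`, with a greedy variable such as `{hash+}` available under its name without the `+`. The hypothetical sketch below (the helper name is ours) extracts them for `/item/{corpus}/{hash+}` and returns a 400 proxy response when one is missing.

```javascript
// Hypothetical helper (not part of the Steatite repository).
// Reads the path variables of /item/{corpus}/{hash+} from a Lambda proxy integration event.
function parseItemPath(event) {
  const params = event.pathParameters || {};
  if (!params.corpus || !params.hash) {
    // Proxy integration responses carry a statusCode, optional headers and a string body.
    return {
      response: {
        statusCode: 400,
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ message: "corpus and hash are required" }),
      },
    };
  }
  return { corpus: params.corpus, hash: params.hash };
}
```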

-```

-const AWS = require("aws-sdk");

-const S3 = new AWS.S3();

-var DynamoDB = new AWS.DynamoDB();

-const tableName = "TABLE_NAME";

-let responseObject = {};

-

-exports.lambda_handler = async (event, context) => {

- // 1. Parse out query string parameters

- console.log("event : ",event)

- console.log("context : ",context)

- const imageEtag = event['pathParameters']['hash']

-

- console.log("********** imageEtag **********");

- console.log(imageEtag);

-

- // 2. Get the information from DynamoDB

- let paramsGetItem = {

- TableName: tableName,

- Key: {

- "hash" : {

- "S" : imageEtag

- }

- }

- };

-

- let result = await DynamoDB.getItem(paramsGetItem, (err, data) => {

- if (err) {

- console.log("[ERROR : getItem] :",err, err.stack); // an error occurred

- responseObject['statusCode'] = 404

- responseObject['headers'] = {}

- responseObject['headers']['Content-Type'] = 'application/json'

- responseObject['body'] = "Picture with hash "+imageEtag+" not found"

-

- return responseObject

- }

- else {

- console.log("********** imageInfo **********");

- // console.log(imageInfo)

- console.log(data);

- // Construct the body of the response object

- let imageResponse = {}

- imageResponse['hash'] = imageEtag;

- imageResponse['resource'] = data['Item']['resource']

-

- const uriImage = data['Item']['resource']['S']

- console.log(uriImage)

-

- const htmlResponse =

- `

-

-

-

- `+imageEtag+`

-

-

-

-  -

-

-

- `

-

- // 4. Construct http repsonse object

- responseObject['statusCode'] = 200

- responseObject['headers'] = {}

- responseObject['headers']['Content-Type'] = 'text/html'

- responseObject['body'] = htmlResponse

-

- //5. Return the response object

- console.log("****** response *******")

- console.log(responseObject)

- //return responseObject;

- return responseObject

-

- }

- }).promise();

-

- return responseObject;

-};

-```

+> Now that all the functions are configured, we can deploy our API.

-In the API gateway :

-* Create a GET method on the Hash resource : check that it is a proxy from a lambda function, the region and add the lambda function.

-* Click on "*Action*" > "*Deploy*" the API and add a name.

-* Click on "*Deploy*"

+1. Click on "**Actions**".

+2. In the "**API ACTIONS**" tab, click on "**Deploy API**".

+3. In "**Deployment stage**", select "**[New stage]**".

+4. In "**Stage name**", enter any name; "**Steatite**" works well.

+5. Click on "**Deploy**".

-![deploy_api]()

+> The API should now be deployed.
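Once deployed, a stage is reachable at an invoke URL of the form `https://{restApiId}.execute-api.{region}.amazonaws.com/{stageName}`, shown on the stage page. The hypothetical sketch below (the helper names are ours, and `abc123defg` is a placeholder API id) builds the URLs of the three routes defined in the Swagger file.

```javascript
// Hypothetical helpers (not part of the Steatite repository).
function stageBaseUrl(restApiId, region, stageName) {
  return `https://${restApiId}.execute-api.${region}.amazonaws.com/${stageName}`;
}

// The three routes of the Swagger file; the corpus name is URL-encoded.
function steatiteUrls(baseUrl, corpus, hash) {
  return {
    item: `${baseUrl}/item/${encodeURIComponent(corpus)}/${hash}`,
    optimized: `${baseUrl}/optimized/${hash}`,
    thumbnail: `${baseUrl}/thumbnail/${hash}`,
  };
}

const base = stageBaseUrl("abc123defg", "eu-west-3", "Steatite");
console.log(steatiteUrls(base, "my corpus", "0123abcd").item);
// prints https://abc123defg.execute-api.eu-west-3.amazonaws.com/Steatite/item/my%20corpus/0123abcd
```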

-In the S3 bucket :

-* Choose "*Permissions*".

-* Under "*Block public access (bucket settings)*", choose "*Edit*".

-* Clear "*Block all public access*", and choose "*Save changes*".

-* Under "*Bucket Policy*", choose "*Edit*".

-* Copy the following bucket policy, paste it in the "*Bucket policy editor*" and modify the bucket name.

-```

- {

- "Version": "2012-10-17",

- "Statement": [

- {

- "Sid": "PublicReadGetObject",

- "Effect": "Allow",

- "Principal": "*",

- "Action": [

- "s3:GetObject"

- ],

- "Resource": [

- "arn:aws:s3:::Bucket-Name/*"

- ]

- }

- ]

-}

-```

-* Update the "*Resource*" to your bucket name.

-* Choose "*Save changes*".

+


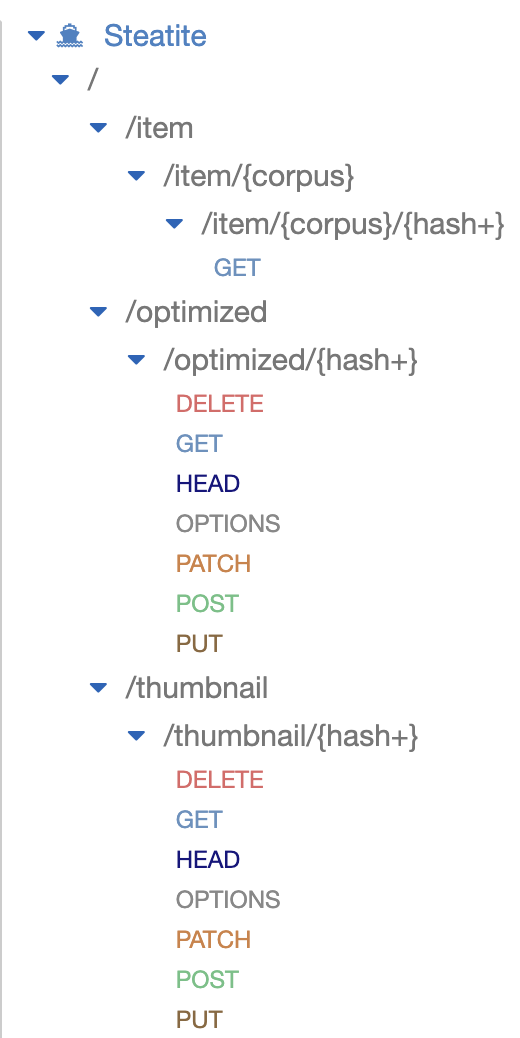


+


+

+

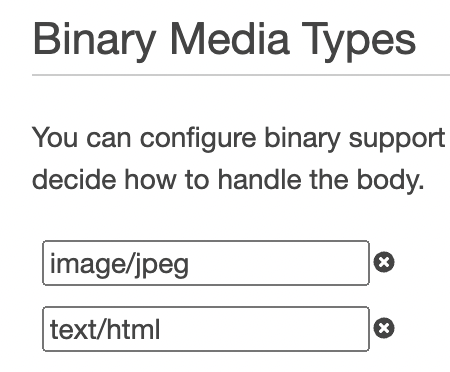

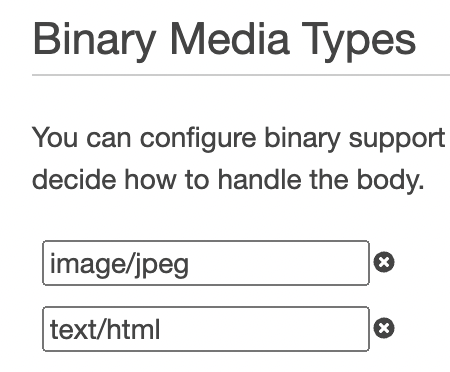

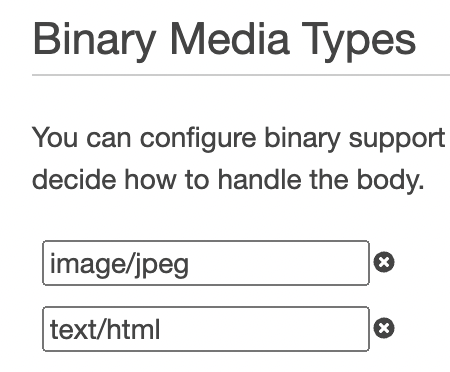

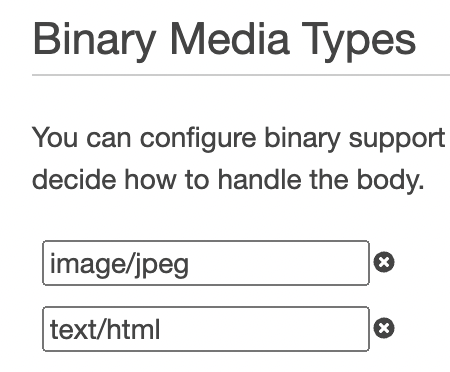

+> VERY IMPORTANT

-#### **API Gateway configuration**

+We need to declare the binary media types accepted by the API, so that responses can return images when we request the optimized and thumbnail versions.

-Create the API:

-* Go to the AWS console: https://eu-west-3.console.aws.amazon.com/apigateway/main/apis?region=eu-west-3.

-* Click on "*Create API*".

-* Click on "*Build*" in the REST API block.

-* Make sure you configurate it well :

+1. In your API, click on "**Settings**" in the left menu.

+2. Scroll down to "**Binary Media Types**".

+3. Add this configuration :


+


+

+4. Go to "**Resources**" and deploy your API.
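Why this setting matters: with a Lambda proxy integration, a function that returns an image has to send the bytes as base64 text and set `isBase64Encoded`; API Gateway converts the body back to binary only when the request matches one of the declared binary media types (typically through its `Accept` header). The sketch below uses example types such as `image/jpeg`, which are assumptions; use the types from your own configuration. The second helper builds the patch operations that `APIGateway.updateRestApi` (AWS SDK v2) accepts for adding binary media types, should you prefer scripting the setting.

```javascript
// Builds a Lambda proxy response carrying an image (contentType is an example).
function imageResponse(imageBuffer, contentType = "image/jpeg") {
  return {
    statusCode: 200,
    headers: { "Content-Type": contentType },
    // API Gateway expects binary bodies as base64 text plus this flag.
    body: imageBuffer.toString("base64"),
    isBase64Encoded: true,
  };
}

// Patch operations for APIGateway.updateRestApi that add binary media types.
// A "/" inside a media type must be escaped as "~1" in the patch path.
function binaryMediaTypePatchOps(types) {
  return types.map((type) => ({
    op: "add",
    path: "/binaryMediaTypes/" + type.split("/").join("~1"),
  }));
}
```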

-* Name your API

-* Click on "*Create API*"

+> We now want to add an image to the S3 bucket (in a folder named after the corpus).

-When the API is created, you can access its paramaters, if you want to create a resource :

-* Click on "*Resources*".

-* Click on "*Actions*" > "*Create Resource*".

-* Give the resource a name :

+### Test phase

+#### **Add a file to S3 and check that it is added to the Thumbnail folder**

-* Click on "*Create Resource*".

+To run our Lambda function "**HandleFileAddedInS3**" automatically, we need to link it to our S3 bucket. Here is how:

-> The resource is created !

+1. Go to "[**S3**](https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3&region=eu-west-3)".

+2. Enter your bucket by clicking on it.

+3. Go to the "**Properties**" tab.

+4. Scroll down to "**Event notifications**".

+5. Click on "**Create event notification**".

+6. Give it a name, such as "**HandleFileAddedInS3Event**".

+7. In "**Event types**", select "**All object create events**".

+8. In "**Destination**", select your Lambda function name (*HandleFileAddedInS3*).

+9. Click on "**Save changes**".
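For reference, here is a sketch of what the notification above amounts to and of what the function receives. `notificationConfiguration` builds the `NotificationConfiguration` object expected by `S3.putBucketNotificationConfiguration` (AWS SDK v2); unlike the console, that route also requires you to grant S3 permission to invoke the function yourself. `parseS3Record` reads the bucket and object key from the event, decodes the key (S3 URL-encodes keys in event notifications, with spaces sent as `+`) and takes the top-level folder as the corpus. The `isGenerated` flag is an assumption about good practice, not a description of the repository's `HandleFileAddedInS3`: since the event type covers all object creations, files written to the Thumbnail or Optimized folders would also trigger the function.

```javascript
// Folder names used by this guide for generated images.
const GENERATED_FOLDERS = ["Thumbnail", "Optimized"];

// Same setup as the console steps; lambdaArn is a placeholder for your function's ARN.
function notificationConfiguration(lambdaArn) {
  return {
    LambdaFunctionConfigurations: [
      {
        Id: "HandleFileAddedInS3Event",
        LambdaFunctionArn: lambdaArn,
        Events: ["s3:ObjectCreated:*"], // "All object create events"
      },
    ],
  };
}

// Extracts the bucket, the decoded key and the corpus (top-level folder) from an S3 event.
function parseS3Record(event) {
  const s3 = event.Records[0].s3;
  // Keys in S3 event notifications are URL-encoded, with spaces sent as "+".
  const key = decodeURIComponent(s3.object.key.replace(/\+/g, " "));
  const parts = key.split("/");
  const corpus = parts.length > 1 ? parts[0] : null;
  return {
    bucket: s3.bucket.name,
    key,
    corpus,
    // True for files the functions write themselves; handling them again
    // could re-trigger the function on its own output.
    isGenerated: GENERATED_FOLDERS.includes(corpus),
  };
}
```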

-* If you want to create a sub resource under the one created, here *picture*, click on it.

-* Click on "*Actions*" > "*Create Resource*".

- * Check the proxy resource :

+Now let's check that adding a file automatically creates its thumbnail in the Thumbnail folder.

- > *Checking the proxy will allow to get and treat all the demands made to the sub resource, for exemple, a hash that could be use to get an image.*

+1. Go to "[**S3**](https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3&region=eu-west-3)".

+2. Enter your bucket by clicking on it.

+3. Click on "**Create folder**" and give it the name of your corpus.

+4. Click on the folder created to enter it.

+5. Click on "**Upload**".

+6. Select a photo.

+7. Click on "**Upload**".

+8. When the upload succeeds, a green "**Upload succeeded**" message appears.

+9. Click on "**Close**".

- * Give a name: {hash+}

+The image should now be in your new folder. Next, check that a thumbnail of it was created in the Thumbnail folder.


+1. Go back to the main page of your bucket.

+2. Click on "**Thumbnail**".

+

+> You now have a file in a corpus folder and its thumbnail in the Thumbnail folder. Next, we check that it was correctly added to the DynamoDB table and that the API calls work.

+

+---

+#### **Check that the file was added to the picture table in DynamoDB**

+

+1. Go to the "[Tables](https://eu-west-3.console.aws.amazon.com/dynamodbv2/home?region=eu-west-3#tables)" page.

+2. Open your table by clicking on it.

+3. Click on "**Explore table items**".

+4. You should see an item with the file's hash and its corpus.
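To cross-check the item from code, you can look it up by its hash. The earlier version of this guide's code computed hashes with Node's `crypto` module using SHA-1; assuming the current `HandleFileAddedInS3` still hashes the raw file content that way (not confirmed here), this sketch reproduces the hash and builds `DynamoDB.getItem` parameters (AWS SDK v2, low-level client) in the same `{ hash: { S: ... } }` shape as the original code. The table name is a placeholder.

```javascript
const crypto = require("crypto");

// Assumption: the partition key is the SHA-1 hex digest of the file's bytes.
function sha1Hex(content) {
  return crypto.createHash("sha1").update(content).digest("hex");
}

// Parameters for DynamoDB.getItem; tableName is your own table's name.
function getItemParams(tableName, hash) {
  return {
    TableName: tableName,
    Key: { hash: { S: hash } },
  };
}
```

For example, `sha1Hex(require("fs").readFileSync("photo.jpg"))` gives the value to look for in the table, if the assumption above holds.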

+

+> Now that the file is in DynamoDB, let's check that our API calls work.

+

+---

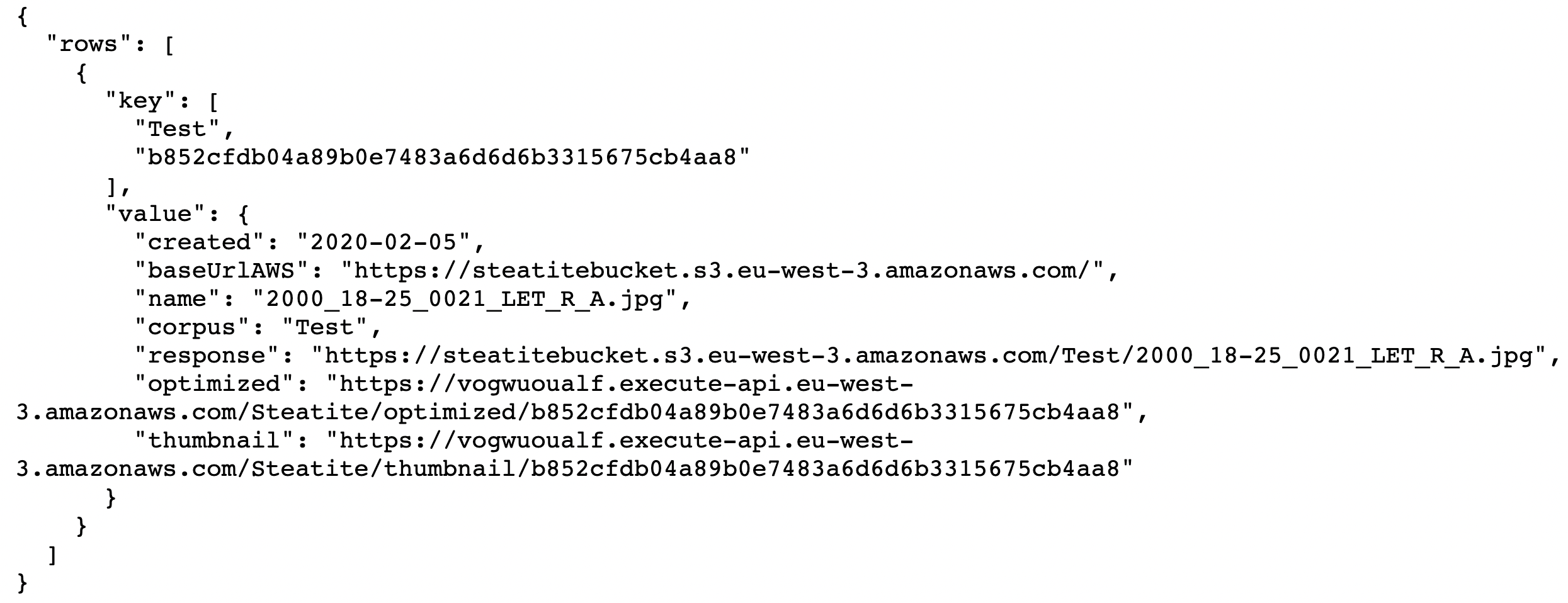

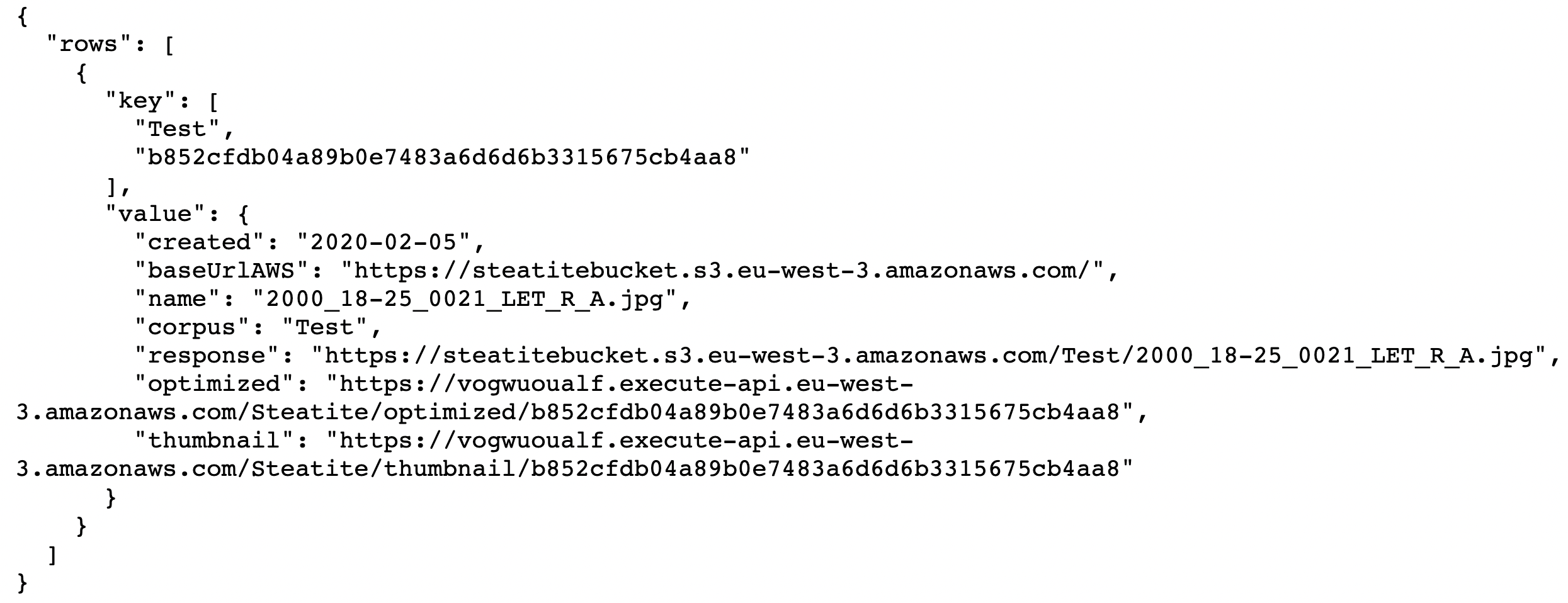

+#### **Check that our API Gateway calls work**

+

+1. Go to [API Gateway](https://eu-west-3.console.aws.amazon.com/apigateway/main/apis?region=eu-west-3).

+2. Access your API by clicking on it.

+3. Go to "**Stages**" on the left menu.

+4. Open the menu of your API by clicking on it.

+

+**/item/{corpus}/{hash+}**

+

+1. Click on "**GET**".

+2. Click on the "**Invoke URL**" link.

+3. Replace `{corpus}` with the corpus name and `{hash+}` with the file's hash.

+> You can find the hash in your DynamoDB table.

+4. You should get a JSON response like this:
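Rather than editing the Invoke URL by hand, you can assemble it. This sketch assumes the standard REST API invoke URL format `https://{restApiId}.execute-api.{region}.amazonaws.com/{stage}`; `restApiId` and the other arguments are placeholders for your own values. The corpus is URL-encoded in case it contains spaces.

```javascript
// Base URL of a deployed REST API stage.
function invokeUrl(restApiId, region, stage) {
  return `https://${restApiId}.execute-api.${region}.amazonaws.com/${stage}`;
}

// URL for the /item/{corpus}/{hash+} route.
function itemUrl(baseUrl, corpus, hash) {
  return `${baseUrl}/item/${encodeURIComponent(corpus)}/${hash}`;
}
```

Open the resulting URL in a browser (or with `curl`) to see the JSON response.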

+

+


+

+


+

+---

- > ⚠️️️ *Do not forget to add the "+" at the end of the resource's name as it is showed in the exemple* ⚠️️️

-

- * Click on "*Create Resource*".

+**/optimized/{hash+}**

-We will finalise the configuration later. The tree should look like this:

+For this call, copy the value of the "**optimized**" attribute from the previous JSON response.

+You should receive the optimized version of your image.

-#### **DynamoDB configuration**

+**/thumbnail/{hash+}**

-* Go to the AWS console : https://eu-west-3.console.aws.amazon.com/dynamodbv2/home?region=eu-west-3#service.

-* Click on "*Tables*" to go go into your tables on the left sidebar.

-* Click on "*Create table*".

-* Configurate our table :

- * Give a name.

- * Give a name to you partition key : hash.

- * Click on "*Create table*"

+For this call, copy the value of the "**thumbnail**" attribute from the previous JSON response.

-> Your table is ready!

+You should receive the thumbnail of your image.

\ No newline at end of file

From 0f209fb0b6373950e367473cffaebbd3f96acd14 Mon Sep 17 00:00:00 2001

From: Desprez Aurélien

<45626870+aurelien45100@users.noreply.github.com>

Date: Mon, 20 Jun 2022 19:25:56 +0200

Subject: [PATCH 3/4] UPDATE: Update of the INSTALL.md file

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Tested the file on Aurélien's personal account; modified it and added information to improve it.

Co-authored : @callistas

---

INSTALL.md | 96 +++++++++++++++++++++++++++++++++---------------------

1 file changed, 58 insertions(+), 38 deletions(-)

diff --git a/INSTALL.md b/INSTALL.md

index 339a9d0..bb8d993 100644

--- a/INSTALL.md

+++ b/INSTALL.md

@@ -41,11 +41,7 @@ For this project, we need to do multiple steps for s3.

##### **1. CREATE AN "S3" BUCKET**

* Go to your AWS console (https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3)


-* Click on "**Create bucket**" :

-


-

-

+* Click on "**Create bucket**".

* Configure your bucket:

* Give it a name (*for example "steatitebucket"*).

* Make sure the region is the one you want to use.

@@ -61,14 +57,10 @@ For this project, we need to do multiple steps for s3.

> Don't forget to tick the box acknowledging that "*the current settings might result in this bucket and the objects within becoming public*".


+

- * Click on "**Create bucket**" :

-

-


-

+ * Click on "**Create bucket**".

> Your bucket is ready!

---

@@ -103,19 +95,13 @@ For this project, we need to do multiple steps for s3.

}

```

> Replace the bucket name in "**Resource**" with yours if it differs from the example.

-* Click on "**Save changes**" :

-


-

+* Click on "**Save changes**".

* Scroll down to the "**Block public access (bucket settings)**" part and click on "**Edit**".

* Check the "**Block all public access**" in order to protect your bucket.

-* Click on "**Save changes**" :

-


-

+* Click on "**Save changes**".

* Confirm the modification.

> Your bucket is now fully ready and configured!
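If you edit the bucket policy often, the "Resource" substitution can be scripted. A minimal sketch: it copies a policy document and points every statement at the objects of your bucket (`arn:aws:s3:::<bucket>/*`). It assumes every statement targets objects rather than the bucket itself, which holds for a `s3:GetObject` policy but not for every policy.

```javascript
// Returns a copy of the policy whose statements target the objects of bucketName.
function withBucketResource(policy, bucketName) {
  const resource = `arn:aws:s3:::${bucketName}/*`;
  return {
    ...policy,
    // The original policy object is left untouched.
    Statement: policy.Statement.map((statement) => ({ ...statement, Resource: [resource] })),
  };
}
```

For example, passing the earlier public-read policy (whose resource was `arn:aws:s3:::Bucket-Name/*`) with `"steatitebucket"` yields `arn:aws:s3:::steatitebucket/*`; print the result with `JSON.stringify(result, null, 2)` before pasting it into the editor.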

@@ -124,6 +110,7 @@ For this project, we need to do multiple steps for s3.

##### **3. CREATE THE "THUMBNAIL" AND "OPTIMIZED" DEFAULT FOLDER.**

+* Go to the "**Objects**" tab.

* Click on "**Create folder**".

* Name it "**_Thumbnail_**".

* Click on "**Create folder**" to validate the creation.

@@ -149,22 +136,14 @@ They will be explained later in each of there parts.

To add a Lambda function, follow these steps:

* Go to the [Lambda functions page](https://eu-west-3.console.aws.amazon.com/lambda/home?region=eu-west-3#/functions).

-* Click on "**Create function**" :

-

-


-

+* Click on "**Create function**".

* Select "**Author from scratch**".

* Give the function the right name: one of the names listed above.

* Choose "**Node.js 14.x**" as the runtime environment.

* In the "**Permissions**" tab, a basic permission role will be created for the function in your [IAM environment](https://us-east-1.console.aws.amazon.com/iamv2/home?region=us-east-1#/roles). Its name will look like "*functionname-role-randomstring*".

* Each function needs different IAM permissions: some need full access to DynamoDB, others to S3. These are detailed later, in each function's section.

-* Click on "**Create function**" :

-

-


-

+* Click on "**Create function**".

* You now have a brand new function. Here is how to add the existing code:

* On Github, go to the src/ folder, where all the functions are.

@@ -193,12 +172,16 @@ Do the same thing for all the functions and then we will configure the IAM permi

We add environment variables to some of our functions so that the code stays configurable and does not need to be edited by hand.

+You will be asked for the name of your future DynamoDB table. You can choose any name, but the name in your environment variables must be identical to your DynamoDB table's name.

+

For each of the following functions:

1. Go to the function in Lambda.

2. Go to the "**Configuration**" tab.

-3. Click on "**Edit**" on "**Environment variables**".

-4. Add the attributes.

-5. Click on "**Save**".

+3. Go to "**Environment variables**" on the left menu.

+4. Click on "**Edit**" on "**Environment variables**".

+5. Click on "**Add environment variable**".

+6. Add the attributes.

+7. Click on "**Save**".

**HandleFileAddedInS3**

@@ -226,6 +209,40 @@ Value : The name of your DynamoDB table.]

* [Key name : S3BucketName;

Value : The name of your S3 bucket.]
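Inside a function, these values are read from `process.env`. A small sketch that fails fast when a variable was forgotten; `S3BucketName` comes from this guide, while the variable holding the table name is whatever key you entered above, so the sketch takes the list of names as a parameter instead of assuming one.

```javascript
// Reads the required environment variables and throws if any is missing or empty.
function readConfig(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}
```

For example, `const { S3BucketName } = readConfig(["S3BucketName"]);` at the top of a handler.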

+---

+

+##### ***Changing memory and timeout of our functions***

+

+We need to adjust the memory allocated to some of our functions, as well as their default timeout, so that they have enough resources and time to do their job.

+

+To modify a function's configuration, follow these steps:

+

+1. Go to your function.

+2. Go to the "**Configuration**" tab.

+3. Select "**General configuration**" on the left menu.

+4. Click on "**Edit**".

+5. Make your modifications and click on "**Save**".

+

+**HandleFileAddedInS3**

+

+* Memory : 512MB.

+* Timeout : 1 minute.

+

+**getAttributes**

+

+* Memory : 128MB.

+* Timeout : 40 seconds.

+

+**getOptimizedPicture**

+

+* Memory : 1536MB.

+* Timeout : 10 seconds.

+

+**getThumbnailOfImage**

+

+* Memory : 128MB.

+* Timeout : 1 minute.
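The same settings, written as data. This sketch turns the list above into parameters for `Lambda.updateFunctionConfiguration` (AWS SDK v2), where `MemorySize` is in MB and `Timeout` is in seconds; it is an illustration, not a script shipped with Steatite.

```javascript
// Values from the list above (1 minute = 60 seconds).
const FUNCTION_SETTINGS = {
  HandleFileAddedInS3: { memoryMb: 512, timeoutSeconds: 60 },
  getAttributes: { memoryMb: 128, timeoutSeconds: 40 },
  getOptimizedPicture: { memoryMb: 1536, timeoutSeconds: 10 },
  getThumbnailOfImage: { memoryMb: 128, timeoutSeconds: 60 },
};

// Parameters for Lambda.updateFunctionConfiguration.
function configurationParams(functionName) {
  const settings = FUNCTION_SETTINGS[functionName];
  if (!settings) throw new Error(`Unknown function: ${functionName}`);
  return {
    FunctionName: functionName,
    MemorySize: settings.memoryMb,
    Timeout: settings.timeoutSeconds,
  };
}
```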

+

---

##### ***IAM PERMISSIONS***

@@ -253,7 +270,7 @@ If you want to add a permission to a role :

* Select "**Attach policies**".

* You can filter by name and select multiple policies.

* Once you are finished with your selection, click on the lower right button "**Attach policies**".

-* Your policies are not attached to your role.

+* Your policies are now attached to your role.

**HandleFileAddedInS3**

@@ -286,7 +303,7 @@ In this part, we are going to create and configure the table that will store dat

1. Go to the "**Tables**" tab at this [link](https://eu-west-3.console.aws.amazon.com/dynamodbv2/home?region=eu-west-3#tables).

2. Click on "**Create table**".

-3. Enter whatever you want as the table name.

+3. Enter a table name of your choice (it must match the value of the environment variable you added earlier).

4. Enter "**hash**" as the partition key and keep the default type, *String*.

5. Keep the default settings and click on "**Create table**".

6. Your table is available once its status changes to "**Active**"; this may take a few seconds.
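The table can also be described as `DynamoDB.createTable` parameters (AWS SDK v2). The key settings match the steps above (a `hash` partition key of type String); `BillingMode: "PAY_PER_REQUEST"` is an assumption and may differ from the console's default settings, so adjust it if you want to reproduce exactly what the console creates.

```javascript
// Parameters for DynamoDB.createTable; tableName must match your environment variable.
function createTableParams(tableName) {
  return {
    TableName: tableName,
    AttributeDefinitions: [{ AttributeName: "hash", AttributeType: "S" }], // String
    KeySchema: [{ AttributeName: "hash", KeyType: "HASH" }], // partition key
    BillingMode: "PAY_PER_REQUEST", // assumption: on-demand capacity
  };
}
```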

@@ -409,13 +426,13 @@ We are no going to configure each of API calls.

2. In the Lambda Function input, write "**getAttributes**" and select it.

3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.

-**+/optimized/{hash+}**

+**/optimized/{hash+}**

1. Click on "**ANY**".

2. In the Lambda Function input, write "**getOptimizedPicture**" and select it.

3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.
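With the greedy `{hash+}` proxy resources configured above, API Gateway hands the matched path to the function in `event.pathParameters`, keyed by the name without the `+` (the earlier version of this guide's code read it as `event['pathParameters']['hash']`). A minimal sketch of reading those parameters defensively, plus a 404 in the Lambda proxy response format; the error body is serialized with `JSON.stringify` so that it matches its `application/json` content type, which the original plain-string body did not.

```javascript
// Reads the {hash+} (and, for /item, the {corpus}) path parameters of a proxy event.
function readPathParameters(event) {
  const params = event.pathParameters || {};
  return { corpus: params.corpus || null, hash: params.hash || null };
}

// 404 response in the Lambda proxy format, with a valid JSON body.
function notFound(hash) {
  return {
    statusCode: 404,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ error: `Picture with hash ${hash} not found` }),
  };
}
```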

-**+/thumbnail/{hash+}**

+**/thumbnail/{hash+}**

1. Click on "**ANY**".

2. In the Lambda Function input, write "**getThumbnailOfImage**" and select it.

@@ -429,7 +446,7 @@ We are no going to configure each of API calls.

4. In "**Stage name**", enter any name you like; "**Steatite**" works well.

5. Click on "**Deploy**".

-> The API should be deployed now, here is the view :

+> The API should now be deployed. Here is the view after clicking on its name:

@@ -446,7 +463,8 @@ We need to add the type of binary files accepted by the API in order to allow ou


-4. Go to "**Resources**" and deploy your API.

+4. Click on "**Save changes**".

+5. Go to "**Resources**" and deploy your API. You can select the stage you created earlier.

> We now want to add an image to the S3 bucket (in a folder named after the corpus).

@@ -522,6 +540,8 @@ For this part you can use the JSON response you had before and copy paste the "*

You should receive the optimized version of your image.

+> The first call creates the image in the Optimized folder of your S3 bucket, so its response may take a few seconds.
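The note above describes a create-on-first-request cache: the first call generates the optimized image and stores it, later calls reuse it. A minimal sketch of that pattern, assuming a hypothetical `store` with async `get`/`put` methods (in the real function this role would be played by the Optimized folder in S3) and a hypothetical key such as `Optimized/<hash>`; it is not the repository's `getOptimizedPicture` code.

```javascript
// Returns the cached value for key, creating and storing it on the first request.
async function getOrCreate(store, key, create) {
  const existing = await store.get(key);
  if (existing !== undefined) return existing; // later calls: fast path
  const value = await create(); // first call: expensive work (e.g. resizing)
  await store.put(key, value);
  return value;
}
```

Only the first request pays for `create()`, which is why the first call can take a few seconds while later ones are quick.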

+

**/thumbnail/{hash+}**

For this call, copy the value of the "**thumbnail**" attribute from the previous JSON response.

From 92d8dc60ce218ae34306f01a66a5c920924a44fa Mon Sep 17 00:00:00 2001

From: Desprez Aurélien

<45626870+aurelien45100@users.noreply.github.com>

Date: Wed, 22 Jun 2022 17:18:55 +0200

Subject: [PATCH 4/4] UPDATE: Update of the INSTALL.md file

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Updated the diagram that explains the links between the AWS components.

Co-authored : @callistas

---

INSTALL.md | 7 +------

1 file changed, 1 insertion(+), 6 deletions(-)

diff --git a/INSTALL.md b/INSTALL.md

index bb8d993..595ed7b 100644

--- a/INSTALL.md

+++ b/INSTALL.md

@@ -5,9 +5,6 @@ AWS Account with access to :

* [Lambda](https://aws.amazon.com/fr/lambda/)

* [API Gateway](https://aws.amazon.com/fr/api-gateway/)

* [DynamoDB](https://aws.amazon.com/fr/dynamodb/)

-

-

-

## Installation procedure

### Brief presentation of the AWS components

@@ -19,15 +16,13 @@ In this project, we use 4 AWS components :

To better understand the links between the different entities, here is a diagram of what happens when a request is made for information used in this project:


+ ---

### Configuration

> For all the configurations, we recommend using the AWS region closest to you (*in our case, eu-west-3 | Europe Paris*)

-We are going to start by configurating all the aws elements of the project and finish with the lambda. Every lambda function represents a functional feature that we want to implement.

-

---

#### ***S3 Configuration***


+---

### Configuration

> For all the configurations, we recommend using the AWS region closest to you (*in our case, eu-west-3 | Europe Paris*)

We start by configuring all the AWS elements of the project and finish with the Lambda functions. Each Lambda function implements one functional feature of the project.

-#### **S3 Configuration**

+---

+#### ***S3 Configuration***

For this project, S3 needs to be set up in several steps:

-1. Create an "S3" bucket.

+1. Create an "**S3**" bucket.

+

2. Change bucket policy and make the bucket private.

-3. Create the "Thumbnail" and "Optimized" default folder.

+3. Create the default "**Thumbnail**" and "**Optimized**" folders.

+---

##### **1. CREATE AN "S3" BUCKET**

* Go to your AWS console (https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3)

-* Click on "Create bucket" :


-

-Give the adequate role to your lambda function (in this case, we want our lambda function to be able to put an item in our database):

-* Go in your Lambda function.

-* Click on "*Configuration*".

-* Click on "*Permissions*".

-* Click on your role.

-

-

-


-You can now see the roles that your function has.

-* Click on create an online politic

-* Indicate that it is for DynamoDB and for the action put item.

-

-

-


-* Add an ARN to make sur that the function can only modify the database you’ve just created

-

-

-

-


-* Click on examine the strategy

-* Name your strategy

-* Click on create the strategy

-* Do the same for access to S3 with the action GetObject

-

-In the code section, add this and modify the name of the database and the URI of the bucket:

+#### ***Lambda configuration***

-```

-const AWS = require("aws-sdk")

-const S3 = new AWS.S3();

-// Connection to the table

-const DynamoDB = new AWS.DynamoDB();

-const tableName = "DYNAMODB_TABLE_NAME"

+All the Lambda functions are already written and available in the [Steatite GitHub repository](https://github.com/Hypertopic/Steatite/tree/v7/src).

-let crypto = require('crypto')

-let finalEtag = crypto.createHash('sha1')

+There are 4 functions:

+* HandleFileAddedInS3

+* getAttributes

+* getOptimizedPicture

+* getThumbnailOfImage

-exports.handler = (event) => {

- //gets the info from the triggered event (= put in S3 bucket) : bucket name and file name

+Each of them is explained later in its own section.

- const s3_object = event['Records'][0]['s3']

- const source_bucket_name = s3_object['bucket']['name']

- const file_name = s3_object['object']['key']

+To add a Lambda function, follow these steps:

+* Go to the [Lambda functions page](https://eu-west-3.console.aws.amazon.com/lambda/home?region=eu-west-3#/functions).

+* Click on "**Create function**" :

- const paramsGetObject = {

- Bucket: source_bucket_name,

- Key: file_name

- }

+

-


+1. Click on "**GET**".

+2. In the Lambda Function input, write "**getAttributes**" and select it.

+3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.

-Give the adequate role to your lambda function (in this case, we want our lambda function to be able to put an item in our database):

-* Go in your Lambda function.

-* Click on "*Configuration*".

-* Click on "*Permissions*".

-* Click on your role.

+**+/optimized/{hash+}**

-


+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getOptimizedPicture**" and select it.

+3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.

-You can now see the roles that your function has.

-* Click on create an online politic

-* Indicate that it is for DynamoDB and for the action get item.

-* Add an ARN to make sur that the function can only modify the database you’ve just created.

-* Click on examine the strategy

-* Name your strategy

-* Click on create the strategy

-* Do the same for access to S3 with the action GetObject

+**+/thumbnail/{hash+}**

-In the code section, add this and modify the name of the database:

+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getThumbnailOfImage**" and select it.

+3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.

-```

-const AWS = require("aws-sdk");

-const S3 = new AWS.S3();

-var DynamoDB = new AWS.DynamoDB();

-const tableName = "TABLE_NAME";

-let responseObject = {};

-

-exports.lambda_handler = async (event, context) => {

- // 1. Parse out query string parameters

- console.log("event : ",event)

- console.log("context : ",context)

- const imageEtag = event['pathParameters']['hash']

-

- console.log("********** imageEtag **********");

- console.log(imageEtag);

-

- // 2. Get the information from DynamoDB

- let paramsGetItem = {

- TableName: tableName,

- Key: {

- "hash" : {

- "S" : imageEtag

- }

- }

- };

-

- let result = await DynamoDB.getItem(paramsGetItem, (err, data) => {

- if (err) {

- console.log("[ERROR : getItem] :",err, err.stack); // an error occurred

- responseObject['statusCode'] = 404

- responseObject['headers'] = {}

- responseObject['headers']['Content-Type'] = 'application/json'

- responseObject['body'] = "Picture with hash "+imageEtag+" not found"

-

- return responseObject

- }

- else {

- console.log("********** imageInfo **********");

- // console.log(imageInfo)

- console.log(data);

- // Construct the body of the response object

- let imageResponse = {}

- imageResponse['hash'] = imageEtag;

- imageResponse['resource'] = data['Item']['resource']

-

- const uriImage = data['Item']['resource']['S']

- console.log(uriImage)

-

- const htmlResponse =

- `

-

-

-

-

+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getOptimizedPicture**" and select it.

+3. Click on "**Ok**" in order to add the correct permissions to the Lambda function.

-You can now see the roles that your function has.

-* Click on create an online politic

-* Indicate that it is for DynamoDB and for the action get item.

-* Add an ARN to make sur that the function can only modify the database you’ve just created.

-* Click on examine the strategy

-* Name your strategy

-* Click on create the strategy

-* Do the same for access to S3 with the action GetObject

+**+/thumbnail/{hash+}**

-In the code section, add this and modify the name of the database:

+1. Click on "**ANY**".

+2. In the Lambda Function input, write "**getThumbnailOfImage**" and select it.

+3. Click on "**OK**" to give API Gateway permission to invoke the Lambda function.

-```

-const AWS = require("aws-sdk");

-const S3 = new AWS.S3();

-var DynamoDB = new AWS.DynamoDB();

-const tableName = "TABLE_NAME";

-let responseObject = {};

-

-exports.lambda_handler = async (event, context) => {

- // 1. Parse out query string parameters

- console.log("event : ",event)

- console.log("context : ",context)

- const imageEtag = event['pathParameters']['hash']

-

- console.log("********** imageEtag **********");

- console.log(imageEtag);

-

- // 2. Get the information from DynamoDB

- let paramsGetItem = {

- TableName: tableName,

- Key: {

- "hash" : {

- "S" : imageEtag

- }

- }

- };

-

- let result = await DynamoDB.getItem(paramsGetItem, (err, data) => {

- if (err) {

- console.log("[ERROR : getItem] :",err, err.stack); // an error occurred

- responseObject['statusCode'] = 404

- responseObject['headers'] = {}

- responseObject['headers']['Content-Type'] = 'application/json'

- responseObject['body'] = "Picture with hash "+imageEtag+" not found"

-

- return responseObject

- }

- else {

- console.log("********** imageInfo **********");

- // console.log(imageInfo)

- console.log(data);

- // Construct the body of the response object

- let imageResponse = {}

- imageResponse['hash'] = imageEtag;

- imageResponse['resource'] = data['Item']['resource']

-

- const uriImage = data['Item']['resource']['S']

- console.log(uriImage)

-

- const htmlResponse =

- `

-

-

-

+#### **Add a file to S3 and verify that it is added to the Thumbnail folder**

-* Click on "*Create Resource*".

+To have our Lambda function "**HandleFileAddedInS3**" run whenever a file is added, we need to link it to our S3 bucket. Here is how to do it:

-> The resource is created !

+1. Go to "[**S3**](https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3&region=eu-west-3)".

+2. Open your bucket by clicking on its name.

+3. Go to the "**Properties**" tab.

+4. Scroll down to "**Event notifications**".

+5. Click on "**Create event notification**".

+6. Give it a name, such as "**HandleFileAddedInS3Event**".

+7. In "**Event types**", select "**All object create events**".

+8. In "**Destination**", select your Lambda function name (*HandleFileAddedInS3*).

+9. Click on "**Save changes**".
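The console steps above can also be expressed as data. As a sketch, here are parameters for S3's `PutBucketNotificationConfiguration` API that match them; "All object create events" corresponds to the `s3:ObjectCreated:*` event type. The bucket name, account id and function ARN are placeholders. Be aware that this API replaces the bucket's entire notification configuration and, unlike the console, does not grant S3 permission to invoke the function for you.

```javascript
// Sketch: parameters for S3 PutBucketNotificationConfiguration mirroring the
// console steps (one Lambda destination, all object-create events).
// bucketName and lambdaArn are placeholders supplied by the caller.
function buildNotificationParams(bucketName, lambdaArn) {
  return {
    Bucket: bucketName,
    NotificationConfiguration: {
      LambdaFunctionConfigurations: [
        {
          Id: "HandleFileAddedInS3Event", // the name given in step 6
          LambdaFunctionArn: lambdaArn,
          Events: ["s3:ObjectCreated:*"], // "All object create events"
        },
      ],
    },
  };
}

// Usage with the AWS SDK for JavaScript v2 (not executed here; ARN is a placeholder):
// const AWS = require("aws-sdk");
// const s3 = new AWS.S3();
// await s3.putBucketNotificationConfiguration(buildNotificationParams(
//   "steatitebucket",
//   "arn:aws:lambda:eu-west-3:123456789012:function:HandleFileAddedInS3")).promise();
```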

-* If you want to create a sub resource under the one created, here *picture*, click on it.

-* Click on "*Actions*" > "*Create Resource*".

- * Check the proxy resource :

+Now let's check that adding a file automatically creates its thumbnail in the **Thumbnail** folder.

- > *Checking the proxy will allow to get and treat all the demands made to the sub resource, for exemple, a hash that could be use to get an image.*

+1. Go to "[**S3**](https://s3.console.aws.amazon.com/s3/buckets?region=eu-west-3&region=eu-west-3)".

+2. Open your bucket by clicking on its name.

+3. Click on "**Create folder**" and give the folder the name of your corpus.

+4. Click on the folder created to enter it.

+5. Click on "**Upload**".

+6. Select a photo.

+7. Click on "**Upload**".

+8. When the upload is successful, a green "**Upload succeeded**" message is displayed.

+9. Click on "**Close**".

- * Give a name: {hash+}

+The image should now be in your new folder. Next, let's verify that a thumbnail of the file was created in the **Thumbnail** folder.
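When the upload succeeds, S3 invokes **HandleFileAddedInS3** with an event whose `Records[].s3.object.key` is URL-encoded (a space arrives as `+`). The sketch below shows one way to read that key and take the corpus from the first folder of the path. The thumbnail layout `Thumbnail/<corpus>/<file>` and the name `parseUpload` are hypothetical, not necessarily what the project's function does. The thumbnail is written to the same bucket and the trigger covers all object-create events. For that reason, the sketch skips keys already under `Thumbnail/` or `Optimized/`, so the function does not trigger itself again.

```javascript
// Sketch: read the uploaded object from one S3 event record and work out its thumbnail key.
// Assumption (hypothetical layout): thumbnails are stored as "Thumbnail/<corpus>/<rest of path>".
const GENERATED_PREFIXES = ["Thumbnail/", "Optimized/"];

function parseUpload(record) {
  const bucket = record.s3.bucket.name;
  // Keys in S3 event notifications are URL-encoded, with spaces sent as "+".
  const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));

  // "Create folder" in the console stores a zero-byte "<corpus>/" object: nothing to do.
  if (key.endsWith("/")) return null;

  // Skip files the pipeline writes itself, otherwise each thumbnail would
  // trigger the function again.
  if (GENERATED_PREFIXES.some((prefix) => key.startsWith(prefix))) return null;

  const [corpus, ...rest] = key.split("/");
  if (rest.length === 0) return null; // file at the bucket root: no corpus folder

  return { bucket, key, corpus, thumbnailKey: `Thumbnail/${corpus}/${rest.join("/")}` };
}

// Usage inside a handler (sketch):
// exports.handler = async (event) => {
//   for (const record of event.Records) {
//     const upload = parseUpload(record);
//     if (upload) { /* create the thumbnail and the DynamoDB item here */ }
//   }
// };
```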

-


+1. Go back to the main page of your bucket.

+2. Open the "**Thumbnail**" folder and check that it contains the thumbnail of your image.

+

+> You now have a file in a corpus folder and its thumbnail in the Thumbnail folder. Next, we verify that the file was recorded in the DynamoDB table and that the API calls work.

+

+---

+#### **Check that the file was added to the picture table in DynamoDB**

+

+1. Go to the "[Tables](https://eu-west-3.console.aws.amazon.com/dynamodbv2/home?region=eu-west-3#tables)" page.

+2. Open your picture table by clicking on it.

+3. Click on "**Explore table items**".

+4. You should see an item with the file's hash and its corpus.
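The same check can be scripted. The minimal sketch below builds a low-level DynamoDB `GetItem` request. It assumes the table's partition key is a string attribute named `hash`, which is the key used by the `getItem` call in this project's Lambda code. The table name is a placeholder. The low-level API wraps every value in a type descriptor such as `{ "S": "..." }`, so a small helper, hypothetical like the rest of the sketch, flattens the string attributes for display.

```javascript
// Sketch: build a low-level DynamoDB GetItem request keyed on the "hash" attribute,
// and flatten the string attributes of the returned item.
function buildGetItemParams(tableName, hash) {
  return {
    TableName: tableName,
    Key: { hash: { S: hash } }, // partition key "hash" of type String (assumption)
  };
}

// Low-level responses wrap each value in a type descriptor, e.g. { S: "..." }.
// Only string ("S") attributes are kept, which is enough to read the hash and corpus.
function flattenStringAttributes(item) {
  const out = {};
  for (const [name, value] of Object.entries(item || {})) {
    if (value && typeof value.S === "string") out[name] = value.S;
  }
  return out;
}

// Usage with the AWS SDK for JavaScript v2 (not executed here; TABLE_NAME is a placeholder):
// const AWS = require("aws-sdk");
// const { Item } = await new AWS.DynamoDB()
//   .getItem(buildGetItemParams("TABLE_NAME", "<hash>")).promise();
// console.log(flattenStringAttributes(Item)); // should show the hash and the corpus
```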

+

+> Now that the file is recorded in DynamoDB, let's check that our API calls work.

+

+---

+#### **Check that our API Gateway calls work**

+

+1. Go to [API Gateway](https://eu-west-3.console.aws.amazon.com/apigateway/main/apis?region=eu-west-3).

+2. Open your API by clicking on it.

+3. Go to "**Stages**" on the left menu.

+4. Expand your stage by clicking on it to display its resources.

+

+**/item/{corpus}/{hash+}**

+

+1. Click on "**GET**".

+2. Click on the "**Invoke URL**" link.

+3. In the URL, replace `{corpus}` with the corpus name and `{hash+}` with the file's hash.

+> You can find both values in your DynamoDB table.

+4. You should get a JSON response describing the item.

+

+
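Instead of editing the Invoke URL by hand, you can build it. REST API stage URLs follow the pattern `https://{restapi_id}.execute-api.{region}.amazonaws.com/{stage_name}`. In the sketch below, the API id and stage name are placeholders that you replace with the values shown on the **Stages** page, and `buildItemUrl` is a hypothetical helper. The corpus is encoded as a single path segment. Each segment of the hash is encoded separately, so any `/` inside the greedy `{hash+}` value is kept.

```javascript
// Sketch: build the GET URL for /item/{corpus}/{hash+} on a deployed stage.
// apiId, region and stage are placeholders taken from the API Gateway "Stages" page.
function buildItemUrl({ apiId, region, stage }, corpus, hash) {
  const base = `https://${apiId}.execute-api.${region}.amazonaws.com/${stage}`;
  // Encode each path segment; keep "/" inside the greedy {hash+} value as a separator.
  const hashPath = hash.split("/").map((segment) => encodeURIComponent(segment)).join("/");
  return `${base}/item/${encodeURIComponent(corpus)}/${hashPath}`;
}

// Usage (Node.js 18+ provides a global fetch; not executed here):
// const url = buildItemUrl({ apiId: "a1b2c3d4e5", region: "eu-west-3", stage: "dev" }, "myCorpus", "<hash>");
// const response = await fetch(url);
// console.log(response.status, await response.json());
```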
