Description

The pretrained LSDNet model by @ZeronSix was trained on the wireframe dataset, which is based on real-life images.

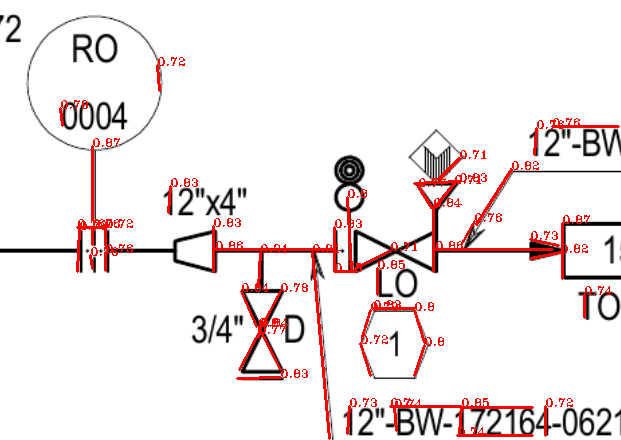

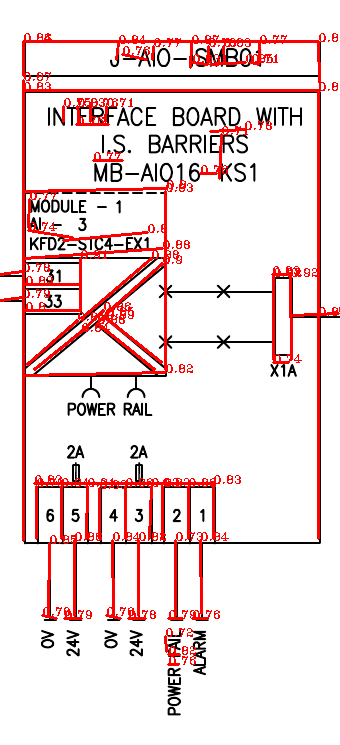

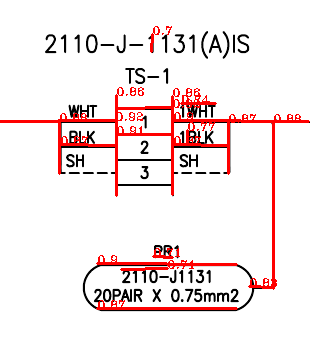

However, my use case is detecting lines (horizontal, vertical, and diagonal) robustly in P&IDs. These images are mostly black lines and characters on a white background, with distinct lines.

A few plots of detections from running the pretrained model on my images are attached below. I made the following observations:

- Image size has a large impact on the detections.

- In places, the edges of closely spaced numbers were detected as lines (false positives).

- In places, lines were missed (false negatives) for no reason I could identify.
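
To make the image-size observation easier to reproduce, one option is to run the same image through the model at a fixed set of sizes and compare the detections. The sketch below only computes the candidate sizes; it preserves the aspect ratio and snaps each side to a multiple of 32. The helper names, the list of long-side values, and the multiple-of-32 constraint are all assumptions for illustration, not documented requirements of LSDNet.

```python
def scaled_size(width, height, long_side, multiple=32):
    """Return (new_w, new_h) whose longer side is close to `long_side`.

    The aspect ratio is kept approximately, and each side is snapped to the
    nearest multiple of `multiple` (assumed constraint, not taken from LSDNet).
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = long_side / max(width, height)

    def snap(value):
        # Round to the nearest multiple, but never go below one multiple.
        return max(multiple, int(round(value * scale / multiple)) * multiple)

    return snap(width), snap(height)


def size_sweep(width, height, long_sides=(512, 768, 1024, 1536)):
    """List the resized dimensions to try for one input image."""
    return [scaled_size(width, height, side) for side in long_sides]


if __name__ == "__main__":
    # Example: a 2000x1000 P&ID scan (hypothetical dimensions).
    for size in size_sweep(2000, 1000):
        print(size)
```

Each resulting size could then be passed to whatever resize call the inference script already uses, so that detections at different scales can be compared side by side.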

Could you please help with the following questions:

- What is the ideal image size for inference with the pretrained model?

- How can one train a custom LSDNet model, or do transfer learning on top of the already trained model, to adapt it to my images?

- What could be the reason for the missed detections in the images below?
