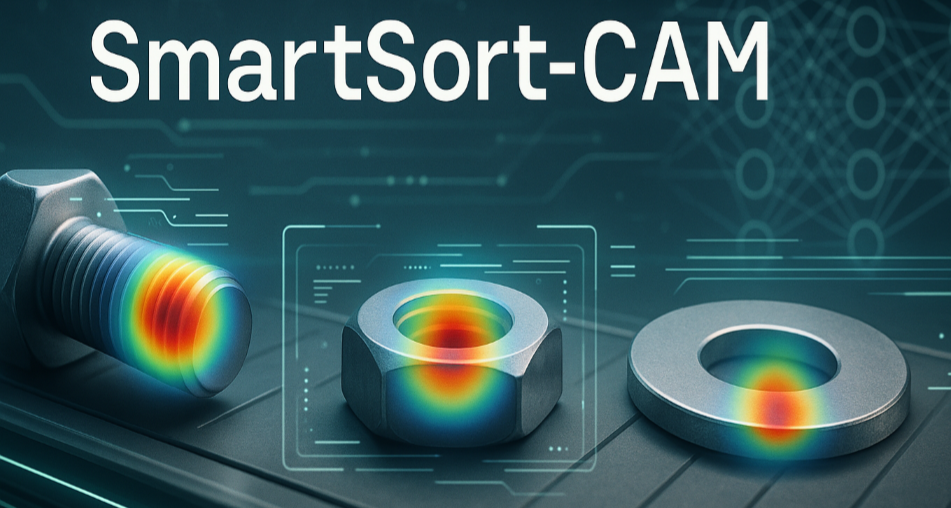

SmartSort-CAM is a computer vision pipeline that classifies industrial parts (bolts, nuts, washers, gears, bearings, etc.) using a ConvNeXt-based deep learning model. The project includes a Blender-based synthetic dataset generator, a REST API for deployment, Grad-CAM visualizations for explainability, Docker integration, and exploratory data analysis.

```
SmartSort-CAM/
├── app/                     # REST API client
│   └── main.py              # Inference API client (calls the FastAPI endpoint)
│
├── cv_pipeline/             # Core CV logic
│   └── inference.py         # Model loading, Grad-CAM, and FastAPI server
│
├── assets/                  # Blender rendering assets
│   ├── hdri/                # HDR lighting maps
│   ├── textures/            # Steel surface textures
│   └── stl/                 # 3D models of industrial parts
│
├── data/                    # Synthetic dataset samples and label CSV
│   ├── part_labels.csv
│   ├── test_images/
│   └── dataset_samples/
│
├── outputs/                 # Inference results
│   ├── predictions/         # JSON output from API inference
│   └── visualizations/      # Grad-CAM image overlays
│
├── notebooks/               # Exploratory and training notebooks
│   ├── exploratory.ipynb    # EDA of the generated dataset
│   └── model_training.ipynb # Training and evaluation of ConvNeXt
│
├── scripts/                 # Dataset rendering logic
│   └── Render_dataset.py    # Synthetic render generator using Blender
│
├── docker/                  # Containerization support
│   ├── Dockerfile
│   └── Makefile
│
├── requirements.txt         # Python dependencies
├── .gitignore
└── README.md
```

Located in `scripts/Render_dataset.py`, the rendering pipeline uses:

- Blender's Cycles renderer for high-fidelity image generation.

- Randomized lighting (HDRI) via `setup_hdri_white_background()`.

- Random camera angles and object jitter using `setup_camera_aimed_at()` and `apply_positional_jitter()`.

- Steel texture application to STL meshes using `import_stl()`.

- HDRI strength and exposure control to simulate real-world lighting variance.

- Positional randomness and material reflectivity to increase dataset diversity.
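To make the randomization concrete, here is a minimal, Blender-free sketch of the geometry behind random camera placement and positional jitter. The helpers `sample_camera_location`, `positional_jitter`, and `look_at_direction` are hypothetical and are not the functions in `Render_dataset.py`; the elevation limits, radius, and offset size are illustrative assumptions. Inside Blender, the sampled tuple could be assigned to the camera object's `location` and the camera aimed with a Track To constraint or an equivalent rotation.

```python
import math
import random

# Hypothetical helpers illustrating the idea; NOT the project's
# setup_camera_aimed_at() / apply_positional_jitter().

def sample_camera_location(radius, min_elev_deg=15.0, max_elev_deg=75.0, rng=random):
    """Sample a point on a sphere of `radius` around the origin (where the part sits).

    Azimuth is uniform over the full circle; elevation is limited so the camera
    never looks from directly below or directly above (limits are assumptions).
    """
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    elevation = math.radians(rng.uniform(min_elev_deg, max_elev_deg))
    x = radius * math.cos(elevation) * math.cos(azimuth)
    y = radius * math.cos(elevation) * math.sin(azimuth)
    z = radius * math.sin(elevation)
    return (x, y, z)

def positional_jitter(max_offset, rng=random):
    """Small uniform (x, y, z) offset so the object is not always centered."""
    return tuple(rng.uniform(-max_offset, max_offset) for _ in range(3))

def look_at_direction(camera, target=(0.0, 0.0, 0.0)):
    """Unit vector pointing from the camera toward the target."""
    d = [t - c for t, c in zip(target, camera)]
    norm = math.sqrt(sum(v * v for v in d))
    return tuple(v / norm for v in d)

# One randomized "shot": seeded so a dataset can be regenerated.
rng = random.Random(42)
cam = sample_camera_location(radius=0.5, rng=rng)
offset = positional_jitter(max_offset=0.02, rng=rng)
direction = look_at_direction(cam, target=offset)
print(cam, offset, direction)
```

Seeding a dedicated `random.Random` instance per render keeps each image reproducible without touching global random state.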

The output directory is organized by class and tag (e.g., data/dataset_samples/bolt/good/bolt_001.png).

Finally, the script auto-generates a CSV:

```csv
filepath,label
bolt/good/bolt_001.png,bolt
...
```

This CSV is used for model training.
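A minimal sketch of how such a CSV could be produced, assuming the `<class>/<tag>/<file>.png` layout described above with the class folder used as the label. `write_label_csv` is a hypothetical helper, not code from `Render_dataset.py`.

```python
import csv
from pathlib import Path

def write_label_csv(dataset_root, csv_path):
    """Write `filepath,label` rows for every PNG under dataset_root/<class>/<tag>/.

    Hypothetical helper. The label is the first path component (the class
    folder); the filepath is stored relative to dataset_root, giving rows
    like `bolt/good/bolt_001.png,bolt`. Returns the number of rows written.
    """
    root = Path(dataset_root)
    rows = []
    for png in sorted(root.glob("*/*/*.png")):
        rel = png.relative_to(root)
        rows.append((rel.as_posix(), rel.parts[0]))
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["filepath", "label"])
        writer.writerows(rows)
    return len(rows)

# Example: write_label_csv("data/dataset_samples", "data/part_labels.csv")
```

Storing paths relative to the dataset root keeps the CSV portable between the rendering machine and the training environment.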

Found in notebooks/exploratory.ipynb, this notebook analyzes:

- Class distribution bar plots

- Image shape distributions

- Random render samples

- Augmentation previews

- Trained model sanity checks on generated images

This helped verify:

- Data cleanliness

- Label balance

- Visual consistency across categories
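A label-balance check of the kind listed above can be sketched in a few lines, assuming the `filepath,label` CSV format. The rows below are toy inputs for illustration, not the real dataset, and the helper names are hypothetical.

```python
import csv
import io
from collections import Counter

def class_distribution(csv_file):
    """Count rows per label in a `filepath,label` CSV (file-like object)."""
    reader = csv.DictReader(csv_file)
    return Counter(row["label"] for row in reader)

def imbalance_ratio(counts):
    """Largest class count divided by smallest; 1.0 means perfectly balanced."""
    return max(counts.values()) / min(counts.values())

# Toy CSV content, standing in for part_labels.csv.
sample = io.StringIO(
    "filepath,label\n"
    "bolt/good/bolt_001.png,bolt\n"
    "bolt/good/bolt_002.png,bolt\n"
    "nut/good/nut_001.png,nut\n"
    "nut/good/nut_002.png,nut\n"
    "washer/good/washer_001.png,washer\n"
)
counts = class_distribution(sample)
print(counts)                   # Counter({'bolt': 2, 'nut': 2, 'washer': 1})
print(imbalance_ratio(counts))  # 2.0
```

The same `Counter` can feed a bar plot directly (labels as x, counts as heights).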

Defined in notebooks/model_training.ipynb:

- Backbone: `convnext_large` from TIMM

- Image size: 512x512

- Loss: CrossEntropy

- Optimizer: AdamW

- Regularization: Dropout (0.3), weight decay (1e-5)

- Augmentations: Flip, rotation, color jitter

- Split: 80/20 train/validation

- Validation Accuracy: 100% on synthetic validation set

- F1 / Precision / Recall: All 1.0 (confirmed clean split)

- Domain shift test (blur, noise, rotation): ~99.5% accuracy with high confidence
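The notebook's exact splitting code is not reproduced here; the following is a hypothetical sketch of a seeded 80/20 random split plus a leakage check on file paths, the kind of check that supports a "clean split" claim. A path check only catches identical files: near-duplicate renders of the same STL mesh from similar angles can still land on both sides, so grouping by source mesh is a stricter alternative.

```python
import random

def train_val_split(rows, val_fraction=0.2, seed=42):
    """Shuffle (filepath, label) rows with a fixed seed and cut off a validation slice.

    Hypothetical helper: a plain random split. A stratified split (shuffling
    within each class) is a common alternative when classes are imbalanced.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

def assert_no_leakage(train, val):
    """Raise ValueError if any image path appears in both splits."""
    overlap = {path for path, _ in train} & {path for path, _ in val}
    if overlap:
        raise ValueError(f"{len(overlap)} file(s) appear in both splits")

# Example with rows read from part_labels.csv:
# train, val = train_val_split(rows); assert_no_leakage(train, val)
```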

Found in cv_pipeline/inference.py, the pipeline offers:

- API endpoint: `/predict`

- Input: multipart image upload

- Outputs: `predicted_class`, `confidence`, `entropy`, top-3 predictions, and `gradcam_overlay` (base64 PNG)

- Grad-CAM: hooked into the last `conv_dw` block

- Produces heatmaps over the input image

- Visualizations show the part regions that influence each prediction
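Grad-CAM weights each channel of the hooked layer's activations by the spatial mean of the target class score's gradient, sums the weighted maps, and applies a ReLU. The plain-Python sketch below shows only that arithmetic on nested lists, assuming the activations and gradients of the last `conv_dw` block have already been captured (in PyTorch this is typically done with `register_forward_hook` and `register_full_backward_hook`). It is an illustration, not the code in `inference.py`. The resulting map has the hooked layer's spatial size, which is coarser than the input image, so it is upsampled before being overlaid.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM map from one layer's activations and gradients.

    activations, gradients: nested lists shaped [channels][height][width],
    i.e. the hooked layer's output and the gradient of the target class
    score with respect to that output.
    Returns a [height][width] map normalized to [0, 1].
    """
    channels = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # 1. Channel weights: global-average-pool the gradients over space.
    weights = [
        sum(sum(row) for row in gradients[k]) / (h * w) for k in range(channels)
    ]
    # 2. Weighted sum of activation maps, then ReLU to keep positive evidence.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(channels)))
            for j in range(w)
        ]
        for i in range(h)
    ]
    # 3. Normalize to [0, 1] so the map can be color-mapped and overlaid.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam

# Two 2x2 channels: channel 0 supports the class, channel 1 argues against it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

In practice the same three steps are a few tensor operations (mean over spatial dims, weighted sum over channels, `relu`); lists are used here only to keep the sketch dependency-free.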

All inference outputs are saved to outputs/:

- Grad-CAM overlays: `outputs/visualizations/gradcam_bolt.png`

- API JSONs: `outputs/predictions/result_bolt.json`

These can be used for audits, reports, or additional UI visualization.
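As an illustration of what a record like `result_bolt.json` could contain, the sketch below derives `predicted_class`, `confidence`, `entropy`, and a top-3 list from raw logits, then writes the JSON and decodes a base64 `gradcam_overlay` into a PNG. It assumes softmax probabilities, entropy measured in nats, and a `top3` key name; `summarize_logits` and `save_outputs` are hypothetical helpers, not the project's API.

```python
import base64
import json
import math
from pathlib import Path

def summarize_logits(logits, class_names, k=3):
    """Softmax over logits, then confidence, entropy (nats), and top-k classes."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    # Shannon entropy: 0 for a one-hot prediction, log(n) when uniform.
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return {
        "predicted_class": class_names[ranked[0]],
        "confidence": probs[ranked[0]],
        "entropy": entropy,
        "top3": [[class_names[i], probs[i]] for i in ranked[:k]],  # assumed key name
    }

def save_outputs(result, overlay_png_b64, out_dir, stem):
    """Write predictions/result_<stem>.json and visualizations/gradcam_<stem>.png."""
    out = Path(out_dir)
    json_path = out / "predictions" / f"result_{stem}.json"
    png_path = out / "visualizations" / f"gradcam_{stem}.png"
    json_path.parent.mkdir(parents=True, exist_ok=True)
    png_path.parent.mkdir(parents=True, exist_ok=True)
    json_path.write_text(json.dumps(result, indent=2))
    png_path.write_bytes(base64.b64decode(overlay_png_b64))
    return json_path, png_path
```

High confidence with low entropy signals a decisive prediction; entropy near `log(number of classes)` flags an image the model is unsure about, which is useful when auditing results.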

In `docker/`, you'll find the `Dockerfile`:

```dockerfile
FROM pytorch/pytorch:2.1.0-cuda11.8-cudnn8-runtime

WORKDIR /app

COPY . /app

RUN pip install --no-cache-dir -r requirements.txt

EXPOSE 8000

CMD ["uvicorn", "cv_pipeline.inference:app", "--host", "0.0.0.0", "--port", "8000"]
```

and a `Makefile` with the following targets:

```bash
make build     # Builds Docker image
make run-gpu   # Runs inference server with GPU
make stop      # Stops all containers
```

Quick start:

```bash
# Clone repo
git clone https://github.com/gbr-rl/SmartSort-CAM

# Build Docker image
make build

# Run container (GPU)
make run-gpu

# Send test request
python app/main.py bolt.png
```

I'm excited to connect and collaborate!

- Email: gbrohiith@gmail.com

- LinkedIn: https://www.linkedin.com/in/rohiithgb/

- GitHub: https://github.com/GBR-RL/

This project is open-source and available under the MIT License.

🌟 If you like this project, please give it a star! 🌟