📺 Watch the System Demo featuring Vectorized Pandas Simulations and Context-Aware Generative AI.

Hyperion is a technical sales acceleration platform designed for the Energy Sector. It solves the "Black Box" problem of AI by anchoring Generative content to a deterministic Geospatial Physics Engine.

Industrial energy sales (Microgrids, Hybrid Plants) suffer from long feedback loops. Sales engineers wait days for simulation teams to validate simple scenarios.

| KPI | Challenge | Hyperion Solution |

|---|---|---|

| Sales Cycle Time | 3-5 days for engineering team validation. | < 200ms real-time simulation via vectorized local compute. |

| Proposal Accuracy | Sales reps estimate numbers, leading to contract disputes. | Physics-First Architecture: Financials (LCOE, CAPEX) are calculated mathematically, not hallucinated by AI. |

| Standardization | Every proposal looks different and risks brand inconsistency. | Templated AI Generation ensures every PDF follows the corporate narrative while using dynamic site data. |

We utilize a "Hybrid Intelligence" architecture where the Python backend handles the math, and the LLM handles the narrative.

Data flow between the User, the Compute Engine, and External AI Services.

graph LR

User[Sales Executive] -- "HTTPS/React" --> UI[Dashboard]

subgraph "Hyperion Container"

UI -- "JSON Payload" --> API[FastAPI Orchestrator]

API -- "Vectorized Arrays" --> Sim[Pandas Physics Engine]

API -- "Context + KPIs" --> Agent[LangChain Service]

end

Agent -- "Prompt Completion" --> OpenAI[GPT-4o]

style User stroke:#333,stroke-width:2px

style UI stroke:#333,stroke-width:2px

style Sim stroke:#333,stroke-width:2px

style Agent stroke:#333,stroke-width:2px

style OpenAI stroke:#333,stroke-width:2px,stroke-dasharray: 5 5

How we prevent Hallucinations by forcing the AI to use pre-calculated data.

sequenceDiagram

participant U as User

participant P as Physics Engine

participant L as LLM

U->>P: Config: "Miami, 10MW Solar, 5 Engines"

P->>P: Calculate Irradiance (Orbital Mechanics)

P->>P: Optimize Dispatch (Battery vs Engine)

P-->>U: Return: LCOE $0.12/kWh, CO2 -40%

U->>L: "Generate Proposal"

Note right of L: System injects calculated KPIs<br/>into the System Prompt.

L-->>U: "Based on Miami's irradiance...<br/>we save 40% CO2..."

Strategic choices balancing latency, accuracy, and cost.

| Component | Decision | Alternatives Considered | Justification (The "Why") |

|---|---|---|---|

| Simulation Engine | Pandas / NumPy | SQL Stored Procedures | Latency: Vectorized CPU operations in memory (<50ms) are orders of magnitude faster than database round-trips for 8,760-hour timeseries calculations. |

| AI Strategy | Prompt Engineering (RAG-Lite) | Fine-Tuned Model | Accuracy: Energy prices and physics don't change enough to warrant a fine-tune. Injecting real-time calculated KPIs into the context window guarantees 100% numerical accuracy (Zero Hallucination). |

| Backend | FastAPI (Async) | Flask / Django | Concurrency: We need to handle long-running OpenAI requests without blocking the high-speed simulation endpoints. FastAPI's native async/await handles this I/O bound traffic efficiently. |

Scenario: 100 Sales Reps generating 50 proposals/day each.

| Resource | Unit Cost | Monthly Est. | Optimization |

|---|---|---|---|

| Physics Compute | $0 (Local CPU) | $0.00 | Calculations run on the container CPU. No external API calls required for math. |

| AI Generation | $0.01 / Proposal | ~$150.00 | Context Pruning: We only send the final aggregated KPIs (approx 500 tokens) to the LLM, not the full hourly timeseries data. |

| Database | $15 / month | $15.00 | Storing configuration metadata (KB size) is trivial compared to timeseries storage. |

- Pydantic Validators: The physics engine crashes if fed invalid data (e.g., negative battery capacity). We implement strict Pydantic models to catch

NaNor impossible values at the API gate, protecting the simulation logic.

- AI Degradation: If OpenAI API fails (503), the system falls back to a Deterministic Template. The user still gets a proposal with the correct charts and numbers, just without the custom "flair" text.

We treat the Physics Engine as "Mission Critical" code.

- 100% Unit Test Coverage: Pytest suite validates the

calculations.pymodule against known Excel benchmarks. - Integration Tests:

AsyncMockis used to simulate OpenAI responses, ensuring the CI/CD pipeline runs offline and incurs zero cost.

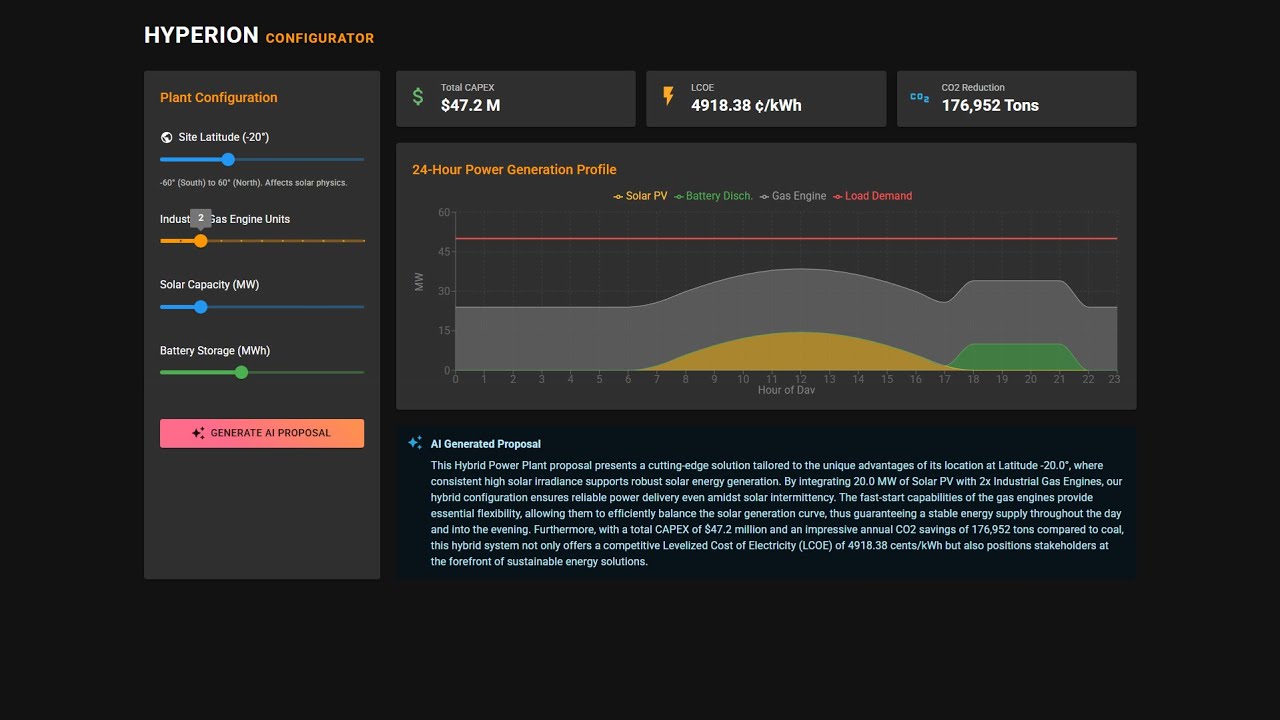

Industrial energy sales require complex calculations to prove value. Spreadsheets are error-prone, and static PDFs don't scale. Hyperion solves this by:

- Geospatial Physics Engine: Instead of static estimates, it uses a Pandas/NumPy engine to model solar irradiance based on Latitude and Orbital Mechanics. It simulates a site-specific 24-hour hybrid dispatch cycle (Solar + Industrial Gas Engine + Battery).

- Instant Financial Feedback: Calculates LCOE (Levelized Cost of Electricity), CAPEX, and CO2 reduction metrics in real-time.

- Context-Aware AI Automation: Uses LangChain & GPT-4o to instantly draft technical proposals. The AI analyzes the geospatial data (e.g., solar seasonality at high latitudes) to generate location-specific value propositions.

The application is built on a containerized Microservices architecture:

- Frontend (React + TypeScript): A high-performance dashboard using Material UI and Recharts for real-time visualization of energy profiles.

- Calculation Engine (Python + Pandas): A vectorised simulation layer that generates synthetic solar data and optimizes dispatch logic (Solar → Battery → Engine).

- Backend API (FastAPI): Asynchronous orchestration handling simulation requests and DB transactions.

- Database (PostgreSQL): Persists hardware specifications (Products) and user configurations.

- AI Service (LangChain): Chains simulation KPIs into prompt templates to generate context-aware sales pitches.

- Geospatial Configurator: Sliders to adjust Site Latitude, Engine Count, Solar MW, and Battery MWh to model any location globally.

- Orbital Physics Engine: Calculates real-time solar irradiance based on Earth-Sun geometry (Declination & Elevation), visualizing the "Duck Curve" effect where engines ramp down as solar peaks.

- Financial Modeling: Automatic calculation of LCOE (Levelized Cost of Electricity), Total CAPEX, and CO2 Savings vs Coal baseline.

- Context-Aware AI Agent: "One-Click" generation of vendor-neutral executive summaries that analyze site-specific solar seasonality and reliability data.

- Framework: FastAPI (Async Python 3.10)

- Simulation: Pandas, NumPy (Vectorized Operations)

- AI: LangChain, OpenAI GPT-4o-mini

- Database: PostgreSQL 15, SQLAlchemy (ORM), Pydantic (Validation)

- Core: React 18, Vite, TypeScript

- UI Library: Material UI (MUI v5)

- Visualization: Recharts (D3-based wrapper)

- Networking: Axios

- Containerization: Docker, Docker Compose

- Hot Reloading: Configured for both Frontend & Backend dev capability.

- Docker Desktop installed

- OpenAI API Key

-

Clone the repository

git clone https://github.com/Nibir1/Hyperion.git cd Hyperion -

Set Environment Variables Create a

.envfile in the root or export the key directly:export OPENAI_API_KEY=sk-proj-xxxx... -

Build and Run

make build

Make build command internally runs:

docker-compose build --no-cache

docker-compose up -d

@echo "Application running at http://localhost:3000"This project includes a comprehensive test suite using Pytest that covers 100% of the critical calculation logic and API endpoints.

The tests run inside the Docker container to ensure environment consistency. We use:

- In-Memory SQLite: To perform fast, isolated database integration tests without affecting the persistence layer.

- Unittest Mocks: To simulate OpenAI API calls, ensuring the test suite is zero-cost and runs offline.

- Pytest-Cov: To verify code coverage metrics.

To run the full test suite and view the coverage report:

make tests- Frontend Dashboard: http://localhost:3000

- Backend Swagger Docs: http://localhost:8000/docs

Hyperion doesn't guess; it calculates.

- Solar: Generates a Gaussian bell curve peaking at 12:00 PM.

- Battery: Detects evening peak demand (18:00-21:00) and discharges stored energy.

- Engines: Fill the remaining "Net Load" gap to ensure 100% reliability.

- User clicks "Generate Proposal".

- Backend runs the simulation to get final KPIs (e.g., "45,000 Tons CO2 Saved").

- LangChain injects these numbers into a prompt template.

- LLM returns a cohesive paragraph explaining why this specific configuration is profitable.

Hyperion/

├── docker-compose.yml # Orchestration

├── backend/

│ ├── app/

│ │ ├── api/ # API Routes

│ │ ├── main.py # Entry Point & Lifespan

│ │ ├── calculations.py # Pandas Simulation Engine

│ │ ├── ai_service.py # LangChain Logic

│ │ ├── models.py # SQLAlchemy Tables

│ │ └── schemas.py # Pydantic Models

│ └── requirements.txt

├── frontend/

│ ├── public/ # Static Assets (Logo)

│ ├── src/

│ │ ├── components/ # UI Blocks (Chart, Form, KPIs)

│ │ ├── services/ # API Integration

│ │ └── App.tsx # Main Dashboard Logic

│ └── package.json

└── README.md

- Core Simulation Engine (Solar/Engine/Battery)

- Financial Logic (LCOE/Capex)

- GenAI Proposal Integration

- User Auth (Save Projects per User)

- PDF Export of Charts

Architected by Nahasat Nibir Senior AI & Data Solutions Architect

-blueviolet?style=for-the-badge)