A platform for building, training, and running inference on TensorFlow.js-based language models with the assistance of LLM-powered agents, hosted on Google Cloud Run.

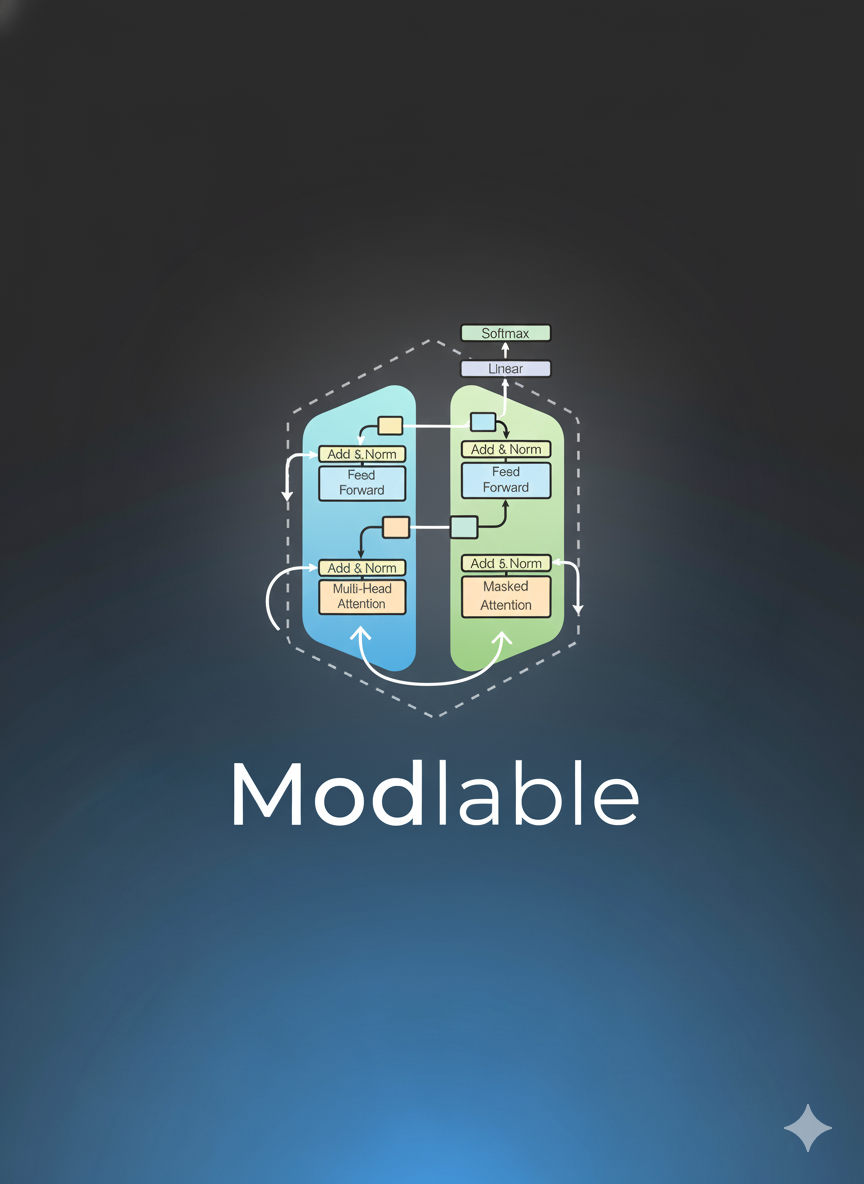

Modlable is an experimental platform that allows developers and researchers to build, train, and deploy lightweight language models (LMs) directly in JavaScript using TensorFlow.js, augmented by Large Language Model (LLM) agents for orchestration, optimization, and workflow automation.

The platform is cloud-native — it’s designed to run efficiently on Google Cloud Run, enabling serverless and scalable model operations with minimal DevOps overhead.

- 🧩 Modular Training Pipelines: Train custom token-based language models in TensorFlow.js.

- 🤖 LLM Orchestration Agents: Use pre-configured agents to guide hyperparameter tuning, dataset preparation, and model evaluation.

- ☁️ Cloud Run Deployment: Run models and inference endpoints on demand using containerized builds.

- 🔁 Seamless Inference API: Serve models as REST endpoints for text generation, embedding, or classification.

- 🧠 Local + Cloud Support: Develop locally, then deploy to GCP with one command.
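To make "token-based" concrete, here is a minimal sketch of how text might be turned into integer token IDs and (context, next-token) training pairs before being handed to a TensorFlow.js model. It is plain JavaScript with no dependencies. The function names (`buildVocab`, `encode`, `makeTrainingPairs`), the whitespace tokenizer, and the `<unk>` token are illustrative assumptions, not Modlable's actual pipeline.

```javascript
// Hypothetical helpers; Modlable's real tokenizer may differ.

// Build a word-level vocabulary, reserving ID 0 for unknown tokens.
function buildVocab(corpus) {
  const vocab = new Map([["<unk>", 0]]);
  for (const word of corpus.toLowerCase().split(/\s+/).filter(Boolean)) {
    if (!vocab.has(word)) vocab.set(word, vocab.size);
  }
  return vocab;
}

// Map text to token IDs, falling back to <unk> (0) for unseen words.
function encode(text, vocab) {
  return text
    .toLowerCase()
    .split(/\s+/)
    .filter(Boolean)
    .map((word) => vocab.get(word) ?? 0);
}

// Slide a fixed-size window over the IDs to get (context, target) pairs.
function makeTrainingPairs(ids, contextSize) {
  const pairs = [];
  for (let i = 0; i + contextSize < ids.length; i++) {
    pairs.push({
      context: ids.slice(i, i + contextSize),
      target: ids[i + contextSize],
    });
  }
  return pairs;
}

const vocab = buildVocab("once upon a time there was a model");
const ids = encode("once upon a time", vocab);
const pairs = makeTrainingPairs(ids, 2);
console.log(ids, pairs);
```

In a real training run, the `context` arrays and `target` values would typically be converted to tensors (for example with `tf.tensor2d`) before being passed to the model.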

    modlable/
    ├── frontend/
    │   └── modlable/      # The frontend Angular app
    ├── backend/
    │   ├── src/           # Genkit + Cloud Functions/Cloud Run for agents
    │   └── package.json   # Node project dependencies
    ├── Dockerfile         # Container configuration for running it all
    ├── .env.example       # Example environment configuration
    └── README.md          # You are here

Before getting started, ensure you have:

- Node.js ≥ 18.x

- npm or yarn

- Docker (for containerization)

- Google Cloud SDK (`gcloud`) configured with billing and permissions

- A Google Cloud Project with Cloud Run, Artifact Registry, and Cloud Build enabled

- Clone the repository:

  `git clone https://github.com/yourusername/modlable.git`

  `cd modlable`

- Install dependencies:

  `npm install`

- Set up environment variables, then edit the `.env` file with your API keys and Cloud project info:

  `cp .env.example .env`

Run the API locally for testing:

`npm run dev`

To start a local training session:

`npm run train`

Run inference on a local model:

`npm run infer "Once upon a time"`
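As a rough illustration of how a command like `npm run infer "Once upon a time"` could hand its prompt to a script, the sketch below reads the prompt from an argument list shaped like `process.argv`. The `parsePrompt` helper and the `infer.js` file name are hypothetical; the project's actual script may work differently.

```javascript
// Hypothetical argument handling for an inference script.
// argv follows the shape of process.argv: [node binary, script path, ...args].
function parsePrompt(argv) {
  const prompt = argv.slice(2).join(" ").trim();
  if (prompt.length === 0) {
    throw new Error('Usage: npm run infer "<prompt>"');
  }
  return prompt;
}

// Simulated argument list; a real script would pass process.argv instead.
const cliPrompt = parsePrompt(["node", "infer.js", "Once upon a time"]);
console.log(`Prompt: ${cliPrompt}`);
```

Failing fast on an empty prompt keeps a mistyped command from starting a model load that has nothing to generate from.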

- Build and push the Docker image:

  `gcloud builds submit --tag gcr.io/[PROJECT_ID]/modlable`

- Deploy to Cloud Run:

  `gcloud run deploy modlable --image gcr.io/[PROJECT_ID]/modlable --platform managed --region [REGION] --allow-unauthenticated`

- Access your endpoint:

  `https://modlable-[REGION]-a.run.app/infer`

| Method | Endpoint | Description |
|---|---|---|
| POST | `/train` | Initiate a model training session |
| POST | `/infer` | Run inference with a trained model |
| GET | `/models` | List all available trained models |
| POST | `/agents/optimize` | Use LLM agent for optimization guidance |
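The endpoints in the table above can also be called from Node.js. The following is a minimal sketch of a client, assuming the API accepts and returns JSON and is reachable at a base URL such as `http://localhost:8080`. The helper names (`buildRequest`, `callApi`) are hypothetical, and only the `prompt` field for `/infer` is documented in this README; request bodies for the other endpoints are not specified here.

```javascript
// Hypothetical client helpers; the base URL and response shape are assumptions.

// Build the URL and fetch options for one of the documented endpoints.
function buildRequest(baseUrl, method, path, body) {
  const url = new URL(path, baseUrl).toString();
  const options = { method, headers: {} };
  if (body !== undefined) {
    options.headers["Content-Type"] = "application/json";
    options.body = JSON.stringify(body);
  }
  return { url, options };
}

// Send the request using the fetch API built into Node.js 18+.
async function callApi(baseUrl, method, path, body) {
  const { url, options } = buildRequest(baseUrl, method, path, body);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`${method} ${path} failed with status ${response.status}`);
  }
  return response.json(); // assumes the API replies with JSON
}

// Example: prepare (but do not send) an inference request.
const inferRequest = buildRequest("http://localhost:8080", "POST", "/infer", {
  prompt: "The future of AI is",
});
console.log(inferRequest.url, inferRequest.options);
```

With a server running, `callApi("http://localhost:8080", "GET", "/models")` would send the request that lists trained models. Keeping request construction separate from sending makes the helper easy to check without a live endpoint.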

Example Request:

    curl -X POST https://modlable-[REGION]-a.run.app/infer \
      -H "Content-Type: application/json" \
      -d '{"prompt": "The future of AI is"}'

| Variable | Description |
|---|---|
| `GOOGLE_PROJECT_ID` | Your GCP project ID |
| `MODEL_BUCKET` | Cloud Storage bucket for model checkpoints |
| `OPENAI_API_KEY` | API key for LLM agent integration (optional) |
| `PORT` | Server port (defaults to 8080) |
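One way a Node.js service might read these variables at startup is sketched below. The variable names, the optional `OPENAI_API_KEY`, and the `8080` default come from the table; the `loadConfig` function, the returned field names, and the choice to treat `GOOGLE_PROJECT_ID` and `MODEL_BUCKET` as required are assumptions made for illustration.

```javascript
// Hypothetical config loader; only the variable names come from the README.
function loadConfig(env = process.env) {
  // Assumption: the project ID and bucket are required for the service to run.
  const missing = ["GOOGLE_PROJECT_ID", "MODEL_BUCKET"].filter(
    (name) => !env[name]
  );
  if (missing.length > 0) {
    throw new Error(
      `Missing required environment variables: ${missing.join(", ")}`
    );
  }

  // PORT falls back to 8080 when unset, as documented in the table.
  const port = Number.parseInt(env.PORT ?? "8080", 10);
  if (Number.isNaN(port)) {
    throw new Error(`PORT must be a number, got "${env.PORT}"`);
  }

  return {
    projectId: env.GOOGLE_PROJECT_ID,
    modelBucket: env.MODEL_BUCKET,
    openAiApiKey: env.OPENAI_API_KEY ?? null, // optional; null when not set
    port,
  };
}

// Example with an explicit environment object instead of process.env.
const config = loadConfig({
  GOOGLE_PROJECT_ID: "my-project",
  MODEL_BUCKET: "my-bucket",
});
console.log(config);
```

Validating configuration once at startup surfaces a missing variable immediately instead of at the first request that needs it.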

Contributions are welcome!

- Fork the repo

- Create a new feature branch

- Submit a pull request with a clear description

MIT License © 2025 — Modlable Contributors

- Web dashboard for model visualization and management

- Support for multimodal training data (text + image)

- Integration with Hugging Face model zoo

- Cross-runtime inference (Node, Browser, Edge)

Made with ❤️ by the Modlable Team. Empowering developers to build LMs anywhere.