mintlify-cache-sim (all on top of cloudflare workers)

WIP reproduction of mintlify's edge caching architecture (im still fixing logic bugs)

a solid write-up on how mintlify uses cloudflare and vercel together got me curious, so i recreated their architecture based on that blog. the mintlify team has been super helpful and this is my take on the same approach with a little bit of ✨ Effect ✨ magic

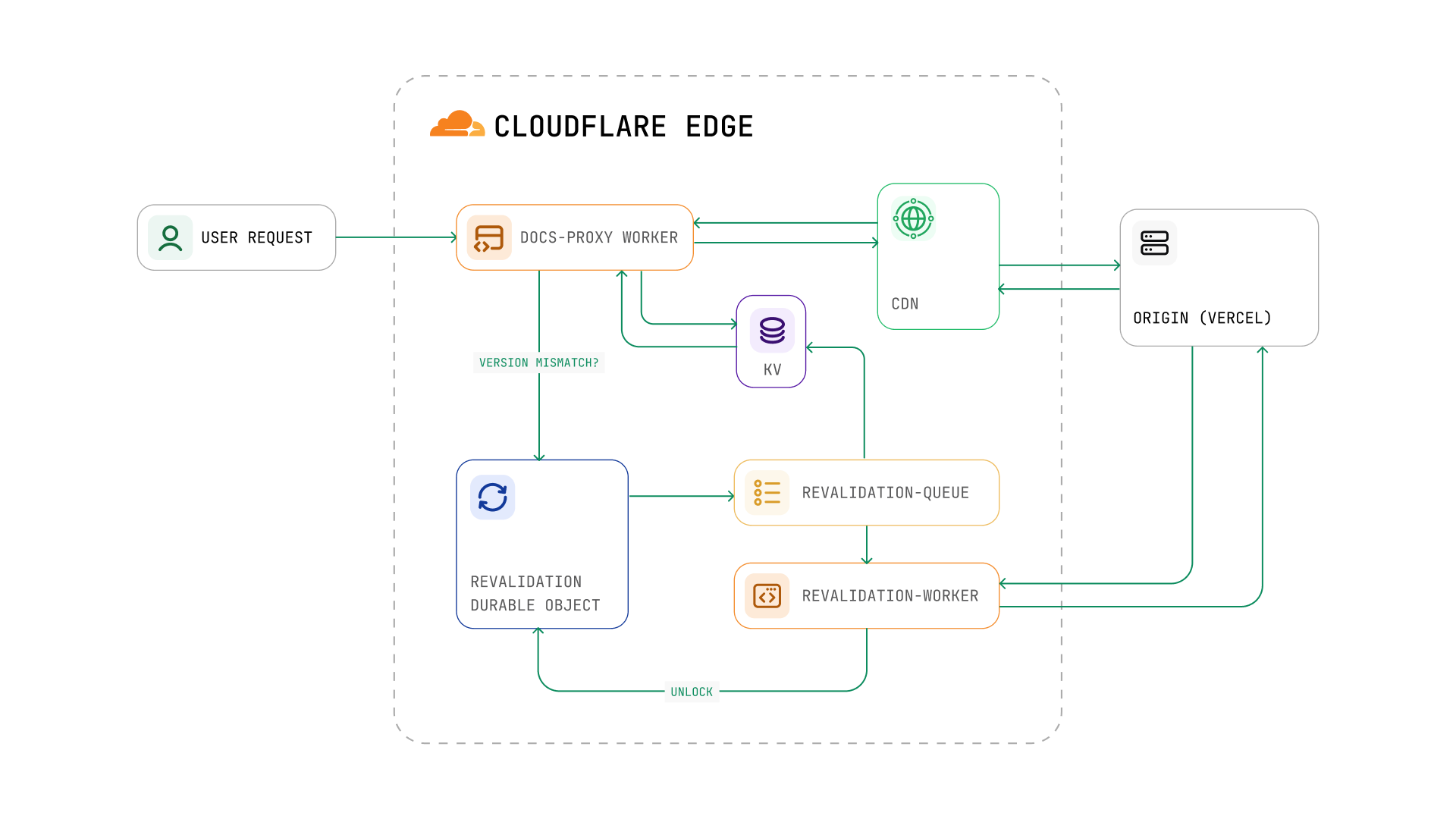

pretty cool architecture from their blog:

took a couple of liberties

- blog approach: cloudflare queues → this repo: durable objects → queues need a paid plan & im broke

- blog approach: separate workers → this repo: single worker → simpler, since im nowhere near mintlify's scale

- blog approach: cdn cache api w/ cf opts → this repo: caches.default → simpler but still edge cached

- the code isnt well structured and doesnt follow best practices yet; that comes later

live

- proxy: https://docs.vaishnav.one (cf worker)

- origin: https://nextjs-docs-one-orpin.vercel.app (vercel)

| constant | value | source |
|---|---|---|
| cache ttl | 15 days (1296000s) | blog spec |
| lock timeout | 30 minutes (1800000ms) | blog spec |
| batch size | 6 concurrent | cloudflare limit |
| cache key format | `{prefix}/{deploymentId}/{path}:{contentType}` | blog spec |
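a rough sketch of how these constants could be used, not the repo's actual code. the helper names (`buildCacheKey`, `isLockExpired`, `toBatches`) are made up for illustration, the `html`/`rsc` content types are an assumption, and so is stripping leading slashes from the path.

```typescript
// values copied from the constants table
const CACHE_TTL_SECONDS = 15 * 24 * 60 * 60; // 1296000
const LOCK_TIMEOUT_MS = 30 * 60 * 1000; // 1800000
const BATCH_SIZE = 6;

// assumption: the two content types are the "html" and "rsc" variants of a page
type ContentType = "html" | "rsc";

// hypothetical helper: {prefix}/{deploymentId}/{path}:{contentType}
// assumption: leading slashes on the path are dropped to avoid "//" in the key
function buildCacheKey(
  prefix: string,
  deploymentId: string,
  path: string,
  contentType: ContentType,
): string {
  const cleanPath = path.replace(/^\/+/, "");
  return `${prefix}/${deploymentId}/${cleanPath}:${contentType}`;
}

// hypothetical helper: a lock held for the full timeout is treated as abandoned
function isLockExpired(acquiredAtMs: number, nowMs: number): boolean {
  return nowMs - acquiredAtMs >= LOCK_TIMEOUT_MS;
}

// hypothetical helper: split work into groups of at most BATCH_SIZE items
function toBatches<T>(items: readonly T[], size: number = BATCH_SIZE): T[][] {
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}
```

because the deployment id is part of the key, a new deployment naturally misses the old entries instead of needing an explicit purge.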

| key | value | writer |
|---|---|---|
| `CONFIG:{domain}:origin` | vercel url | manual |
| `CONFIG:{domain}:prefix` | cache prefix | manual |
| `DEPLOY:{projectId}:id` | expected version | webhook |
| `DEPLOYMENT:{domain}` | active version | coordinator |
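the key layout above could be wrapped in small builders like this. this is only a sketch: `kvKeys` and `needsPrewarm` are hypothetical names, and the rule "prewarm when expected differs from active" is my assumption about how the two version keys relate.

```typescript
// hypothetical key builders matching the table above
const kvKeys = {
  origin: (domain: string): string => `CONFIG:${domain}:origin`,
  prefix: (domain: string): string => `CONFIG:${domain}:prefix`,
  expectedVersion: (projectId: string): string => `DEPLOY:${projectId}:id`,
  activeVersion: (domain: string): string => `DEPLOYMENT:${domain}`,
};

// assumption: a prewarm is due when the webhook has recorded a version
// that differs from the one the coordinator last activated
function needsPrewarm(expected: string | null, active: string | null): boolean {
  return expected !== null && expected !== active;
}
```

the `string | null` inputs mirror what a KV read returns when a key is missing, so a domain with no active version yet still triggers a prewarm once the webhook writes one.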

| method | path | purpose | response |
|---|---|---|---|
| GET | `/*` | proxy with caching | page html/rsc |
| POST | `/webhook/deployment` | receive vercel webhook | `{"status":"ok"}` |
| POST | `/prewarm` | warm cache proactively | `{"status":"queued"}` |
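since everything lives in a single worker, the routes above boil down to one dispatch step. a minimal sketch, assuming a hypothetical `matchRoute` helper; the `not-allowed` fallback for other methods is my addition, not something the table specifies.

```typescript
type Route =
  | { kind: "webhook" }
  | { kind: "prewarm" }
  | { kind: "proxy"; pathname: string }
  | { kind: "not-allowed" };

// hypothetical dispatcher: the POST routes are exact matches,
// every GET falls through to the caching proxy
function matchRoute(method: string, pathname: string): Route {
  const verb = method.toUpperCase();
  if (verb === "POST" && pathname === "/webhook/deployment") {
    return { kind: "webhook" };
  }
  if (verb === "POST" && pathname === "/prewarm") {
    return { kind: "prewarm" };
  }
  if (verb === "GET") {
    return { kind: "proxy", pathname };
  }
  // assumption: anything else is rejected
  return { kind: "not-allowed" };
}
```

in a worker's `fetch` handler this would be called with `request.method` and `new URL(request.url).pathname`.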

| header | values | meaning |
|---|---|---|
| `x-cache-status` | HIT, MISS | cache state |
| `x-vercel-deployment-id` | dpl_xxx | origin version |
| `x-vercel-project-id` | prj_xxx | project id |
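a small sketch of reading and writing these headers with the standard `Headers` class. `readOriginVersion` and `withCacheStatus` are hypothetical helpers, and it assumes the two `x-vercel-*` headers arrive on the origin response.

```typescript
type CacheStatus = "HIT" | "MISS";

interface OriginVersion {
  deploymentId: string | null;
  projectId: string | null;
}

// hypothetical helper: pull the version headers off a response (null if absent)
function readOriginVersion(originHeaders: Headers): OriginVersion {
  return {
    deploymentId: originHeaders.get("x-vercel-deployment-id"),
    projectId: originHeaders.get("x-vercel-project-id"),
  };
}

// hypothetical helper: copy the headers and tag them with the cache state,
// leaving the original Headers object untouched
function withCacheStatus(headers: Headers, status: CacheStatus): Headers {
  const tagged = new Headers(headers);
  tagged.set("x-cache-status", status);
  return tagged;
}
```

copying before setting matters because headers on a fetched response are immutable, so mutating them in place would throw.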